Mastering Data Lakes: Strategies and Best Practices for Successful Implementation

Ananya Arora

Jan 15, 2025

Introduction

A data lake is a centralized repository that allows organizations to store structured, semi-structured, and unstructured data at any scale. Unlike traditional databases and data warehouses, which require data to be preprocessed and structured before ingestion, a data lake enables raw data storage in its native format. This flexibility empowers organizations to harness the full potential of their data, enabling advanced analytics, machine learning, and data-driven decision-making.

This comprehensive blog post will explore the world of data lakes, their fundamental concepts, architecture, and best practices. We will compare data lakes with traditional data warehouses, examine the key technologies powering data lakes, and showcase real-world examples of data lake implementations. Furthermore, we will discuss the challenges and solutions in data lake deployment, share expert insights from industry professionals, and provide a beginner’s guide to getting started with data lakes.

Whether you are a data professional, business leader, or simply curious about the potential of data lakes, this blog post will equip you with the knowledge and insights needed to understand, utilize, and optimize data lakes for your organization’s data-driven initiatives.

Understanding the Concept of Data Lake

To fully grasp the concept of a data lake, let’s start by defining what it is and exploring its key characteristics and components.

A data lake is a centralized repository that allows organizations to store vast amounts of raw, unprocessed data in its native format until it is needed for analysis. Unlike traditional data storage systems, which require data to be structured and preprocessed before ingestion, a data lake enables data storage in various formats, including structured, semi-structured, and unstructured data.

The key characteristics of a data lake include:

- Schema-on-Read: In a data lake, the schema or structure of the data is applied when the data is read or analyzed rather than at the time of ingestion. This approach, known as schema-on-read, provides flexibility and agility in handling diverse data types and structures.

- Scalability: Data lakes are designed to scale horizontally, allowing organizations to store and process massive volumes of data cost-effectively. They leverage distributed storage and processing technologies to accommodate growing data demands.

- Data Diversity: Data lakes can store data from various sources, including transactional systems, social media, IoT devices, and external data providers. This ability to handle diverse data types gives organizations a holistic view of their data landscape.

- Raw Data Preservation: Data lakes store data in raw, unprocessed form, preserving the original data fidelity. This approach allows organizations to retain the full context and granularity of the data, enabling future analysis and reinterpretation as business needs evolve.
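To make schema-on-read concrete, here is a minimal Python sketch. It assumes raw events are stored as JSON lines exactly as they arrived; the sample records, the `PURCHASE_SCHEMA` mapping, and the `read_with_schema` helper are hypothetical names used only for illustration.

```python
import json

# Raw events kept in their original form (hypothetical sample data).
RAW_EVENTS = [
    '{"user_id": "42", "amount": "19.99", "ts": "2025-01-15T10:00:00"}',
    '{"user_id": "7", "amount": "5", "channel": "web"}',
]

# A schema chosen by the reader at query time: field name -> type converter.
PURCHASE_SCHEMA = {"user_id": int, "amount": float}


def read_with_schema(raw_lines, schema):
    """Parse raw JSON lines and project each record onto the reader's schema."""
    for line in raw_lines:
        record = json.loads(line)
        # Fields missing from a record become None; extra fields are ignored.
        yield {
            field: cast(record[field]) if field in record else None
            for field, cast in schema.items()
        }


rows = list(read_with_schema(RAW_EVENTS, PURCHASE_SCHEMA))
```

Because the stored data is untouched, a different consumer could read the same lines with a different schema (for example `{"channel": str}`) without any re-ingestion.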

Compared to traditional databases and data warehouses, data lakes offer several advantages:

| Aspect | Description |
|---|---|
| Flexibility | Data lakes can handle structured, semi-structured, and unstructured data, whereas traditional databases and data warehouses primarily focus on structured data. |
| Scalability | Data lakes are designed to scale seamlessly, leveraging distributed storage and processing technologies, while traditional systems may face scalability limitations. |
| Cost-effectiveness | Data lakes can store vast amounts of data cost-effectively, as they do not require extensive data preprocessing and can leverage commodity hardware. |
| Agility | Data lakes enable organizations to quickly ingest and analyze data without needing upfront schema definition and data modeling. |

Understanding data lakes’ fundamental concepts and characteristics can help organizations leverage their potential for storing, processing, and analyzing large-scale, diverse data sets.

Data Lake vs. Data Warehouse: Understanding the Differences

While data lakes and data warehouses serve as centralized repositories for storing and managing data, their approach, purpose, and characteristics differ. Understanding these differences is crucial for organizations to make informed decisions about their data architecture.

| Aspect | Data Warehouse | Data Lake |
|---|---|---|
| Purpose | A data warehouse is designed to support structured, filtered data for specific business intelligence and reporting requirements. | A data lake is designed to store vast amounts of raw, unprocessed data in its native format, enabling exploratory analysis and data discovery. |
| Data Quality | Data in a data warehouse is typically cleansed, transformed, and structured before ingestion, ensuring high data quality and consistency. | Data in a data lake is typically stored in its raw form, without upfront data cleansing or transformation, allowing for flexibility in future processing and analysis. |
| Schema | Data warehouses follow a predefined schema, where the structure and relationships of the data are defined upfront. | Data lakes follow a schema-on-read approach, where the schema is applied when the data is read or analyzed, enabling agility in handling diverse data structures. |
| Processing | Data warehouses are optimized for read-intensive, complex queries and aggregations, supporting fast data retrieval for reporting and analysis. | Data lakes are optimized for write-intensive batch processing and support various processing engines, such as Apache Spark and Hadoop MapReduce, for large-scale data processing and analysis. |
| Users | Data warehouses primarily serve business analysts, data scientists, and decision-makers who require access to structured, aggregated data for reporting and analysis. | Data lakes serve many users, including data scientists, data engineers, and business analysts, who require access to raw, detailed data for exploratory analysis, machine learning, and advanced analytics. |

Scenarios where a data lake may be preferred over a data warehouse include:

- Unstructured and Semi-Structured Data: When dealing with large volumes of unstructured or semi-structured data, such as social media data, sensor data, or log files, a data lake provides the flexibility to store and process these diverse data types.

- Data Exploration and Discovery: Data lakes enable data scientists and analysts to explore and discover insights from raw, detailed data without the constraints of predefined schemas or data models.

- Machine Learning and Advanced Analytics: Data lakes provide the foundation for machine learning and advanced analytics, as they allow access to large volumes of diverse data for model training and optimization.

On the other hand, scenarios where a data warehouse may be more suitable include:

- Structured Data and Reporting: When dealing with structured data and well-defined reporting requirements, a data warehouse provides an optimized and efficient solution for data storage, retrieval, and analysis.

- Data Governance and Consistency: Data warehouses enforce strict data governance and ensure data consistency through upfront data cleansing, transformation, and schema definition.

- Performance and Concurrency: Data warehouses are designed for fast query performance and can handle high concurrency, making them suitable for reporting and business intelligence workloads.

Organizations often implement a hybrid approach, leveraging data lakes and warehouses in their data architecture. Data lakes can serve as a staging area for raw data, while data warehouses provide a structured and optimized layer for reporting and analysis.

Architectural Foundations of Data Lakes

Building a data lake requires a well-designed architecture to handle big data’s scale, diversity, and complexity. Let’s explore the key components and considerations for building a data lake.

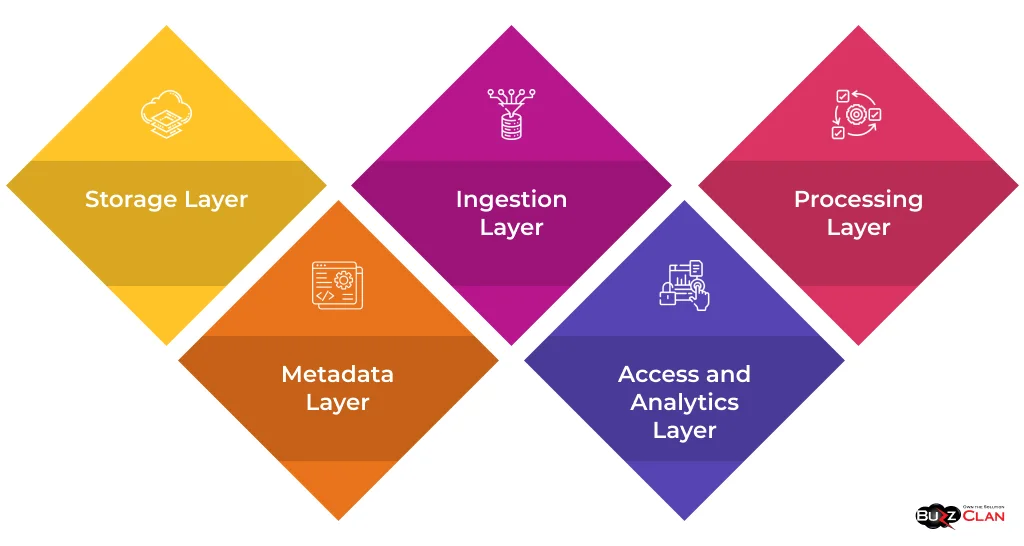

Data Lake Architecture

- Storage Layer: The storage layer stores vast amounts of raw, unprocessed data. It typically leverages distributed file systems, such as Hadoop Distributed File System (HDFS), or cloud storage solutions like Amazon S3, Azure Data Lake Storage, or Google Cloud Storage. These storage systems provide scalability, fault tolerance, and cost-effectiveness for storing large-scale data.

- Ingestion Layer: The ingestion layer collects and brings data from various sources into the data lake. It involves data acquisition, data extraction, and data loading mechanisms. Tools like Apache Kafka, Apache Flume, and Apache NiFi are commonly used for data ingestion, enabling real-time and batch data ingestion from diverse sources.

- Processing Layer: The processing layer transforms and prepares the data stored in the data lake for analysis. It leverages distributed processing frameworks, such as Apache Spark, Apache Flink, or Hadoop MapReduce, to perform data processing, transformation, and aggregation tasks. These frameworks enable parallel processing of large datasets, making it efficient to process and analyze data at scale.

- Metadata Layer: The metadata layer captures information about the data stored in the data lake, including data lineage, data provenance, data quality, and data schema. Metadata management tools like Apache Atlas or AWS Glue Data Catalog help catalog and discover data assets, enabling data governance and exploration.

- Access and Analytics Layer: The access and analytics layer provides interfaces and tools for users to interact with and analyze the data in the data lake. It includes query engines, such as Apache Hive or Presto, for SQL-like querying, as well as data visualization and business intelligence tools, such as Tableau or Power BI, for data exploration and reporting.

Considerations for Building a Data Lake

- Storage Options: The right storage option depends on scalability, cost, and data access patterns. Cloud storage solutions like Amazon S3, Azure Data Lake Storage, and Google Cloud Storage provide scalable and cost-effective options for storing large volumes of data.

- Data Ingestion: It is crucial to select the appropriate data ingestion tools and techniques based on the data sources, data formats, and ingestion frequency. Real-time data ingestion tools like Apache Kafka or NiFi can handle streaming data. In contrast, batch data ingestion tools like Apache Sqoop or AWS Glue can handle periodic data loads.

- Data Processing: Choose processing frameworks and tools based on data processing requirements, such as batch processing, real-time processing, or machine learning workloads. Apache Spark has emerged as a popular choice for data processing in data lakes due to its fast and flexible processing capabilities.

- Data Governance: Implementing data governance practices, including data quality management, data security, and data privacy, is essential for ensuring the reliability, integrity, and compliance of data in the data lake. Data governance frameworks and tools, such as Apache Atlas or Collibra, can help enforce data governance policies.

- Scalability and Performance: It is crucial to design the data lake architecture to scale horizontally and handle growing data volumes and processing requirements. Leveraging distributed storage and processing technologies and cloud-based elastic scaling can ensure the data lake’s scalability and performance.

By understanding these architectural components and considerations, organizations can design and implement a robust and scalable data lake solution that meets their data storage, processing, and analysis needs.

Data Lakes Across Cloud Providers

Cloud platforms have become popular for implementing data lakes due to their scalability, flexibility, and cost-effectiveness. Leading cloud providers, such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), offer comprehensive services and solutions for building and managing data lakes. Let’s compare the data lake offerings across these cloud providers.

| Aspect | AWS Data Lake | Azure Data Lake | Google Data Lake |
|---|---|---|---|
| Storage | Amazon S3 (Simple Storage Service) provides scalable and durable object storage for storing data in a data lake. It offers features like versioning, lifecycle management, and access control. | Azure Data Lake Storage (ADLS) is a scalable, secure storage service optimized for big data analytics workloads. It offers features like hierarchical namespace, access control, and data encryption. | Google Cloud Storage provides a unified object storage service for storing data in a data lake. It offers features like versioning, lifecycle management, and access control. |
| Processing | AWS offers various processing services, including Amazon EMR (Elastic MapReduce) for big data processing using Hadoop and Spark, AWS Glue for ETL (Extract, Transform, Load) jobs, and Amazon Athena for serverless SQL querying. | Azure HDInsight is a fully managed cloud service running open-source analytics frameworks, including Hadoop, Spark, and Hive. Azure Databricks provides a collaborative platform for big data processing and machine learning. | Google Cloud Dataproc is a fully managed cloud service running Apache Hadoop, Spark, and other big data processing frameworks. Google Cloud Dataflow is a serverless, fully managed stream and batch data processing service. |
| Data Catalog | AWS Glue Data Catalog provides a centralized metadata repository for discovering, managing, and accessing data in the data lake. | Azure Data Catalog is a fully managed service for registering, discovering, and understanding data assets across the data lake. | Google Cloud Data Catalog is a fully managed and scalable metadata management service for discovering, understanding, and managing data assets. |
| Analytics | Amazon QuickSight is a scalable business intelligence service for data visualization and analysis, while Amazon SageMaker enables machine learning and predictive analytics. | Azure Synapse Analytics is a limitless analytics service that combines data integration, enterprise data warehousing, and big data analytics. Azure Machine Learning enables building, training, and deploying machine learning models. | Google BigQuery is a serverless, highly scalable, cost-effective cloud data warehouse for analytics and BI. Google Cloud AI Platform enables the building, training, and deploying of machine learning models. |

Unique features and considerations

- AWS Lake Formation: AWS offers Lake Formation, a fully managed service designed to make it easier to set up a secure data lake in days. It automates data discovery, cleansing, and preparation tasks.

- Microsoft Purview: Microsoft Purview (formerly Azure Purview) is a unified data governance service that helps manage and govern data across on-premises, multi-cloud, and software-as-a-service (SaaS) environments.

- Google BigLake: Google BigLake is a storage engine that provides a unified storage interface across data warehouses, data lakes, and databases, enabling seamless data access and analytics.

When choosing a cloud provider for a data lake, organizations should consider scalability, cost, integration with existing tools and services, and each provider’s specific features and capabilities. It is important to evaluate the data lake offerings in the context of the organization’s data requirements, technical skills, and overall cloud strategy.

Key Technologies Powering Data Lakes

Data lakes rely on various technologies and frameworks to enable data storage, processing, and analysis at scale. Let’s explore some of the key technologies powering modern data lakes.

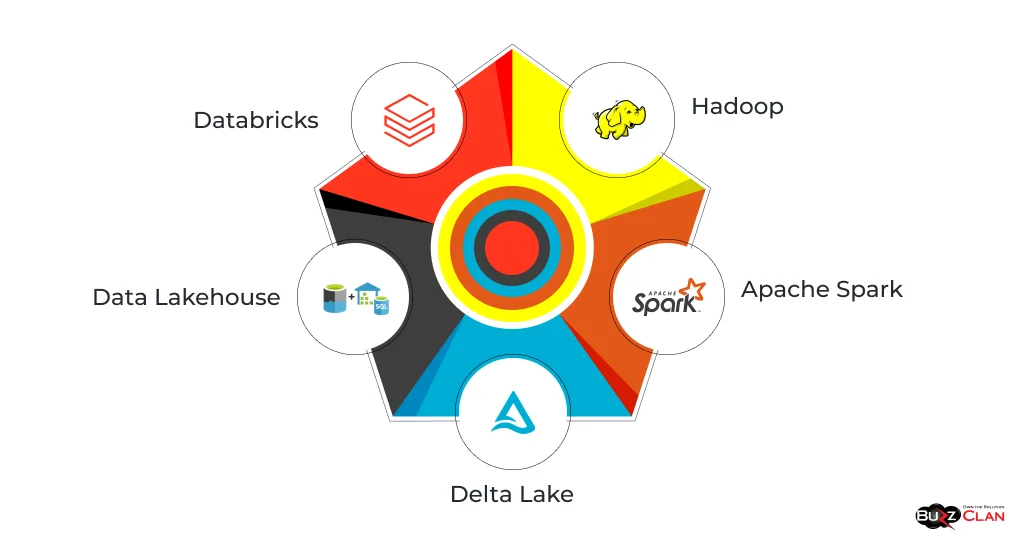

Hadoop

- Apache Hadoop is an open-source framework for the distributed storage and processing of large datasets across clusters of commodity hardware.

- Hadoop Distributed File System (HDFS) provides a scalable and fault-tolerant storage layer for data lakes, enabling the storage of structured, semi-structured, and unstructured data.

- Hadoop MapReduce is a programming model for processing and generating large datasets in parallel, enabling distributed data processing in the data lake.

Apache Spark

- Apache Spark is a fast and general-purpose cluster computing system for big data processing and analytics.

- Spark provides in-memory computing capabilities, enabling faster processing and iterative algorithms compared to Hadoop MapReduce.

- Spark supports various data processing tasks, including batch processing, real-time streaming, machine learning, and graph processing.

- Spark SQL provides a SQL interface for structured data processing, enabling querying and data analysis in the data lake.

Delta Lake

- Delta Lake is an open-source storage layer that brings reliability, scalability, and performance to data lakes.

- It provides ACID (Atomicity, Consistency, Isolation, Durability) transactions, data versioning, and schema enforcement on top of data lakes.

- Delta Lake enables data lake use cases that require data consistency, data quality, and data governance, such as data warehousing and machine learning.
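The ideas behind schema enforcement, all-or-nothing commits, and data versioning can be sketched in a few lines of plain Python. The `VersionedTable` class below is a hypothetical in-memory toy, not Delta Lake's API or storage format; it only illustrates the behavior described above.

```python
class VersionedTable:
    """Toy append-only table with schema enforcement and version history."""

    def __init__(self, schema):
        self.schema = schema      # column name -> expected Python type
        self._commits = []        # each commit is a list of validated rows

    def append(self, rows):
        """Validate every row first, then commit all rows or none of them."""
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns {sorted(row)} do not match schema")
            for column, expected in self.schema.items():
                if not isinstance(row[column], expected):
                    raise TypeError(f"{column!r} must be {expected.__name__}")
        # Reached only if the whole batch is valid, so a bad row rejects the batch.
        self._commits.append([dict(row) for row in rows])
        return len(self._commits)  # the new version number

    def read(self, version=None):
        """Return all rows as of the given version (latest by default)."""
        upto = len(self._commits) if version is None else version
        return [row for commit in self._commits[:upto] for row in commit]
```

Reading with `version=1` after two commits returns only the first batch, which is the essence of "time travel" over a versioned table.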

Databricks

- Databricks is a unified analytics platform that combines the power of Apache Spark with a user-friendly interface and collaborative workspace.

- It provides a fully managed, cloud-based environment for data engineering, data science, and machine learning workloads.

- Databricks integrates with various data sources, including data lakes, data warehouses, and streaming platforms, enabling end-to-end data processing and analytics.

Data Lakehouse

- The data lakehouse is an emerging architectural pattern that combines the best of data lakes and data warehouses.

- It combines data lakes’ schema-on-read flexibility and cost-effectiveness with data warehouses’ data management and performance optimization capabilities.

- Data lakehouses enable SQL analytics, business intelligence, and machine learning directly on top of the data lake, eliminating the need for separate data movement and transformation processes.

These technologies and frameworks form the foundation of modern data lakes, enabling organizations to store, process, and analyze large-scale and diverse datasets efficiently. By choosing the right combination of technologies for their specific requirements, organizations can build robust and scalable data lake solutions that drive data-driven insights and decision-making.

Managing Data Lakes: Best Practices and Strategies

Effective data lake management ensures data quality, governance, and security. Let’s explore some best practices and strategies for managing data lakes.

Data Governance

- Establish a data governance framework that defines policies, procedures, and standards for data management in the data lake.

- Assign clear roles and responsibilities for data ownership, stewardship, and access control.

- Implement data lineage and data provenance tracking to understand the origin, transformation, and usage of data in the data lake.

- Define data quality metrics and implement data validation and cleansing processes to ensure data accuracy, completeness, and consistency.

- Establish data retention policies and implement data archival and deletion processes to manage the data lifecycle in the data lake.

Data Security and Privacy

- Implement strong authentication and authorization mechanisms to control access to data in the data lake based on user roles and permissions.

- Encrypt sensitive data at rest and in transit to protect against unauthorized access and data breaches.

- Implement data masking and data anonymization techniques to protect sensitive information and comply with data privacy regulations.

- Establish data auditing and monitoring processes to detect and respond to security incidents and anomalies.

- Review and update data security policies and procedures to address emerging threats and compliance requirements.
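As a rough illustration of masking and pseudonymization, the sketch below uses only Python's standard library. The helper names `mask_email` and `pseudonymize` and the idea of passing the key as a function argument are assumptions for this example; in practice the key would come from a secrets manager, and the right technique depends on the applicable regulations.

```python
import hashlib
import hmac


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain


def pseudonymize(value, secret_key):
    """Replace a value with a stable keyed hash (HMAC-SHA256).

    The same input and key always give the same token, so records can still
    be joined, but the token cannot be recomputed without the key.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
```

Masking suits display and debugging use cases, while keyed pseudonymization suits analytics that need a consistent identifier without exposing the original value.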

Data Discovery and Metadata Management

- Implement a metadata management system to capture and maintain metadata about the data in the data lake, including data descriptions, data schemas, and data lineage.

- Provide a centralized data catalog or data discovery tool to enable users to easily search, discover, and understand the data assets in the data lake.

- Encourage data producers to provide comprehensive and accurate metadata when ingesting data into the data lake to facilitate data discovery and understanding.

- Establish data governance processes to ensure the quality and consistency of metadata across the data lake.
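The following sketch shows, in miniature, what a data catalog records and how search and lineage lookups might work. `DatasetEntry`, `DataCatalog`, and all dataset names are hypothetical; real catalogs such as those mentioned earlier expose their own APIs.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    name: str
    location: str
    description: str
    schema: dict
    upstream: list = field(default_factory=list)  # names of source datasets


class DataCatalog:
    """Minimal in-memory catalog supporting keyword search and lineage."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def search(self, keyword):
        """Return names of datasets whose name or description mentions keyword."""
        keyword = keyword.lower()
        return sorted(
            e.name for e in self._entries.values()
            if keyword in e.name.lower() or keyword in e.description.lower()
        )

    def lineage(self, name):
        """Return every dataset that the named dataset depends on, transitively."""
        seen = []
        stack = list(self._entries[name].upstream)
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.append(current)
            if current in self._entries:
                stack.extend(self._entries[current].upstream)
        return sorted(seen)
```

Registering an entry at ingestion time, with its upstream sources filled in, is what makes later discovery and impact analysis possible.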

Data Quality and Data Profiling

- Perform regular data profiling to assess data quality, completeness, and consistency in the data lake.

- Implement data validation rules and data quality checks to identify and remediate data quality issues.

- Establish data cleansing and data enrichment processes to improve the quality and usability of data in the data lake.

- Monitor data quality metrics and establish data quality thresholds to trigger alerts and remediation actions.
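A simple form of profiling with threshold-based alerts might look like the sketch below. The function names and the choice of metrics (null rate and distinct count) are illustrative assumptions; production profiling typically covers many more checks.

```python
def profile_column(rows, column):
    """Compute basic quality metrics for one column of a list of dict rows."""
    values = [row.get(column) for row in rows]
    total = len(values)
    non_null = [v for v in values if v is not None]
    return {
        "row_count": total,
        "null_rate": (total - len(non_null)) / total if total else 0.0,
        "distinct_count": len(set(non_null)),
    }


def check_thresholds(profile, max_null_rate):
    """Return a list of alert messages for metrics that breach their threshold."""
    alerts = []
    if profile["null_rate"] > max_null_rate:
        alerts.append(
            f"null_rate {profile['null_rate']:.2f} exceeds {max_null_rate:.2f}"
        )
    return alerts
```

Running the profile on a schedule and comparing it to agreed thresholds turns data quality from a one-off audit into an ongoing check.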

Data Partitioning and Data Organization

- Implement an effective data partitioning strategy to optimize data storage and retrieval based on data access patterns and query requirements.

- Organize data in the data lake using a structured hierarchy or folder structure based on data domains, data types, and usage patterns.

- Use time-based or hash-based partitioning techniques to improve query performance and data management.

- Review and optimize data partitioning and organization strategies to adapt to changing data volumes and access patterns.
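To illustrate time-based and hash-based partitioning, the sketch below builds storage paths in the `key=value` directory style commonly used with Hive-compatible engines. The bucket name, the helper names, and the bucket count are assumptions made for the example.

```python
import hashlib
from datetime import datetime


def time_partition_path(base, event_time):
    """Build a year/month/day partition path from an event timestamp."""
    return (
        f"{base}/year={event_time:%Y}"
        f"/month={event_time:%m}/day={event_time:%d}"
    )


def hash_partition_path(base, key, num_buckets):
    """Assign a key to one of num_buckets partitions using a stable hash.

    SHA-256 is used instead of Python's built-in hash(), which is salted
    per process for strings and would not give repeatable bucket numbers.
    """
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % num_buckets
    return f"{base}/bucket={bucket:03d}"
```

Time-based paths let queries that filter on a date range skip unrelated directories, while hash buckets spread high-cardinality keys such as customer IDs evenly across files.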

Data Lifecycle Management

- Define data lifecycle policies that specify how data should be managed from ingestion to archival or deletion.

- Implement data retention policies to ensure that data is retained only for the necessary duration based on legal, regulatory, and business requirements.

- Establish data archival processes to move older or less frequently accessed data to cost-effective storage tiers.

- Implement data deletion processes to securely remove data that is no longer needed or must no longer be retained.
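A lifecycle policy can often be reduced to a small decision rule. The sketch below assumes a policy based purely on data age, with hypothetical thresholds passed in as parameters; real policies usually also account for legal holds and access frequency.

```python
from datetime import date


def lifecycle_action(created, today, archive_after_days, delete_after_days):
    """Decide what to do with a dataset based on its age in days."""
    age = (today - created).days
    if age >= delete_after_days:
        return "delete"
    if age >= archive_after_days:
        return "archive"
    return "keep"
```

For example, with a 90-day archive threshold and a 365-day deletion threshold, data created two weeks ago is kept, data from a few months ago moves to a cheaper tier, and data older than a year is removed.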

By following these best practices and strategies, organizations can manage their data lakes effectively, ensuring data quality, governance, and security. Regular monitoring, auditing, and continuous improvement of data lake management processes are essential to adapting to evolving data requirements and maintaining the data lake’s value and usability over time.

Data Lakes in Practice: Use Cases and Examples

Data lakes have found widespread adoption across various industries, enabling organizations to unlock the value of their data assets. Let’s explore some real-world examples and use cases of data lake applications.

Healthcare

- A healthcare provider implemented a data lake to integrate and analyze patient data from electronic health records (EHRs), medical imaging systems, and wearable devices. By combining structured and unstructured data in the data lake, they gained insights into patient outcomes, identified risk factors, and personalized treatment plans.

- The data lake enabled the healthcare provider to apply machine learning algorithms to predict patient readmissions, optimize resource allocation, and improve the overall quality of care. They also complied with data privacy regulations by implementing data masking and access controls in the data lake.

Marketing and Customer 360

- A retail company built a data lake to unify customer data from various sources, including transactional systems, web analytics, social media, and customer service interactions. By creating a comprehensive customer 360 view in the data lake, they were able to gain a holistic understanding of customer behaviors, preferences, and journeys.

- The data lake enabled the retail company to perform advanced customer segmentation, personalized marketing campaigns, and targeted product recommendations. They optimized their marketing spend, improved customer engagement, and drove higher conversion rates and customer lifetime value.

Fraud Detection and Risk Management

- A financial institution implemented a data lake to integrate and analyze data from various sources, including transactional systems, customer profiles, and external data feeds. By applying machine learning algorithms to the data in the data lake, they could detect fraudulent activities and assess credit risk in real time.

- The data lake enabled the financial institution to improve the accuracy and efficiency of its fraud detection and risk assessment processes. It reduced false positives, minimized financial losses, and complied with regulatory requirements for anti-money laundering (AML) and know-your-customer (KYC) compliance.

Supply Chain Optimization

- A manufacturing company built a data lake to integrate and analyze data from various sources, including sensor data from production lines, supplier data, and logistics data. By combining and analyzing this data in the data lake, they were able to optimize their supply chain operations and improve overall efficiency.

- The data lake enabled the manufacturing company to perform predictive maintenance on their equipment, reducing downtime and maintenance costs. They also optimized inventory levels, improved demand forecasting, and streamlined logistics processes, resulting in significant cost savings and improved customer satisfaction.

These examples demonstrate the diverse applications and benefits of data lakes across industries. By leveraging data lakes to integrate and analyze large volumes of structured and unstructured data, organizations can gain valuable insights, drive innovation, and make data-driven decisions to improve operational efficiency, customer experience, and business outcomes.

The Future of Data Lakes: Trends and Innovations

As data grows in volume, variety, and velocity, emerging trends and innovations shape the future of data lakes. Let’s explore some of the key trends and developments driving this evolution.

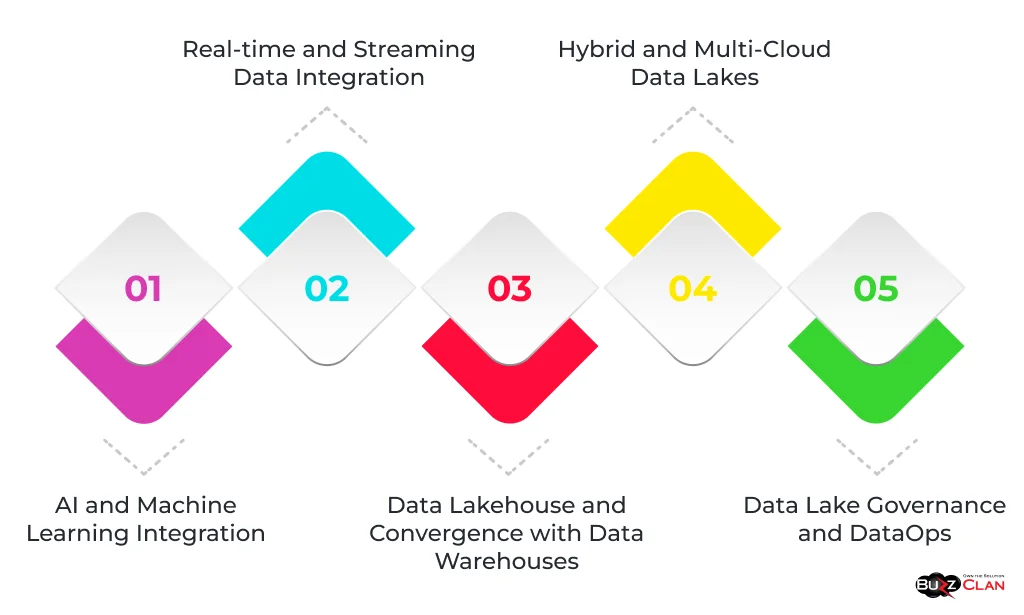

AI and Machine Learning Integration

- Integrating artificial intelligence (AI) and machine learning (ML) capabilities with data lakes is becoming increasingly prevalent. Data lakes provide the foundation for storing and processing large volumes of data required for training and deploying AI and ML models.

- Advances in technologies like deep learning, natural language processing (NLP), and computer vision enable organizations to extract valuable insights and automate decision-making processes using the data stored in data lakes.

- Data lakes are becoming the central hub for AI and ML workloads, enabling seamless integration of data ingestion, data preparation, model training, and model deployment pipelines.

Real-time and Streaming Data Integration

- The growing importance of real-time analytics and decision-making drives the integration of streaming data sources into data lakes. Data lakes are evolving to support the ingestion and processing of real-time data streams alongside batch data.

- Technologies like Apache Kafka, Apache Flink, and Apache Spark Streaming enable organizations to capture, process, and analyze streaming data in real-time, enabling use cases like real-time fraud detection, predictive maintenance, and personalized recommendations.

- The convergence of data lakes and streaming data enables organizations to build real-time data pipelines and deliver actionable insights with minimal latency.
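One building block of streaming analytics is windowed aggregation. The plain-Python sketch below counts events per key in fixed (tumbling) time windows; it is only a conceptual illustration and does not use the APIs of Kafka, Flink, or Spark. The function name and the event shape `(epoch_seconds, key)` are assumptions.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key) for fixed, non-overlapping windows.

    events is an iterable of (epoch_seconds, key) tuples.
    """
    counts = defaultdict(int)
    for timestamp, key in events:
        # Round the timestamp down to the start of its window.
        window_start = timestamp - (timestamp % window_seconds)
        counts[(window_start, key)] += 1
    return dict(counts)
```

Stream processing engines apply the same idea continuously and at scale, adding concerns this sketch leaves out, such as late-arriving events and fault tolerance.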

Data Lake Governance and DataOps

- As data lakes grow in size and complexity, the importance of data governance and DataOps practices is increasingly recognized. DataOps, which combines data management, integration, and operations, is emerging as a key approach to ensuring data quality, security, and consistency in data lakes.

- Automated data discovery, data lineage tracking, and data cataloging tools are being integrated into data lakes to improve data governance and enable self-service data access for users.

- Adopting data governance frameworks, policies, and best practices is becoming crucial to ensure data reliability, integrity, and compliance in data lakes.

Hybrid and Multi-Cloud Data Lakes

- Organizations increasingly adopt hybrid and multi-cloud architectures for their data lake implementations. Hybrid data lakes combine on-premises and cloud-based storage and processing capabilities, enabling organizations to leverage the scalability and cost-efficiency of the cloud while maintaining control over sensitive data on-premises.

- Multi-cloud data lakes distribute data and workloads across multiple cloud providers to avoid vendor lock-in, improve data resilience, and optimize costs and performance.

- Data lake solutions are evolving to support seamless data integration, movement, and processing across hybrid and multi-cloud environments.

Data Lakehouse and Convergence with Data Warehouses

- The data lakehouse architecture, which combines the best of data lakes and data warehouses, is gaining traction as a way to enable ACID transactions, data consistency, and performance optimization directly on top of data lakes.

- Data lakehouses provide a unified platform for data storage, processing, and analysis, eliminating the need for separate data movement and transformation processes between data lakes and data warehouses.

- The convergence of data lakes and data warehouses is enabling organizations to build end-to-end data pipelines and deliver analytics and BI capabilities directly on the data lake, simplifying data architectures and reducing data silos.

These trends and innovations highlight data lakes’ ongoing evolution and increasing importance in the data landscape. As organizations continue to harness the power of big data, the future of data lakes lies in the seamless integration of AI and ML capabilities, real-time data processing, robust data governance, and hybrid and multi-cloud architectures.

Challenges and Solutions in Data Lake Deployment

While data lakes offer significant benefits for managing and analyzing large volumes of diverse data, they also present several challenges that organizations must navigate. Let’s explore some common challenges and solutions in data lake deployment.

Data Quality and Consistency

- Challenge: Ensuring data quality and consistency in a data lake can be difficult due to the diverse and unstructured nature of the data. Data inconsistencies, errors, and duplications can lead to inaccurate insights and decision-making.

- Solution: Implement data quality and data validation processes to identify and address data quality issues. Establish data governance policies and procedures to ensure data consistency and accuracy. Regularly profile and monitor data to detect anomalies and inconsistencies.

Data Security and Privacy

- Challenge: Securing sensitive data and ensuring data privacy compliance in a data lake can be challenging, especially when dealing with large volumes of unstructured data from various sources.

- Solution: Implement strong authentication and access control mechanisms to protect data in the data lake. Encrypt sensitive data at rest and in transit. Apply data masking and anonymization techniques to protect personally identifiable information (PII). Regularly audit and monitor data access and usage to detect and respond to security incidents.

Data Integration and Interoperability

- Challenge: Integrating data from diverse sources and systems into a data lake can be complex, especially when dealing with different data formats, schemas, and APIs.

- Solution: Utilize data integration tools and frameworks that support various data sources and formats. Establish data ingestion pipelines and ETL processes to extract, transform, and load data into the data lake. Leverage data virtualization and data federation techniques to enable seamless data access and interoperability across different systems.
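The extract, transform, and load steps can be sketched with the standard library alone. The example below assumes two hypothetical sources, one CSV and one JSON lines, that use different field names for the same concepts; the helper names and field mappings are illustrative.

```python
import csv
import io
import json


def extract_csv(text):
    """Read CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_json_lines(text):
    """Read one JSON object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def transform(record, field_map):
    """Rename source fields to common names; unmapped fields are dropped."""
    return {target: record.get(source) for source, target in field_map.items()}


def load(records, target):
    """Append records to the target store (a plain list in this sketch)."""
    target.extend(records)
```

Keeping the per-source field mapping as data rather than code makes it easier to onboard a new source without touching the pipeline logic.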

Scalability and Performance

- Challenge: As data volumes grow, ensuring the scalability and performance of the data lake can become challenging. Queries and analytics workloads may slow down or fail if the data lake is not designed to handle large-scale data processing.

- Solution: Implement a scalable and distributed data lake architecture to handle growing data volumes and concurrent workloads. Leverage technologies like Apache Spark and Hadoop for parallel processing and distributed computing. Optimize data partitioning and indexing strategies to improve query performance. Utilize cloud-based services that provide elastic scalability and on-demand resources.
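
Partitioning is easier to picture with an example. The sketch below builds date-based partition paths in the key=value directory style popularized by Hive, then skips partitions outside a requested date range, which is the basic idea behind partition pruning. The dataset name and helper functions are assumptions for illustration; query engines perform this step internally.

```python
from datetime import date


def partition_path(dataset, day):
    """Build a Hive-style partition path (key=value directories) for a date."""
    return f"{dataset}/year={day.year:04d}/month={day.month:02d}/day={day.day:02d}"


def parse_partition(path):
    """Recover the date from a path produced by partition_path."""
    parts = dict(p.split("=", 1) for p in path.split("/") if "=" in p)
    return date(int(parts["year"]), int(parts["month"]), int(parts["day"]))


def prune(paths, start, end):
    """Keep only partitions whose date falls within [start, end]."""
    return [p for p in paths if start <= parse_partition(p) <= end]
```

A query that filters on the partition date then only needs to read the files under the surviving paths instead of scanning the whole dataset.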

Data Discovery and Governance

- Challenge: With vast amounts of data stored in a data lake, enabling users to discover, understand, and govern data assets can be difficult. A lack of metadata and data lineage can hinder data discovery and governance efforts.

- Solution: Implement a data catalog and metadata management system to capture and maintain metadata about the data in the data lake. Provide a user-friendly data discovery interface that allows users to search, browse, and understand data assets. Establish data governance policies and procedures to ensure data quality, security, and compliance. Regularly review and update metadata and data lineage information.
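
The sketch below shows, in miniature, what a data catalog records: a name, a storage location, a description, tags, and the upstream datasets each entry was derived from. The class and field names are hypothetical, and a production catalog would persist this metadata rather than hold it in memory.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    location: str
    description: str
    tags: set = field(default_factory=set)
    upstream: list = field(default_factory=list)  # names of source datasets


class DataCatalog:
    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def search(self, term):
        """Return names of entries whose name, description, or tags match the term."""
        term = term.lower()
        matches = []
        for entry in self._entries.values():
            tags = {t.lower() for t in entry.tags}
            if term in entry.name.lower() or term in entry.description.lower() or term in tags:
                matches.append(entry.name)
        return sorted(matches)

    def lineage(self, name):
        """Return all transitive upstream dataset names for an entry."""
        seen = []
        stack = list(self._entries[name].upstream)
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.append(current)
            if current in self._entries:
                stack.extend(self._entries[current].upstream)
        return sorted(seen)
```

With entries registered for a raw dataset, a cleaned version, and a report built on top, lineage on the report would list both upstream datasets, which is the question users typically ask when a number looks wrong.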

Skill Gap and Complexity

- Challenge: Implementing and managing a data lake requires specialized skills and expertise in big data technologies, integration, and governance. Organizations may struggle to find and retain talent with the necessary skill sets.

- Solution: Invest in training and upskilling programs to build in-house expertise in data lake technologies and best practices. Partner with experienced data lake consultants and service providers to accelerate implementation and knowledge transfer. Utilize managed services and platforms that abstract away the complexity of underlying technologies and provide user-friendly interfaces for data management and analytics.

By proactively addressing these challenges and implementing effective solutions, organizations can successfully deploy and manage data lakes to derive maximum value from their data assets. Continuous monitoring, optimization, and adaptation to evolving data requirements and best practices are key to the long-term success of a data lake implementation.

Starting with Data Lakes: A Guide for Beginners

If you are new to data lakes and looking to get started, here’s a beginner’s guide to help you understand and leverage data lakes effectively.

Step 1: Understand the Fundamentals

- Familiarize yourself with the basic concepts of data lakes, such as data storage, processing, and governance.

- Learn about the key characteristics and benefits of data lakes, such as schema-on-read, data flexibility, and cost-effectiveness.

- Explore the differences between data lakes and traditional data warehouses to understand when to use each approach.
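
Schema-on-read, mentioned above, can be sketched in a few lines: raw records are stored exactly as they arrive, and each reader applies the schema it needs at query time. The sample records and the schema format (a mapping from field name to a casting function) are assumptions for this example.

```python
import json

# Raw lines are stored untouched; note the inconsistent shapes.
RAW_LINES = [
    '{"user": "ana", "age": "31", "city": "Pune"}',
    '{"user": "raj", "age": 45}',
    '{"user": "li", "signup": "2025-01-15"}',
]


def read_with_schema(lines, schema):
    """Project each raw record onto {field: caster}; missing fields become None."""
    for line in lines:
        raw = json.loads(line)
        yield {
            name: (cast(raw[name]) if name in raw else None)
            for name, cast in schema.items()
        }
```

Two consumers can read the same raw lines with different schemas, for instance one asking for user and age and another for user and city, without the stored data changing. A data warehouse would instead enforce a single schema before the data is written.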

Step 2: Identify Business Use Cases

- Determine the specific business problems or opportunities a data lake can address in your organization.

- Identify the data sources and types relevant to your use cases, such as structured data from transactional systems, unstructured data from social media, or streaming data from IoT devices.

- Define the expected outcomes and benefits of implementing a data lake, such as improved analytics, data-driven decision-making, or operational efficiency.

Step 3: Assess Your Data Landscape

- Evaluate your current data landscape, including existing data sources, volumes, and formats.

- Identify the data silos and integration challenges that a data lake can help overcome.

- Assess the quality and consistency of your data sources to determine the necessary data cleansing and transformation steps.

Step 4: Choose the Right Technologies

- Explore the technologies and frameworks available for building and managing data lakes, such as Hadoop, Apache Spark, and cloud-based services like Amazon S3 or Azure Data Lake Storage.

- Evaluate the scalability, performance, and cost of each candidate technology against your requirements.

- Consider your organization’s skill sets and expertise to select technologies that align with your capabilities.

Step 5: Design and Implement the Data Lake

- Develop a data lake architecture that aligns with your business requirements and technology choices.

- Establish data ingestion pipelines to extract, transform, and load data from various sources into the data lake.

- Implement data storage and processing components, such as distributed file systems, data processing engines, and data query tools.

- Define data governance policies and procedures to ensure data quality, security, and compliance.

Step 6: Enable Data Discovery and Analytics

- Implement a data catalog and metadata management system to enable data discovery and understanding.

- Provide self-service analytics capabilities to empower users to explore and analyze data in the data lake.

- Establish data access controls and security measures to protect sensitive data and ensure compliance with regulations.
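
As one simple picture of the access controls in the last point, the sketch below restricts each role to an allowed set of columns and denies roles that have no policy at all. The roles, columns, and policy structure are hypothetical; managed platforms typically provide this through their own permission systems.

```python
# Hypothetical policy: which columns each role may read from a "customers" dataset.
POLICY = {
    "analyst": {"customer_id", "country", "lifetime_value"},
    "support": {"customer_id", "email", "country"},
}


def authorized_view(rows, role, policy=POLICY):
    """Return rows restricted to the columns the role may read; unknown roles are denied."""
    if role not in policy:
        raise PermissionError(f"role {role!r} has no access")
    allowed = policy[role]
    return [{k: v for k, v in row.items() if k in allowed} for row in rows]
```

Denying by default, as done here for unknown roles, is a deliberate choice: a missing policy entry results in an error instead of silently exposing data.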

Step 7: Monitor and Optimize

- Continuously monitor the performance, scalability, and data quality of your data lake.

- Identify and address any bottlenecks, data inconsistencies, or performance issues.

- Optimize data storage and processing strategies based on usage patterns and evolving requirements.

- Review and update data governance policies and procedures to ensure ongoing effectiveness.
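
Monitoring can start small. The sketch below flags datasets that have not been updated recently or that contain fewer rows than expected. The statistics layout and the default thresholds (24 hours, at least one row) are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta


def check_health(stats, now, max_age=timedelta(hours=24), min_rows=1):
    """Return alert messages for datasets that look stale or too small.

    `stats` maps dataset name -> {"last_updated": datetime, "row_count": int}.
    """
    alerts = []
    for name, s in sorted(stats.items()):
        if now - s["last_updated"] > max_age:
            alerts.append(f"{name}: stale")
        if s["row_count"] < min_rows:
            alerts.append(f"{name}: too few rows")
    return alerts
```

Running a check like this on a schedule and reviewing the alerts is one lightweight way to catch pipeline failures before users notice missing data.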

Recommended Resources

Books:

- “Data Lake for Enterprises” by Tomcy John and Pankaj Misra

- “Designing Data-Intensive Applications” by Martin Kleppmann

- “Hadoop: The Definitive Guide” by Tom White

Online Courses:

- “Introduction to Big Data” on Coursera

- “Data Lake Fundamentals” on Pluralsight

- “AWS Data Lake Formation” by AWS

Online Communities:

- Apache Hadoop Community

- Data Science Central

- DataCamp Community

By following this beginner’s guide and leveraging the recommended resources, you can start your journey into the world of data lakes. Remember that building a data lake is an iterative process that requires continuous learning, experimentation, and adaptation. Engage with the data lake community, seek expert guidance, and stay updated with the latest trends and best practices to get the most from your data lake initiatives.

Conclusion

In this comprehensive blog post, we have explored the vast and transformative world of data lakes. We have delved into the fundamental concepts, architecture, and best practices of data lakes, highlighting their significance in the era of big data.

We have learned that data lakes provide a centralized repository for storing and processing large volumes of structured, semi-structured, and unstructured data, enabling organizations to unlock the full potential of their data assets. By leveraging the schema-on-read approach and the flexibility of data lakes, organizations can gain valuable insights, drive innovation, and make data-driven decisions.

Throughout the blog post, we have compared data lakes with traditional data warehouses, emphasizing the unique characteristics and benefits of data lakes. We have explored the key technologies powering data lakes, such as Hadoop, Apache Spark, and Delta Lake, and discussed the data lake offerings of major cloud providers like AWS, Azure, and Google Cloud.

We have also delved into the best practices and strategies for managing data lakes effectively, covering crucial aspects such as data governance, security, discovery, and quality. Real-world examples and use cases have demonstrated the practical applications and benefits of data lakes across industries, from healthcare and marketing to fraud detection and supply chain optimization.

Looking ahead, we have examined the future trends and innovations shaping the evolution of data lakes, including the integration of AI and machine learning, real-time data processing, hybrid and multi-cloud architectures, and the convergence with data warehouses through the data lakehouse paradigm.

Furthermore, we have addressed the challenges and solutions in data lake deployment, providing insights into overcoming common obstacles and implementing effective data quality, security, scalability, and governance strategies.

Expert insights from industry professionals have provided valuable perspectives and best practices for successful data lake implementations, emphasizing the importance of scalable architecture, robust data integration, strong governance, and collaborative approaches.

For beginners embarking on their data lake journey, we have provided a step-by-step guide and recommended resources to help them effectively understand and leverage data lakes.

As we conclude, it is evident that data lakes have become an indispensable component of modern data architectures, enabling organizations to harness the power of big data and drive transformative outcomes. By embracing the potential of data lakes, organizations can unlock new opportunities, gain competitive advantages, and make informed decisions in an increasingly data-driven world.

However, the success of data lake initiatives relies on a combination of technical expertise, robust governance, and a data-driven culture. Organizations must continually adapt to evolving data requirements, technologies, and best practices to preserve the long-term value and effectiveness of their data lakes.

As you embark on your data lake journey, remember to start with a clear understanding of your business objectives, assess your data landscape, choose the right technologies, and implement strong data governance and security measures. Engage with the data lake community, learn from the experiences of others, and stay curious and open to new possibilities.

The future of data lakes is bright, and the opportunities are limitless. By harnessing the power of data lakes, organizations can transform their data into actionable insights, drive innovation, and shape a data-driven future. So, dive into the world of data lakes, explore their potential, and unlock the value of your data assets.
