What is Data Engineering: Building the Foundation for Data-driven Insights

Priya Patel

Apr 10, 2024

Introduction

Data engineering has emerged as a crucial discipline in data-driven decision-making. As organizations grapple with an ever-increasing volume, velocity, and variety of data, the role of data engineers has become indispensable. This blog post delves into the intricacies of data engineering, exploring its definition, the evolving role of data engineers, and the key topics covered in the following sections.

Data engineering involves designing, building, and maintaining the infrastructure and systems that enable data collection, storage, processing, and analysis. Data engineers are the architects who lay the foundation for data-driven insights. They are responsible for ensuring that data is efficiently and reliably transported from its source to the end-users, such as data scientists, analysts, and business stakeholders.

In today's data-driven landscape, the role of data engineers has evolved significantly. They are no longer mere data custodians; they are strategic partners who collaborate closely with cross-functional teams to unlock the full potential of data. As organizations recognize the competitive advantage that data can provide, the demand for skilled data engineers has skyrocketed.

This blog post will take you on a comprehensive journey through data engineering. We will start by exploring the historical context and emergence of this field, followed by a deep dive into the core responsibilities of a data engineer. We will also examine the essential tools and technologies for data engineering, including databases, big data technologies, and cloud platforms.

Furthermore, we will explore advanced concepts like feature engineering, big data engineering, and real-time data processing. We will discuss the education and career path for aspiring data engineers, highlighting the skills and knowledge required to succeed in this field. We will also cover best practices in data engineering, including data quality, governance, security, and collaboration.

As we look toward the future, we will explore the trends shaping the evolution of data engineering, such as the increasing role of artificial intelligence and machine learning. Real-world applications and case studies will showcase the impact of data engineering in various industries and provide practical insights into successful data engineering projects.

By the end of this blog post, you will have a comprehensive understanding of data engineering, its significance in today's data-driven world, and the integral role that data engineers play in leveraging data for business insights. Whether you are a data enthusiast, an aspiring data engineer, or a business leader seeking to harness the power of data, this blog post will provide you with valuable knowledge and inspiration.

The Data Engineering Landscape

Data engineering has its roots in the early days of data management and analysis. As organizations began to collect and store increasing amounts of data, the need for specialized professionals to manage and process this data became evident. Over time, the role of data engineers evolved from mere data administrators to strategic partners in driving data-driven decision-making.

To understand the data engineering landscape, it is essential to differentiate between the roles of data engineers, data scientists, and data analysts. While these roles often intersect and collaborate closely, they have distinct responsibilities and skill sets.

Data engineers are primarily responsible for designing, building, and maintaining the infrastructure and systems that enable data collection, storage, processing, and analysis. They focus on the technical aspects of data management, ensuring that data is efficiently and reliably transported from its source to the end-users.

On the other hand, data scientists extract insights and knowledge from the data. They use statistical and machine learning techniques to analyze complex datasets, uncover patterns, and build predictive models. Data scientists rely on the infrastructure and data pipelines built by data engineers to access and manipulate the data they need.

Data analysts, in contrast, are more focused on the business aspects of data. They use data to answer specific business questions, create reports and visualizations, and communicate insights to stakeholders. Data analysts often work closely with data engineers to ensure the necessary data is available and accessible.

The data engineering landscape spans various business sectors, from technology and e-commerce to healthcare and finance. In the tech industry, data engineers build and maintain the data infrastructure that powers personalized recommendations, targeted advertising, and user behavior analysis. E-commerce companies rely on data engineers to process and analyze vast amounts of transactional data, enabling them to optimize pricing, inventory management, and customer experience.

In the healthcare sector, data engineers are instrumental in building systems that handle sensitive patient data securely and efficiently. They enable the integration of electronic health records, medical imaging, and wearable device data, facilitating advanced analytics and personalized medicine.

Financial institutions leverage data engineering to process and analyze massive volumes of financial data, detect fraudulent activities, and comply with regulatory requirements. Data engineers build scalable systems that handle real-time data streams from trading platforms, enabling high-frequency trading and risk management.

As the data landscape continues to evolve, the role of data engineers becomes increasingly critical. They are at the forefront of designing and implementing the infrastructure that enables organizations to harness the power of data. In the following sections, we will dive deeper into the core responsibilities of data engineers, the tools and technologies they use, and the best practices they follow to ensure the success of data-driven initiatives.

Core Responsibilities of a Data Engineer

Data engineers are pivotal in enabling organizations to leverage data for strategic decision-making and competitive advantage. Their core responsibilities revolve around designing, building, and maintaining the infrastructure and systems that facilitate data flow from its source to the end-users.

One of a data engineer's primary responsibilities is to define the data architecture and design the data pipelines that transport data from various sources to the target systems. This involves understanding the organization's data requirements, identifying the relevant data sources, and determining the most efficient and scalable way to extract, transform, and load (ETL) the data.

Data engineers work closely with business stakeholders, data scientists, and analysts to gather requirements and ensure that the data infrastructure aligns with the organization's goals. They collaborate with cross-functional teams to define the data models, schemas, and structures that will be used to store and organize the data.

Building and maintaining data pipelines is a core aspect of a data engineer's job. Data pipelines are the backbone of data engineering, responsible for automating the movement and transformation of data from source systems to target systems. Data engineers design and implement ETL processes that extract data from various sources, such as databases, APIs, and streaming platforms. They transform the data to ensure consistency and quality and load it into the target systems, such as data warehouses or data lakes.
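To make the extract, transform, and load steps concrete, here is a minimal sketch using only the Python standard library. The sample data, the `orders` table, and the function names are hypothetical and chosen for illustration; a production pipeline would read from real sources and write to a warehouse or data lake rather than an in-memory SQLite database.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this might come from a file, API, or database.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,bob,5.00
3,Carol,not_a_number
"""

def extract(raw_text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transform: normalize names, cast amounts, and drop rows that fail to parse."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows with an invalid amount
        cleaned.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each stage as a separate function makes it easier to test the stages independently and to swap the source or target later.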

Data engineers ensure the data pipelines are robust, scalable, and fault-tolerant. They optimize the pipelines' performance, minimizing latency and maximizing throughput. They also implement monitoring and alerting mechanisms to detect and resolve any issues or failures in the data flow.

Data engineers also have crucial responsibilities in data modeling and warehousing. They design the logical and physical data models that define how data is structured and stored in the target systems. Data engineers create schemas that optimize query performance, ensure data integrity, and facilitate easy access for downstream consumers.
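As one illustration of a physical data model, the sketch below defines a small dimension table and fact table in SQLite. The table and column names are hypothetical. It assumes a simple dimensional layout, which is only one of several modeling approaches, and shows how a foreign key protects integrity while an index supports a common query path.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE dim_customer (
    customer_id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    region TEXT NOT NULL
);
CREATE TABLE fact_sales (
    sale_id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES dim_customer(customer_id),
    amount REAL NOT NULL CHECK (amount >= 0)
);
CREATE INDEX idx_sales_customer ON fact_sales(customer_id);
""")

conn.executemany(
    "INSERT INTO dim_customer VALUES (?, ?, ?)",
    [(1, "Alice", "EU"), (2, "Bob", "US")],
)
conn.executemany(
    "INSERT INTO fact_sales VALUES (?, ?, ?)",
    [(10, 1, 20.0), (11, 1, 5.0), (12, 2, 7.5)],
)

# A sale that points at a non-existent customer is rejected by the foreign key.
try:
    conn.execute("INSERT INTO fact_sales VALUES (13, 99, 1.0)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

# Downstream consumers can aggregate facts by dimension attributes.
sales_by_region = conn.execute("""
    SELECT d.region, SUM(f.amount)
    FROM fact_sales f JOIN dim_customer d ON d.customer_id = f.customer_id
    GROUP BY d.region ORDER BY d.region
""").fetchall()
print(orphan_rejected, sales_by_region)
```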

Data warehousing involves designing and implementing systems that store and manage large volumes of structured data. Data engineers are responsible for selecting the appropriate database technologies, such as relational databases or columnar storage, based on the organization's specific requirements. They design the data warehouse architecture, including the staging area, the data vault, and the data marts, to support efficient data retrieval and analysis.

Data engineers also play a significant role in ensuring data quality and governance. They implement data validation and cleansing mechanisms to identify and correct data inconsistencies, duplicates, and errors. They define data quality metrics and establish processes to monitor and measure data quality across the organization.
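Two simple data quality metrics, completeness and duplicate rate, can be sketched in a few lines of plain Python. The record layout and field names below are hypothetical, and real pipelines would usually compute such metrics inside the processing framework they already use.

```python
def completeness(records, field):
    """Fraction of records where `field` is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)

def deduplicate(records, key):
    """Keep the first record seen for each value of `key`."""
    seen = set()
    unique = []
    for r in records:
        if r[key] not in seen:
            seen.add(r[key])
            unique.append(r)
    return unique

records = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": ""},
    {"id": 3, "email": None},
]

unique_records = deduplicate(records, "id")
duplicate_rate = 1 - len(unique_records) / len(records)
email_completeness = completeness(unique_records, "email")
print(duplicate_rate, email_completeness)
```

Tracking these numbers over time, rather than checking them once, is what turns them into a monitoring signal.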

In addition to technical responsibilities, data engineers also contribute to the organization's overall data strategy. They provide input on data architecture decisions, help define data governance policies, and collaborate with cross-functional teams to ensure data is used effectively and ethically.

As data volume, variety, and velocity continue to grow, the role of data engineers becomes increasingly complex and challenging. They must stay up-to-date with the latest technologies and best practices in data engineering to ensure the data infrastructure remains scalable, reliable, and secure.

In the next section, we will explore the tools and technologies that data engineers use to fulfill their responsibilities and build robust data engineering solutions.

The Tools of the Trade

Data engineers use various tools and technologies to design, build, and maintain the data infrastructure that powers data-driven organizations. These tools span multiple categories, including data integration, modeling, data warehousing, and data orchestration.

One of the most important categories in a data engineer's toolkit is Extract, Transform, Load (ETL) tooling. ETL tools enable data engineers to extract data from various sources, transform it to ensure consistency and quality, and load it into target systems such as data warehouses or data lakes. Popular ETL tools include Apache NiFi, Talend, and Informatica.

Data modeling and data warehousing tools are equally essential. Data modeling tools like ER/Studio and erwin Data Modeler allow data engineers to design logical and physical data models that define how data is structured and stored in the target systems. Data warehousing tools, such as Amazon Redshift, Google BigQuery, and Snowflake, provide scalable and high-performance solutions for storing and managing large volumes of structured data.

In recent years, modern data stacks have introduced new tools and frameworks that have changed how data engineers work. One such tool is dbt (Data Build Tool), which has gained significant popularity among data engineers. dbt is an open-source command-line tool that enables data transformation and modeling using SQL. It allows data engineers to define and manage data transformations as code, making the process more efficient, version-controlled, and collaborative.

Another crucial tool in the modern data stack is Apache Airflow, a powerful data orchestration platform. Airflow enables data engineers to define, schedule, and monitor complex data pipelines as code. It provides a web-based user interface for managing and monitoring workflows, making it easy to visualize dependencies and track the progress of data pipelines.

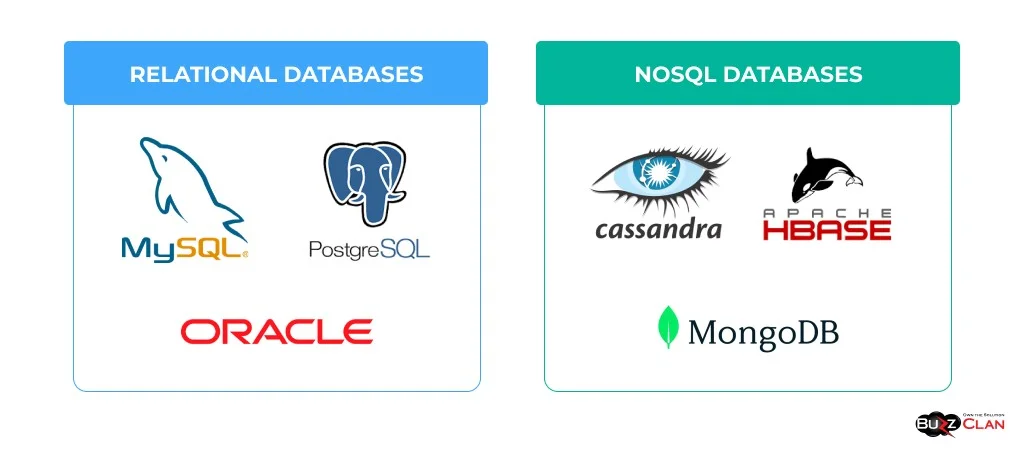

Data engineers also utilize various database technologies, depending on the organization's requirements. Relational databases, such as MySQL and PostgreSQL, are commonly used for structured data storage and retrieval. NoSQL databases, such as MongoDB and Cassandra, handle unstructured or semi-structured data. Big data technologies, such as Apache Hadoop and Apache Spark, are employed for processing and analyzing large-scale datasets.

Cloud platforms have become increasingly popular among data engineers due to their scalability, flexibility, and cost-effectiveness. Cloud providers such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer a wide range of data engineering services, including data storage, processing, and analytics. Data engineers leverage these services to build and deploy data pipelines, data warehouses, and big data solutions in the cloud.

Another important aspect of a data engineer's work is integrating data engineering tools with enterprise applications. Data engineers must often integrate data from various sources, such as customer relationship management (CRM) systems, enterprise resource planning (ERP) systems, and marketing automation platforms. Tools like Apache Kafka and Apache NiFi facilitate real-time data integration and streaming, enabling data engineers to build real-time data pipelines.

As the data engineering landscape evolves, new tools and technologies emerge to address the challenges of big data, real-time processing, and machine learning. Data engineers must stay up-to-date with these advancements and continuously evaluate and adopt the tools that best fit their organization's needs.

In the next section, we will explore some of the key data engineering technologies, including databases, big data technologies, and cloud platforms, to understand their significance in the data engineering workflow.

Data Engineering Technologies Explained

Data engineering encompasses many technologies that enable efficient and reliable data processing, storage, and analysis. This section will look closely at key technologies that form the backbone of data engineering, including databases, big data technologies, cloud platforms, and data orchestration tools.

Databases

Databases are the foundation of any data-driven organization. They provide a structured way to store, organize, and retrieve data. Data engineers work with various databases, depending on the organization's requirements.

Relational databases, such as MySQL, PostgreSQL, and Oracle, are widely used for storing structured data. They organize data into tables with predefined schemas and use SQL (Structured Query Language) to query and manipulate data. Relational databases provide strong consistency and ACID (Atomicity, Consistency, Isolation, Durability) properties and support complex joins and transactions.
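Atomicity, the "A" in ACID, is easiest to see with a transfer between two accounts. The sketch below uses SQLite from the Python standard library; the `accounts` table and the `transfer` helper are hypothetical. Either both updates are committed or neither is.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, balance REAL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100.0), ("bob", 50.0)])
conn.commit()

def transfer(conn, src, dst, amount):
    """Move `amount` between accounts; both updates commit together or not at all."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False

ok = transfer(conn, "alice", "bob", 30.0)
failed = transfer(conn, "alice", "bob", 500.0)  # would overdraw, so the CHECK fails
balances = dict(conn.execute("SELECT name, balance FROM accounts"))
print(ok, failed, balances)
```

In the failed transfer, the credit to `bob` has already been applied when the debit violates the constraint, and the rollback undoes it.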

NoSQL databases, such as MongoDB, Cassandra, and Apache HBase, have gained popularity due to their ability to handle unstructured or semi-structured data at scale. NoSQL databases typically offer horizontal scalability and flexible schemas, and many trade strong consistency for eventual consistency. They are often used in scenarios where data volume and variety are high, such as web-scale applications and real-time data processing.

Big Data Technologies

Big data technologies have revolutionized the way organizations process and analyze large-scale datasets. Apache Hadoop and Apache Spark are two of the most widely used big data technologies in the data engineering landscape.

Apache Hadoop is an open-source framework that enables distributed storage and processing of large datasets across clusters of commodity hardware. Its core components are the Hadoop Distributed File System (HDFS) for storage, YARN for cluster resource management, and MapReduce for processing. Hadoop provides fault tolerance, scalability, and cost-effectiveness for handling big data workloads.
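The MapReduce programming model can be illustrated on a single machine with a word count. This is a sketch of the model only, not of Hadoop's API: the function names are hypothetical, and a real cluster would run the map and reduce phases in parallel across many nodes.

```python
from collections import defaultdict

def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)

documents = ["big data big insights", "data pipelines move data"]
mapped = [pair for doc in documents for pair in map_phase(doc)]
word_counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(word_counts)
```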

Apache Spark is a fast and general-purpose cluster computing system. It provides an in-memory computing framework that efficiently processes large datasets. Spark supports various data processing tasks, including batch processing, real-time streaming, machine learning, and graph processing. Spark's key advantage is its ability to perform in-memory computations, which can significantly speed up data processing compared to disk-based processing such as Hadoop MapReduce.

Cloud Platforms

Cloud platforms have transformed data engineering by providing scalable, flexible, and cost-effective data storage, processing, and analytics solutions. Major cloud providers, such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure, offer various data engineering services.

AWS provides services like Amazon S3 for object storage, Amazon Redshift for data warehousing, Amazon EMR for big data processing, and Amazon Kinesis for real-time data streaming. GCP offers similar services, including Google Cloud Storage, BigQuery for data warehousing, and Cloud Dataflow for data processing. Azure provides Azure Blob Storage, Azure Synapse Analytics for data warehousing, and Azure Databricks for big data processing.

Cloud platforms enable data engineers to quickly provision and scale resources based on demand, reducing the need for upfront infrastructure investments. They also provide managed services that abstract away the complexity of underlying infrastructure management, allowing data engineers to focus on building and maintaining data pipelines.

Data Build Tool (dbt)

dbt (Data Build Tool) has emerged as a game-changer in the data engineering workflow. It is an open-source command-line tool that enables data transformation and modeling using SQL. dbt allows data engineers to define and manage data transformations as code, making the process more efficient, version-controlled, and collaborative.

With dbt, data engineers can define data models using SQL and leverage the power of version control systems like Git to manage and track changes. dbt provides a testing framework that allows data engineers to write and run tests against their data models, ensuring data quality and integrity.

dbt also promotes the concept of modularity and reusability in data modeling. Data engineers can define reusable macros and packages encapsulating common data transformation logic, making it easier to maintain and share across projects.

Apache Airflow

Apache Airflow is a popular data orchestration platform enabling engineers to define, schedule, and monitor complex data pipelines as code. It provides a web-based user interface for managing and monitoring workflows, making it easy to visualize dependencies and track the progress of data pipelines.

Airflow uses directed acyclic graphs (DAGs) to represent data pipelines. DAGs are collections of tasks that are defined and orchestrated using Python code. Each task in a DAG represents a specific data processing step, such as extracting data from a source, transforming it, or loading it into a target system.
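The idea of running tasks in dependency order can be sketched without Airflow itself. The example below is not Airflow's API; it uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later), and the task names and functions are hypothetical.

```python
from graphlib import TopologicalSorter

results = {}

def extract():
    results["raw"] = [3, 1, 2]

def transform():
    results["clean"] = sorted(results["raw"])

def load():
    results["loaded"] = len(results["clean"])

# Each task maps to the set of tasks that must finish before it runs.
dag = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}
tasks = {"extract": extract, "transform": transform, "load": load}

# static_order() yields tasks so that every dependency comes first.
run_order = list(TopologicalSorter(dag).static_order())
for name in run_order:
    tasks[name]()

print(run_order, results)
```

Because the graph must be acyclic, `TopologicalSorter` raises `graphlib.CycleError` if tasks depend on each other in a loop, which mirrors why orchestration tools insist on DAGs.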

Airflow provides a rich set of operators and sensors that allow data engineers to interact with various data sources, databases, and cloud services. It also supports task dependencies, scheduling, and error handling, making it a comprehensive solution for data pipeline orchestration.

Understanding these key data engineering technologies is crucial for data engineers to design and build robust and scalable data infrastructure. In the next section, we will explore advanced data engineering concepts like feature engineering, big data engineering, and real-time data processing to further deepen our understanding of the field.

Advanced Data Engineering Concepts

As data engineering continues to evolve, several advanced concepts are necessary for data engineers to tackle complex data challenges. This section will explore three key areas: feature engineering, big data engineering, and real-time data processing.

Feature Engineering

Feature engineering is the process of creating new features or transforming existing ones to improve the performance of machine learning models. It is crucial in data science and significantly impacts data engineering workflows.

Data engineers often collaborate with data scientists to understand the feature requirements for machine learning models. They are responsible for designing and building the data pipelines that extract, transform, and prepare the necessary features for model training and inference.

Feature engineering involves various techniques, such as feature selection, extraction, scaling, and encoding. Data engineers must understand these techniques to effectively preprocess and transform the data.

Some common feature engineering tasks include handling missing values, encoding categorical variables, scaling numerical features, and creating derived features based on domain knowledge. Data engineers may also need to apply dimensionality reduction techniques, such as principal component analysis (PCA) or t-SNE, to reduce the number of features while preserving the most relevant information.
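Three of the tasks mentioned above (handling missing values, scaling numerical features, and encoding categorical variables) can be sketched in plain Python. The helper names and sample columns are hypothetical; in practice these steps are usually done with a data processing or machine learning library.

```python
def impute_mean(values):
    """Replace None with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Encode each category as a list of 0/1 indicators, one per category."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]

ages = [20, None, 40]
plans = ["free", "pro", "free"]

ages_imputed = impute_mean(ages)
ages_scaled = min_max_scale(ages_imputed)
plans_encoded = one_hot(plans)
print(ages_imputed, ages_scaled, plans_encoded)
```

One design point worth noting: statistics such as the mean, minimum, and maximum should be computed on training data and reused at inference time, so that both paths apply the same transformation.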

Efficient feature engineering requires data engineers to design scalable and reusable data pipelines that can handle large volumes of data. They need to ensure that the feature extraction and transformation processes are optimized for performance and can be easily integrated into the overall data workflow.

Big Data Engineering

Big data engineering involves designing and building solutions to handle the challenges of processing and analyzing massive volumes of data. As organizations collect and generate data at an unprecedented scale, data engineers need to develop strategies and architectures to efficiently store, process, and derive insights from big data.

One key aspect of big data engineering is the ability to handle the three Vs of big data: volume, velocity, and variety. Data engineers need to design systems that can scale horizontally to accommodate the growing volume of data. They also need to leverage distributed computing frameworks, such as Apache Hadoop and Apache Spark, to process data in parallel across large clusters of machines.

Big data engineering also requires dealing with data that arrives at high velocity, such as real-time streaming data from sensors, social media feeds, or financial transactions. Data engineers must build pipelines that can ingest, process, and analyze data in real time, often using technologies like Apache Kafka, Apache Flink, or Apache Storm.

The variety of data sources and formats adds another complexity to big data engineering. Data engineers must handle structured, semi-structured, and unstructured data from various sources, such as databases, log files, JSON, XML, and text. They must design data models and schemas that accommodate diverse data types and enable efficient querying and analysis.

To tackle the challenges of big data, data engineers often employ techniques such as data partitioning, data compression, and data indexing. They may also leverage cloud-based big data services, such as Amazon EMR, Google Cloud Dataproc, or Azure HDInsight, to process and analyze large datasets scalably and cost-effectively.
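Data partitioning can be illustrated with a small hash-partitioning sketch. The function names and event records are hypothetical. A stable hash (CRC32 here) is used instead of Python's built-in `hash`, whose output for strings changes between interpreter runs.

```python
import zlib

def partition_for(key, num_partitions):
    """Map a key to a partition index using a stable hash (CRC32)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions

def partition_records(records, key_field, num_partitions):
    """Distribute records into partitions so equal keys always land together."""
    partitions = [[] for _ in range(num_partitions)]
    for record in records:
        index = partition_for(record[key_field], num_partitions)
        partitions[index].append(record)
    return partitions

events = [
    {"user": "u1", "event": "click"},
    {"user": "u2", "event": "view"},
    {"user": "u1", "event": "purchase"},
    {"user": "u3", "event": "view"},
]
partitions = partition_records(events, "user", num_partitions=4)
for index, part in enumerate(partitions):
    print(index, part)
```

Because all records for one key share a partition, per-key work such as aggregation can run on each partition independently.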

Real-time Data Processing and Streaming

Real-time data processing and streaming have become increasingly important in modern data engineering. Many applications, such as fraud detection, personalized recommendations, and IoT monitoring, require the ability to process and analyze data as it arrives without the latency of batch processing.

Data engineers must design and implement real-time data ingestion, processing, and analysis architectures. This involves using technologies like Apache Kafka or Amazon Kinesis for data streaming, Apache Flink or Apache Spark Streaming for real-time data processing, and a low-latency store such as Apache Cassandra for serving the results.

Real-time data processing pipelines often follow a lambda architecture, which combines batch and real-time processing to provide a comprehensive view of the data. The batch layer processes historical data and provides a baseline, while the real-time (speed) layer processes incoming data streams and updates the results in near real time.
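The interplay between the batch layer and the real-time layer can be sketched with simple counters. The class and function names are hypothetical, and the merge step shown here (often called the serving layer) is reduced to adding the two views together.

```python
from collections import Counter

def batch_view(historical_events):
    """Batch layer: recompute page-view counts from the full historical dataset."""
    return Counter(e["page"] for e in historical_events)

class SpeedLayer:
    """Real-time layer: incrementally count events that arrived after the last batch run."""

    def __init__(self):
        self.counts = Counter()

    def ingest(self, event):
        self.counts[event["page"]] += 1

def serve(batch, speed):
    """Merge the batch view and the real-time view to answer a query."""
    return batch + speed.counts

historical = [{"page": "home"}, {"page": "home"}, {"page": "pricing"}]
batch = batch_view(historical)

speed = SpeedLayer()
for event in [{"page": "home"}, {"page": "docs"}]:
    speed.ingest(event)

merged = serve(batch, speed)
print(dict(merged))
```

When the next batch run absorbs the recent events, the real-time counts for that period would be discarded so that nothing is counted twice.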

Data engineers must ensure the real-time processing pipelines are fault-tolerant, scalable, and can handle high throughput. They must also implement techniques like data partitioning, replication, and checkpointing to ensure data consistency and recovery in case of failures.
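Checkpointing can be sketched as periodically saving the processing position and state so that a restarted job resumes where it left off. The example below is a simplified, single-process illustration with hypothetical names. Real stream processors checkpoint to durable, replicated storage and write the checkpoint atomically.

```python
import json
import os
import tempfile

def process_stream(events, checkpoint_path, fail_at=None):
    """Sum event values, persisting (offset, total) after each processed event."""
    state = {"offset": 0, "total": 0}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)  # resume from the last saved position
    for offset in range(state["offset"], len(events)):
        if fail_at is not None and offset == fail_at:
            raise RuntimeError("simulated crash")
        state = {"offset": offset + 1, "total": state["total"] + events[offset]}
        with open(checkpoint_path, "w") as f:
            json.dump(state, f)
    return state["total"]

events = [5, 10, 15, 20]
checkpoint_path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")

try:
    process_stream(events, checkpoint_path, fail_at=2)
except RuntimeError:
    pass  # the first two events are already checkpointed

total = process_stream(events, checkpoint_path)  # resumes at offset 2
print(total)
```

Without the checkpoint, the second run would start from the beginning and either redo work or, in a system with side effects, count the first two events twice.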

Real-time data processing also requires careful consideration of data quality and data validation. Data engineers must implement mechanisms to detect and handle anomalies, missing values, and data drift in real time. They may use techniques like data sampling, data filtering, and data enrichment to improve the quality and relevance of the processed data.

As data engineering evolves, staying up-to-date with advanced concepts like feature engineering, big data engineering, and real-time data processing is crucial for data engineers to build robust and scalable data solutions. In the next section, we will explore the education and career path for aspiring data engineers, including the skills and knowledge required to succeed in this field.

Education and Career Path

Data engineering requires a combination of technical skills, domain knowledge, and a passion for data. This section explores the educational background, skills, and career trajectory of data engineers.

Educational Background

Data engineers typically have a strong foundation in computer science, software engineering, or a related field. A bachelor's degree in computer science, information technology, or a similar discipline is often the minimum requirement for entry-level data engineering positions.

However, many data engineers also pursue advanced degrees, such as a master's degree in data science, big data, or computer science, to deepen their knowledge and specialize in specific areas of data engineering. These programs provide in-depth training in data structures, algorithms, database systems, distributed computing, and machine learning.

In addition to formal education, data engineers continuously learn and upskill through online courses, workshops, and certifications. Platforms like Coursera, Udacity, and edX offer a wide range of data engineering courses and Nanodegree programs that cover data warehousing, data pipelines, big data technologies, and cloud computing.

Skills Required

To succeed as a data engineer, a diverse set of technical and soft skills is essential. Some of the key skills required for data engineering include:

- Programming: Proficiency in programming languages such as Python, Java, Scala, or SQL is crucial for data engineers. They should be comfortable writing efficient and scalable code to process and manipulate data.

- Database Management: Data engineers should have a strong understanding of database concepts, including data modeling, database design, and query optimization. Familiarity with relational databases (e.g., MySQL, PostgreSQL) and NoSQL databases (e.g., MongoDB, Cassandra) is essential.

- Big Data Technologies: Knowledge of big data frameworks and tools, such as Apache Hadoop, Apache Spark, Apache Kafka, and Apache Airflow, is essential for handling large-scale data processing and pipeline orchestration.

- Cloud Computing: Familiarity with cloud platforms like Amazon Web Services (AWS), Google Cloud Platform (GCP), or Microsoft Azure is increasingly important as more organizations adopt cloud-based data solutions.

- Data Warehousing and ETL: Understanding data warehousing concepts, dimensional modeling, and extract-transform-load (ETL) processes is necessary for designing and building efficient data storage and integration systems.

- Data Analysis and Visualization: While data engineers primarily focus on data infrastructure, having basic data analysis and visualization skills can help them collaborate effectively with data scientists and communicate insights to stakeholders.

- Problem-Solving and Critical Thinking: Data engineers often face complex data challenges that require strong problem-solving abilities and critical thinking skills to design and implement effective solutions.

- Communication and Collaboration: Data engineers work closely with cross-functional teams, including data scientists, analysts, and business stakeholders. Effective communication and collaboration skills are essential for understanding requirements, explaining technical concepts, and driving successful data projects.

Career Trajectory and Job Market

The career trajectory for data engineers typically starts with entry-level positions, such as data engineer, ETL developer, or big data developer. As they gain experience and expertise, data engineers can progress to more senior roles, such as senior data engineer, data architect, or data platform engineer.

With the increasing demand for data-driven insights across industries, the job market for data engineers is strong. According to industry reports and surveys, data engineering is consistently ranked among the technology sector's most in-demand skills and highest-paying jobs.

Data engineers can find employment opportunities in various industries, including technology, e-commerce, finance, healthcare, and consulting. Companies of all sizes, from startups to large enterprises, invest in data engineering talent to build and maintain their data infrastructure.

Data engineers command competitive compensation packages. The average base salary for a data engineer in the United States is around $100,000 to $130,000 annually, with experienced professionals earning even higher wages. Location, industry, and company size can influence the salary range.

As the field of data engineering continues to evolve, there is a strong growth potential for professionals who stay updated with the latest technologies and best practices. Continuous learning, staying curious, and adapting to new challenges are key to a successful and rewarding career in data engineering.

In the next section, we will discuss best practices in data engineering, including ensuring data quality, governance, security, and collaboration to help engineers build robust and reliable data solutions.

Best Practices in Data Engineering

Data engineers must follow best practices to build robust, scalable, and maintainable data solutions. These practices ensure data quality, governance, security, and effective collaboration. This section will explore some of the key best practices in data engineering.

Ensuring Data Quality and Governance

Data quality and governance are critical aspects of data engineering. Poor data quality can lead to inaccurate insights, wrong business decisions, and loss of trust in the data. Data engineers play a crucial role in ensuring the quality and integrity of data throughout the data lifecycle.

Some best practices for ensuring data quality include:

| Practice | Description |
| --- | --- |
| Data Validation | Implement validation checks at various data pipeline stages to identify and handle data anomalies, missing values, and inconsistencies. This can include data type checks, range checks, and pattern matching. |
| Data Cleansing | Apply data cleansing techniques to remove or correct invalid, incomplete, or duplicated data. This may involve data standardization, data normalization, and data deduplication. |
| Data Profiling | Conduct data profiling to understand the data's characteristics, patterns, and quality. This helps identify data quality issues, such as skewed distributions, outliers, and inconsistencies. |
| Data Lineage | Maintain data lineage to track the origin, transformations, and dependencies of data as it flows through the pipeline. This helps in understanding the impact of data changes and facilitates data governance and compliance. |
| Data Monitoring | Implement data monitoring and alerting mechanisms to detect and address data quality issues proactively. This includes setting up data quality metrics, thresholds, and automated notifications. |
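The validation checks listed in the table (data type checks, range checks, and pattern matching) can be sketched as a small function that reports problems per record. The field names, the 0 to 120 age range, and the deliberately loose email pattern are assumptions made for illustration.

```python
import re

# A deliberately loose pattern: something@something.something, no whitespace.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate(record):
    """Return a list of human-readable problems found in one record."""
    problems = []
    if not isinstance(record.get("age"), int):
        problems.append("age: expected an integer")  # type check
    elif not 0 <= record["age"] <= 120:
        problems.append("age: out of range 0-120")  # range check
    email = record.get("email")
    if not isinstance(email, str) or not EMAIL_PATTERN.match(email):
        problems.append("email: does not match expected pattern")  # pattern check
    return problems

records = [
    {"age": 34, "email": "ana@example.com"},
    {"age": 250, "email": "ana@example.com"},
    {"age": "34", "email": "not-an-email"},
]
report = [validate(r) for r in records]
for record, problems in zip(records, report):
    print(record, problems)
```

Returning a list of problems instead of raising on the first failure lets the pipeline decide whether to reject, quarantine, or repair each record.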

Data governance is another critical aspect of data engineering. It involves defining policies, standards, and processes to ensure the proper management, usage, and protection of data assets. Data engineers should collaborate with data stewards, data owners, and compliance teams to establish and enforce data governance practices.

Some best practices for data governance include:

| Practice | Description |
|---|---|
| Data Catalog | Maintain a comprehensive data catalog that documents data assets, their definitions, ownership, and access policies. This promotes data discovery, understanding, and reuse. |
| Data Access Control | Implement appropriate access controls and permissions so that only authorized users can access and modify data. This includes role-based access control (RBAC) and fine-grained access control mechanisms. |
| Data Retention and Archival | Define data retention and archival policies to ensure compliance with legal and regulatory requirements. This involves determining the data lifecycle, including how long data should be retained and when it should be archived or deleted. |
| Data Auditing | Implement data auditing and logging mechanisms to track data access, modifications, and usage. This helps detect and investigate data breaches, unauthorized access, and compliance violations. |
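
As a rough illustration of how access control and auditing fit together, the sketch below checks a role-based permission and records every attempt. The roles, the `sales` dataset, and the in-memory `audit_log` list are all hypothetical; production systems typically rely on the access-control and audit features of the database or cloud platform instead.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; role and dataset names are illustrative.
ROLE_PERMISSIONS = {
    "analyst": {("sales", "read")},
    "engineer": {("sales", "read"), ("sales", "write")},
}

audit_log = []  # In practice this would go to durable, append-only storage.


def is_allowed(role, dataset, action):
    """Role-based check: a role may act only if the pair is explicitly granted."""
    return (dataset, action) in ROLE_PERMISSIONS.get(role, set())


def access(user, role, dataset, action):
    """Check permission and record the attempt, whether it succeeds or not."""
    allowed = is_allowed(role, dataset, action)
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "dataset": dataset,
        "action": action,
        "allowed": allowed,
    })
    return allowed
```

Logging denied attempts as well as successful ones is a deliberate choice here: repeated denials are often the first sign of a misconfigured job or an attempted intrusion.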

Data Security and Privacy Considerations

Data security and privacy are top priorities for data engineers. With the increasing volume and sensitivity of data being collected and processed, it is crucial to implement robust security measures to protect data from unauthorized access, breaches, and misuse.

Some best practices for data security and privacy include:

| Practice | Description |
|---|---|
| Encryption | Encrypt sensitive data at rest and in transit to protect it from unauthorized access. This includes using secure encryption algorithms and key management practices. |
| Data Masking and Anonymization | Apply data masking and anonymization techniques to protect personally identifiable information (PII) and other sensitive data. This involves replacing sensitive values with fictitious but realistic ones (masking) or irreversibly removing PII (anonymization). |
| Secure Data Storage | Implement secure data storage practices, such as using encrypted file systems, secure databases, and access controls to prevent unauthorized access to data. |
| Data Breach Response Plan | Develop and regularly test a data breach response plan to ensure a prompt and effective response in case of a security incident. This includes procedures for incident detection, containment, investigation, and notification. |
| Compliance with Regulations | Ensure compliance with relevant data privacy and security regulations, such as the General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA), and industry-specific standards. |
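
The masking idea can be sketched with Python's standard library. The helper names below are hypothetical, and the second function uses a keyed hash (HMAC-SHA256), which is pseudonymization rather than true anonymization: anyone holding the key can recompute the token for a known value, so it offers a weaker guarantee than irreversibly removing PII.

```python
import hashlib
import hmac


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"
    return local[0] + "***@" + domain


def pseudonymize(value, secret_key):
    """Replace a value with a keyed hash (HMAC-SHA256).

    The same input and key always give the same token, so joins still work,
    but the token cannot be recomputed without the key.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
```

For example, `mask_email("alice@example.com")` returns `a***@example.com`, which is still readable enough for support staff while hiding most of the address.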

The Importance of Collaboration and Communication

Data engineering is not a siloed function. It requires close collaboration and effective communication with cross-functional teams, including data scientists, analysts, business stakeholders, and IT teams. Cooperation and communication are essential for understanding requirements, aligning goals, and delivering successful data solutions.

Some best practices for collaboration and communication include:

| Practice | Description |
|---|---|
| Agile Methodologies | Adopt agile methodologies, such as Scrum or Kanban, to facilitate iterative development, continuous feedback, and collaboration among team members. This helps deliver value incrementally and adapt to changing requirements. |
| Documentation and Knowledge Sharing | Maintain clear and comprehensive documentation of data pipelines, architectures, and processes. This includes data dictionaries, data flow diagrams, and code documentation. Encouraging knowledge sharing through wikis, forums, and brown bag sessions helps foster a culture of learning and collaboration. |
| Cross-functional Meetings | Conduct regular cross-functional meetings to align goals, discuss progress, and address challenges. This includes sprint planning meetings, daily stand-ups, and retrospectives. |
| Effective Communication | Communicate technical concepts and data insights clearly and accessibly to non-technical stakeholders. Visualizations, dashboards, and storytelling techniques can convey complex data concepts effectively. |
| Continuous Feedback and Improvement | Seek stakeholder feedback and incorporate it into the data engineering processes. Encourage a culture of experimentation, learning from failures, and continuously improving the data solutions. |

By following these best practices in data engineering, organizations can build robust, secure, and collaborative data environments that drive business value and enable data-driven decision-making.

In the next section, we will explore the future of data engineering, including current trends, the role of AI and machine learning, and the importance of continuous learning and adaptability for data engineers.

The Future of Data Engineering

Data engineering is constantly evolving, driven by technological advancements, changing business requirements, and the increasing importance of data-driven decision-making. In this section, we will explore the current trends shaping the future of data engineering and discuss how data engineers can prepare for the challenges and opportunities ahead.

Current Trends Shaping the Future of Data Engineering

- Cloud Adoption: Cloud adoption is accelerating across the data engineering landscape. Cloud platforms offer scalability, flexibility, and cost-efficiency for storing, processing, and analyzing large volumes of data. Data engineers increasingly leverage cloud services, such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure, to build and deploy data solutions.

- Serverless Computing: Serverless computing is gaining traction in data engineering. It allows data engineers to focus on writing code and building data pipelines without worrying about infrastructure management. Serverless platforms like AWS Lambda, Google Cloud Functions, and Azure Functions enable event-driven and scalable data processing.

- Data Mesh and Data Fabric: Data mesh and data fabric concepts are emerging as new architectural patterns for managing and accessing data across distributed systems. Data mesh emphasizes decentralized data ownership and self-serve data infrastructure, while data fabric provides a unified and integrated view of data across multiple sources and systems.

- Real-Time and Streaming Data: The demand for real-time data processing and analysis is growing as businesses require instant insights and faster decision-making. Data engineers leverage technologies like Apache Kafka, Apache Flink, and Apache Spark Streaming to build real-time data pipelines and enable stream processing.

- Data Observability and Monitoring: Data observability and monitoring are becoming critical as data pipelines become more complex and distributed. Data engineers are adopting tools and practices to monitor data quality, data lineage, and pipeline performance in real-time. This helps detect and troubleshoot issues quickly and ensure the reliability of data systems.
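
To give a feel for what data observability checks look like in practice, here is a small sketch of two common ones: freshness (has the table been loaded recently?) and volume (is today's row count close to the recent average?). The 24-hour limit and the 50% tolerance are assumptions picked for illustration, and dedicated observability tools offer far more than this.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_loaded_at, now, max_age):
    """Pass if the most recent load is no older than max_age."""
    return (now - last_loaded_at) <= max_age


def check_volume(row_count, recent_counts, tolerance=0.5):
    """Pass if the row count is within +/- tolerance of the recent average."""
    if not recent_counts:
        return True  # No history yet, so nothing to compare against.
    baseline = sum(recent_counts) / len(recent_counts)
    return abs(row_count - baseline) <= tolerance * baseline


def run_checks(last_loaded_at, row_count, recent_counts, now=None):
    """Return the names of failed checks; an empty list means healthy."""
    now = now or datetime.now(timezone.utc)
    failures = []
    if not check_freshness(last_loaded_at, now, max_age=timedelta(hours=24)):
        failures.append("freshness")
    if not check_volume(row_count, recent_counts):
        failures.append("volume")
    return failures
```

A scheduler could call `run_checks` after each load and send a notification whenever the returned list is not empty.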

The Role of AI and Machine Learning in Data Engineering

Artificial intelligence (AI) and machine learning (ML) are transforming the data engineering landscape. Data engineers enable AI and ML by providing the necessary data infrastructure and pipelines. Some key areas where AI and ML are impacting data engineering include:

- Automated Data Discovery and Profiling: AI and ML techniques can be applied to automate data discovery and profiling tasks. This includes identifying data patterns, detecting anomalies, and suggesting data quality improvements. Tools like AWS Glue, Google Cloud Data Catalog, and Azure Data Catalog leverage AI and ML to simplify data discovery and governance.

- Data Pipeline Optimization: AI and ML can be used to optimize data pipeline performance and resource utilization. By analyzing historical data and usage patterns, intelligent systems can recommend optimal data partitioning strategies, data compression techniques, and resource allocation.

- Predictive Maintenance: AI and ML algorithms can be applied to predict and prevent data pipeline failures. By analyzing log data, performance metrics, and error patterns, predictive maintenance models can identify potential issues before they occur and trigger proactive measures to ensure pipeline stability.

- Automated Data Integration: AI and ML techniques can enable automated data integration by intelligently mapping and matching data schemas across different sources. This can significantly reduce the manual effort required in data integration tasks and improve data consistency.
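
The schema-matching idea behind automated data integration can be illustrated without any machine learning at all. The sketch below uses plain string similarity from Python's `difflib` as a stand-in for a learned model; the function names, the column names, and the 0.7 threshold are assumptions, and real tools combine many more signals (data types, value distributions, usage history).

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lowercase and drop separators so 'Customer_ID' and 'customerId' compare well."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def suggest_mapping(source_columns, target_columns, threshold=0.7):
    """For each source column, suggest the most similar target column.

    Returns {source: (target, score)}; sources with no match at or above
    the threshold map to None so a person can review them.
    """
    mapping = {}
    for source in source_columns:
        best_target, best_score = None, 0.0
        for target in target_columns:
            score = SequenceMatcher(None, normalize(source), normalize(target)).ratio()
            if score > best_score:
                best_target, best_score = target, score
        if best_score >= threshold:
            mapping[source] = (best_target, round(best_score, 2))
        else:
            mapping[source] = None
    return mapping
```

Leaving low-confidence columns unmapped keeps a human in the loop, which matters because a wrong automatic mapping can silently corrupt downstream data.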

Preparing for the Future – Continuous Learning and Adaptability

To thrive in the rapidly evolving field of data engineering, professionals must embrace continuous learning and adaptability. Staying up-to-date with the latest technologies, tools, and best practices is essential to remain competitive and deliver value to organizations.

Some strategies for continuous learning and adaptability include:

- Learning New Technologies: Data engineers should proactively learn and experiment with new technologies and frameworks. This includes exploring cloud platforms, big data tools, data streaming technologies, and AI/ML frameworks. Participating in online courses, workshops, and hackathons can help them acquire new skills and stay current with industry trends.

- Collaborative Learning: Engaging in collaborative learning opportunities, such as joining data engineering communities, attending conferences, and participating in open-source projects, can foster knowledge sharing and exposure to diverse perspectives. Collaborating with peers, mentors, and experts can accelerate learning and problem-solving.

- Domain Expertise: Developing domain expertise in specific industries or business domains can provide data engineers with a competitive edge. Understanding a particular domain's unique data challenges and requirements helps design more effective and tailored data solutions.

- Adaptability and Flexibility: Data engineers should cultivate a mindset of adaptability and flexibility. As business requirements and technologies evolve, being open to change and embracing new approaches is crucial. This includes being willing to learn new programming languages, adapt to new development methodologies, and explore innovative solutions to data challenges.

By continuously learning, staying curious, and adapting to the changing landscape, data engineers can position themselves for success in the future of data engineering. They can contribute to developing cutting-edge data solutions, drive innovation, and significantly impact organizations' data-driven initiatives.

In the final section, we will explore real-world applications and case studies that showcase the impact of data engineering in various industries and provide practical insights into successful data engineering projects.

Real-World Applications and Case Studies

To better understand the practical impact of data engineering, let's explore some real-world applications and case studies that demonstrate how data engineering has transformed businesses and driven success across various industries.

Case Study: Data Engineering in a Leading Tech Company

Consider a leading tech company that operates a massive e-commerce platform. The company generates petabytes of data every day from user interactions, transactions, and product catalogs. The company invested heavily in data engineering to leverage this data for personalized recommendations, fraud detection, and supply chain optimization.

The data engineering team built a scalable, fault-tolerant data infrastructure using Apache Hadoop and Apache Spark. They designed data pipelines to ingest, process, and store data from various sources, including clickstream data, user profiles, and inventory systems. The team implemented data quality checks, validation, and enrichment processes to ensure the accuracy and completeness of the data.

The data engineers collaborated closely with data scientists to develop machine-learning models for personalized recommendations and fraud detection. They built feature engineering pipelines to extract relevant features from the data and feed them into the machine learning models. The team also implemented real-time data streaming using Apache Kafka to enable real-time recommendations and fraud alerts.

The impact of data engineering was significant. The personalized recommendations improved customer engagement and increased sales by 20%. The fraud detection system reduced fraudulent transactions by 90%, saving the company millions. The optimized supply chain management led to a 15% reduction in inventory costs and improved order fulfillment rates.

This case study highlights how data engineering can enable data-driven solutions that directly impact business metrics and drive competitive advantage.

Example: Data Engineering's Role in a Successful Marketing Campaign

Let's consider a retail company that launched a targeted marketing campaign to boost sales during the holiday season. The campaign's success relied heavily on data engineering efforts.

The data engineering team collected and integrated data from various sources, including customer demographics, purchase history, web analytics, and social media interactions. They built ETL pipelines to extract, transform, and load the data into a centralized data warehouse.

The team applied data cleansing techniques to handle missing values, remove duplicates, and standardize data formats. They performed data profiling to identify key customer segments and purchase patterns. The data engineers collaborated with the marketing team to define relevant customer attributes and create targeted customer segments.
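
The cleansing steps described here (handling missing values, removing duplicates, and standardizing formats) might look roughly like the following. The customer fields, the accepted date formats, and the choice to deduplicate on email are all assumptions made for this sketch, not details of the campaign itself.

```python
from datetime import datetime

# Assumed input date formats for this illustration.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def standardize_date(text):
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def cleanse(customers):
    """Standardize fields, fill a missing country, and drop duplicate emails."""
    seen_emails = set()
    cleaned = []
    for row in customers:
        email = (row.get("email") or "").strip().lower()
        if not email or email in seen_emails:
            continue  # Skip rows with no email and repeats of an earlier row.
        seen_emails.add(email)
        cleaned.append({
            "email": email,
            "country": (row.get("country") or "UNKNOWN").strip().upper(),
            "signup_date": standardize_date(row.get("signup_date") or ""),
        })
    return cleaned
```

Normalizing the email before checking for duplicates is what lets `" Ana@Example.com "` and `"ana@example.com"` be recognized as the same customer.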

The marketing team created personalized email campaigns, targeted ads, and promotional offers using the segmented data. The data engineering team also built real-time dashboards to monitor campaign performance and track key metrics such as open, click-through, and conversion rates.

The targeted marketing campaign was a resounding success. The personalized approach led to a 30% increase in email open rates and a 25% increase in conversion rates compared to previous campaigns. The real-time dashboards allowed the marketing team to make data-driven decisions and adjust the campaign strategy on the fly.
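
For readers who want to see how such dashboard metrics are typically derived, here is a short sketch. The counts in the usage note are made-up inputs, not figures from the campaign, and the choice to divide every metric by delivered emails is an assumption, since definitions vary between tools.

```python
def rate(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def campaign_metrics(delivered, opened, clicked, converted):
    """Compute common email metrics, all relative to delivered emails.

    Definitions vary between tools (some divide clicks by opens, for example),
    so the denominators here are an assumption.
    """
    return {
        "open_rate": rate(opened, delivered),
        "click_through_rate": rate(clicked, delivered),
        "conversion_rate": rate(converted, delivered),
    }


def relative_increase(before, after):
    """Relative change between two rates, as a fraction of the earlier rate."""
    return (after - before) / before
```

With hypothetical inputs, `campaign_metrics(delivered=1000, opened=260, clicked=80, converted=20)` gives an open rate of 0.26, and `relative_increase(0.20, 0.26)` is approximately 0.30. That is a 30% relative increase, which is different from a 30 percentage point increase.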

This example demonstrates how data engineering enables targeted marketing efforts, improves customer engagement, and drives measurable business results.

From Concept to Execution – Walking Through a Data Engineering Project

Let's walk through a typical data engineering project to understand the end-to-end process and the key stages involved.

- Requirements Gathering: The project begins with understanding the business requirements and defining the goals of the data engineering solution. This involves collaborating with stakeholders, such as business analysts, data scientists, and IT teams, to identify the data sources, data formats, and desired outputs.

- Data Architecture Design: The data engineering team designs the overall data architecture based on the requirements. This includes selecting the appropriate technologies, defining the data flow, and creating the data model.

- Data Ingestion and Integration: The next stage involves building data pipelines to ingest data from various sources and integrate them into a centralized data store. This may include extracting data from databases, APIs, or streaming sources, applying data transformations, and loading the data into a data warehouse or data lake.

- Data Processing and Transformation: Once the data is ingested, the data engineering team develops data processing and transformation logic to clean, enrich, and structure the data. This may involve applying data quality checks, handling data inconsistencies, and creating derived features or aggregations.

- Data Storage and Retrieval: The processed data is stored in a suitable data storage system, such as a data warehouse, data lake, or NoSQL database. The data engineering team designs the storage schema, optimizes query performance, and implements data partitioning and indexing strategies.

- Data Consumption and Visualization: The data engineering team collaborates with data consumers, such as data scientists, analysts, and business users, to enable easy and efficient data consumption. This may involve creating data APIs, building data visualization dashboards, or integrating with business intelligence tools.

- Testing and Deployment: Before deploying the data engineering solution, thorough testing is conducted to ensure data accuracy, pipeline reliability, and performance. The team performs unit testing, integration testing, and end-to-end testing. Once tested, the solution is deployed to the production environment following established deployment processes and best practices.

- Monitoring and Maintenance: After deployment, the data engineering team continuously monitors the data pipelines, data quality, and system performance. They set up monitoring dashboards, alerts, and logging mechanisms to identify and resolve issues proactively. Regular maintenance tasks, such as data backups, schema updates, and performance tuning, are performed to ensure the smooth operation of the data engineering solution.
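
The stages above can be sketched end to end in a few lines. This toy pipeline uses only Python's standard library, with an in-memory CSV string as the source and SQLite as the data store; the `sales` table, its columns, and the function names are hypothetical stand-ins for whatever sources and warehouse a real project would use.

```python
import csv
import io
import sqlite3


def extract(csv_text):
    """Ingestion: parse raw CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Processing: drop rows with a bad amount and normalize the rest."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            continue  # A real pipeline would quarantine and report these rows.
        cleaned.append((row["region"].strip().upper(), amount))
    return cleaned


def load(connection, rows):
    """Storage: write the cleaned rows into an indexed table."""
    connection.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, amount REAL)")
    connection.execute("CREATE INDEX IF NOT EXISTS idx_sales_region ON sales (region)")
    connection.executemany("INSERT INTO sales (region, amount) VALUES (?, ?)", rows)
    connection.commit()


def total_by_region(connection):
    """Consumption: an aggregate a dashboard or analyst might query."""
    cursor = connection.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    )
    return cursor.fetchall()


def run_pipeline(csv_text, connection):
    """Run extract, transform, and load in order, then return the aggregate."""
    load(connection, transform(extract(csv_text)))
    return total_by_region(connection)
```

Because each stage is a small function with plain inputs and outputs, each can be unit tested on its own, and `run_pipeline` can serve as an end-to-end test against an in-memory database created with `sqlite3.connect(":memory:")`.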

Throughout the project, the data engineering team follows agile development methodologies, collaborates closely with stakeholders, and iteratively improves the solution based on feedback and changing requirements.

Having walked through a typical data engineering project, we can appreciate the stages and effort involved in delivering a successful data solution. From concept to execution, data engineering is critical in enabling organizations to harness the power of data and drive business value.

Conclusion

In this blog post, we have explored data engineering from its foundations to its future. We started by defining it and highlighting its crucial role in today's data-driven landscape. We then delved into the historical context and the emergence of data engineering as a distinct discipline.

We examined the core responsibilities of a data engineer, including building and maintaining data pipelines, data modeling, and data warehousing. We discussed the tools and technologies that form the backbone of data engineering, such as databases, big data technologies, cloud platforms, and data orchestration tools like Apache Airflow.

We explored advanced data engineering concepts, including feature engineering, big data engineering, and real-time data processing. We also discussed the education and career path for aspiring data engineers, highlighting the skills and knowledge required to succeed in this field.

Best practices in data engineering, such as ensuring data quality, data governance, security, and collaboration, were covered to emphasize the importance of building robust and reliable data solutions. We also looked toward the future, discussing the current trends shaping data engineering, the role of AI and machine learning, and the importance of continuous learning and adaptability.

Real-world applications and case studies showcased the impact of data engineering in e-commerce and retail marketing. We walked through a typical data engineering project to understand the end-to-end process and the key stages involved.

In conclusion, data engineering is a vital discipline that enables organizations to leverage data for business insights and decision-making. Data engineers are the unsung heroes who build and maintain the infrastructure that powers data-driven initiatives. As data volume, variety, and velocity continue to grow, the role of data engineers becomes increasingly critical.

For those pursuing a career in data engineering, the future is bright. The demand for skilled data engineers is high, and the opportunities for growth and impact are immense. Data engineers can significantly contribute to organizations' success in the data-driven world by staying curious, continuously learning, and adapting to new challenges.
