DataOps: The Key to Agile and Reliable Data Management

Rahul Rastogi

May 2, 2024

Introduction

In today's data-driven world, organizations are grappling with managing and leveraging vast amounts of data effectively. The exponential growth of data and the increasing complexity of data ecosystems have given rise to a new approach known as DataOps. DataOps has emerged as a game-changer in modern data management, revolutionizing how organizations handle data and extract valuable insights.

DataOps, short for Data Operations, is a methodology that combines the principles of Agile development, DevOps, and lean manufacturing to streamline and optimize data management processes. It aims to break down silos between data teams, foster collaboration, and enable the rapid and reliable delivery of high-quality data to stakeholders.

DataOps is rooted in the growing need for organizations to become more data-driven and agile in their decision-making. As businesses recognize data's strategic value, they seek ways to harness its potential and transform it into actionable insights. However, traditional data management practices often struggle to keep pace with data's dynamic nature and ever-changing business requirements.

DataOps has evolved as a response to these challenges, drawing inspiration from the success of DevOps in software development. Just as DevOps revolutionized how software is developed and deployed, DataOps aims to bring similar agility, automation, and collaboration to data management processes.

In this comprehensive blog post, we will delve into the world of DataOps, exploring its key concepts, lifecycle, tools, and best practices. We will also examine real-world case studies and discuss the future trends shaping the DataOps landscape. By the end of this article, you will have a deep understanding of DataOps and how it can transform your organization's data management practices.

Understanding DataOps

DataOps is a collaborative and process-oriented methodology that focuses on improving the quality, speed, and reliability of data analytics and data management processes. It encompasses a set of practices, tools, and cultural principles that aim to break down silos between data teams, automate workflows, and promote continuous improvement.

At its core, DataOps seeks to address the challenges that organizations face when dealing with data, such as data silos, inconsistent data quality, lengthy data processing cycles, and the lack of collaboration between data teams. By applying the principles of Agile development and DevOps to data management, DataOps enables organizations to streamline their data workflows, reduce errors, and deliver trusted data to stakeholders faster.

The key components of the DataOps methodology include:

- Collaboration: DataOps promotes a culture of collaboration and communication among data teams, including data engineers, data scientists, data analysts, and business stakeholders. It encourages cross-functional teamwork and knowledge sharing to ensure alignment and efficiency.

- Automation: DataOps relies heavily on automation to streamline data workflows, reduce manual effort, and minimize errors. It leverages tools and technologies to automate data ingestion, transformation, validation, and deployment processes.

- Continuous Integration and Delivery (CI/CD): DataOps applies the principles of CI/CD to data pipelines, enabling frequent and automated testing, integration, and deployment of data changes. This ensures that data is consistently reliable and up-to-date.

- Monitoring and Observability: DataOps emphasizes the importance of monitoring and observability throughout the data lifecycle. It tracks data quality, performance, and usage metrics to identify issues, optimize processes, and ensure data reliability.

- Agile Methodologies: DataOps adopts Agile principles to deliver data projects iteratively and incrementally. It focuses on rapid prototyping, frequent feedback loops, and continuous improvement to align data initiatives with business needs.

- Data Governance and Security: DataOps incorporates data governance and security practices to ensure data integrity, privacy, and compliance. It establishes policies, standards, and controls to manage data access, protect sensitive information, and maintain regulatory compliance.

By embracing these components, DataOps aims to create a data-driven culture where data is treated as a valuable asset, and data teams can collaborate effectively to deliver insights that drive business value.

The DataOps Lifecycle

The DataOps lifecycle encompasses the end-to-end data management process, from data ingestion to data consumption. It consists of several stages that work together to streamline data workflows and ensure the delivery of high-quality data. Let's explore each stage of the DataOps lifecycle:

- Data Ingestion: This stage involves collecting and ingesting data from various sources, such as databases, APIs, streaming platforms, and file systems. DataOps ensures that data ingestion processes are automated, scalable, and resilient to handle the growing volume and variety of data.

- Data Preparation: In this stage, raw data is cleaned, transformed, and prepared for analysis. DataOps applies automated data quality checks, data validation rules, and data transformation logic to ensure data consistency and integrity. It also incorporates data lineage and data cataloging to provide visibility into data origins and dependencies.

- Data Integration: DataOps facilitates the integration of data from multiple sources into a unified view. It leverages data integration tools and techniques, such as ETL (Extract, Transform, Load) processes, data virtualization, and data federation, to create a cohesive and reliable data ecosystem.

- Data Testing and Validation: DataOps emphasizes the importance of rigorous testing and validation throughout the data lifecycle. It includes automated data quality checks, data profiling, and data reconciliation to identify and resolve data issues early in the process. DataOps also incorporates continuous integration and continuous delivery (CI/CD) practices to ensure data reliability and consistency.

- Data Deployment: Once data is prepared and validated, it is deployed to target systems, such as data warehouses, data lakes, or analytics platforms. DataOps automates the deployment process, ensuring data is securely and efficiently delivered to the right destinations. It also incorporates version control and release management practices to track and manage data changes.

- Data Monitoring and Observability: DataOps emphasizes the continuous monitoring and observability of data pipelines and data quality. It involves implementing monitoring tools and metrics to track data flow, identify anomalies, and detect performance bottlenecks. DataOps also establishes alerting mechanisms that proactively notify teams of data issues and enable rapid resolution.

- Data Consumption and Analysis: DataOps aims to enable data consumers, such as data analysts, data scientists, and business users, to access and analyze data effectively. DataOps provides self-service data access, data discovery tools, and collaborative platforms to empower users to derive insights and make data-driven decisions.

By streamlining the DataOps lifecycle, organizations can achieve faster time-to-insight, improve data quality, and enable agile data analytics. DataOps integrates seamlessly into the overall data lifecycle, ensuring that data flows smoothly from source to consumption and insights are delivered promptly and reliably.
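To make the stages above concrete, here is a minimal sketch of a pipeline that ingests, prepares, validates, and deploys a small dataset. It uses only the Python standard library. The sample data, the function names, and the in-memory "warehouse" list are illustrative assumptions, not part of any particular DataOps tool.

```python
import csv
import io

# Hypothetical raw input standing in for a file, API response, or stream.
RAW_CSV = """order_id,amount,country
1001,25.50,us
1002,,de
1003,40.00,FR
1003,40.00,FR
"""

def ingest(text):
    """Ingestion: read raw rows from a source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(text)))

def prepare(rows):
    """Preparation: drop incomplete rows, normalize types, remove duplicates."""
    seen, clean = set(), []
    for row in rows:
        if not row["amount"]:
            continue  # skip rows missing a required field
        if row["order_id"] in seen:
            continue  # skip rows whose key was already processed
        seen.add(row["order_id"])
        clean.append({
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]),
            "country": row["country"].upper(),
        })
    return clean

def validate(rows):
    """Testing and validation: stop the pipeline if a quality rule is violated."""
    ids = [r["order_id"] for r in rows]
    if len(ids) != len(set(ids)):
        raise ValueError("order_id must be unique")
    if any(r["amount"] <= 0 for r in rows):
        raise ValueError("amount must be positive")
    return rows

def deploy(rows, target):
    """Deployment: load validated rows into a target (here, a plain list)."""
    target.extend(rows)
    return len(rows)

warehouse = []  # stand-in for a data warehouse or data lake table
loaded = deploy(validate(prepare(ingest(RAW_CSV))), warehouse)
print(f"loaded {loaded} rows")
```

Keeping each stage as a small function with a single responsibility is what makes it practical to test, monitor, and redeploy stages independently, which is the point of treating the lifecycle as a pipeline.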

DataOps vs. DevOps

DataOps and DevOps share similar principles and goals but focus on different aspects of the technology landscape. While DevOps primarily deals with software development and deployment, DataOps extends these concepts to data management and analytics. Let's compare and contrast DataOps and DevOps:

| Similarities | Differences |
| --- | --- |
| Both DataOps and DevOps emphasize collaboration, automation, and continuous improvement. | DevOps focuses on developing and deploying software applications, while DataOps focuses on managing and delivering data and analytics. |
| They aim to break down silos between teams and promote a culture of shared responsibility. | DataOps deals with the unique challenges of data, such as data quality, data governance, and data security, which are not typically the primary focus of DevOps. |
| Both methodologies leverage automation tools and practices to streamline processes and reduce manual efforts. | DataOps involves a broader range of stakeholders, including data engineers, data scientists, data analysts, and business users, while DevOps primarily involves developers and IT operations teams. |
| They focus on delivering value to the business through faster and more reliable software or data pipelines. | DataOps requires specialized tools and technologies for data integration, data transformation, data quality, and data governance, which may differ from the tools used in DevOps. |

Despite these differences, DataOps and DevOps aim to enable organizations to be more agile, responsive, and data-driven. DataOps extends the principles of DevOps to the data domain, bringing the benefits of automation, collaboration, and continuous improvement to data management and analytics processes.

The synergies between DataOps and DevOps can be leveraged to create a holistic approach to delivering value to the business. By integrating DataOps practices into the software development lifecycle, organizations can ensure that data is treated as a first-class citizen and is seamlessly integrated into the application development process.

DataOps Tools and Technologies

DataOps relies on many tools and technologies to automate and streamline data workflows. These tools enable organizations to implement DataOps practices effectively and achieve the benefits of agility, reliability, and efficiency. Let's explore some of the key categories of DataOps tools:

| Category | Tool | Description |
| --- | --- | --- |
| Data Integration and ETL Tools | Apache NiFi | An open-source data integration tool that automates data flows between systems. |
| Data Integration and ETL Tools | Talend | A data integration platform that provides ETL, data quality, and data governance capabilities. |
| Data Integration and ETL Tools | Informatica | A comprehensive data integration solution that offers ETL, data quality, and data management features. |
| Data Orchestration and Workflow Management Tools | Apache Airflow | An open-source platform to programmatically author, schedule, and monitor workflows. |
| Data Orchestration and Workflow Management Tools | Dagster | A data orchestrator that enables the development and execution of data pipelines. |
| Data Orchestration and Workflow Management Tools | Prefect | A modern data workflow management system that simplifies data pipeline building, scheduling, and monitoring. |
| Data Quality and Profiling Tools | Great Expectations | An open-source data validation and profiling tool that allows teams to define and test data quality expectations. |
| Data Quality and Profiling Tools | Trifacta | A data wrangling platform that enables data exploration, cleaning, and transformation. |
| Data Quality and Profiling Tools | Alteryx | A self-service data analytics platform with data profiling and quality management capabilities. |
| Data Catalog and Discovery Tools | Alation | A collaborative data catalog that enables data discovery, governance, and analytics. |
| Data Catalog and Discovery Tools | Collibra | A data intelligence platform that provides data cataloging, governance, and lineage capabilities. |
| Data Catalog and Discovery Tools | Apache Atlas | An open-source data governance and metadata management framework. |
| Data Virtualization and Federation Tools | Denodo | A data virtualization platform that enables real-time data integration and abstraction. |
| Data Virtualization and Federation Tools | Dremio | A data lake engine that provides fast query performance and data virtualization capabilities. |
| Data Virtualization and Federation Tools | IBM Cloud Pak for Data | A unified data and AI platform with data virtualization and federation features. |

When choosing DataOps tools, organizations should consider scalability, integration capabilities, ease of use, and alignment with their existing technology stack. It's important to evaluate the organization's specific needs, the data teams' skills, and the data ecosystem's complexity to select the most suitable tools.

Commercial and open-source DataOps tools offer different advantages. Commercial tools often provide comprehensive features, enterprise-grade support, and seamless integration with other systems. On the other hand, open-source tools offer flexibility, cost-effectiveness, and the ability to customize and extend the functionality to meet specific requirements.

Ultimately, the choice of DataOps tools depends on the organization's goals, budget, and technical expertise. It's essential to conduct a thorough evaluation, consider the tools' long-term scalability and maintainability, and ensure they can effectively support DataOps practices and deliver value to the business.

Implementing a DataOps Strategy

Implementing a DataOps strategy requires a systematic approach that involves people, processes, and technology. Here are the key steps to successfully adopt DataOps in an organization:

- Evaluate the existing data infrastructure, processes, and team structure.

- Identify the pain points, bottlenecks, and areas for improvement in the data lifecycle.

- Assess the skills and capabilities of the data teams and identify any gaps.

- Establish clear goals and objectives for the DataOps initiative aligned with business priorities.

- Define metrics and key performance indicators (KPIs) to measure the success of DataOps implementation.

- Communicate the vision and benefits of DataOps to stakeholders and secure their buy-in.

- Foster a culture of collaboration, experimentation, and continuous improvement.

- Encourage cross-functional teamwork and break down silos between data teams.

- Promote a data-driven mindset and empower teams to make data-informed decisions.

- Develop standardized data ingestion, preparation, integration, and deployment processes.

- Implement automation and orchestration tools to streamline data workflows.

- Establish data quality and governance practices to ensure data integrity and compliance.

- Select and implement DataOps tools that align with the organization's requirements and technology stack.

- Provide training and support to data teams to effectively utilize the chosen tools.

- Continuously evaluate and optimize the tool ecosystem to meet evolving needs.

- Establish CI/CD pipelines for data workflows to enable frequent automated testing, integration, and deployment.

- Implement version control and release management practices for data artifacts and pipelines.

- Automate data quality checks and validation processes to ensure data reliability.

- Implement monitoring and observability tools to track data pipeline performance and data quality.

- Establish metrics and dashboards to measure the effectiveness of DataOps processes.

- Gather stakeholder feedback and iterate on the DataOps strategy based on insights.
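The steps on automated data quality checks and CI/CD can be sketched as a small "quality gate" that a CI job runs before promoting a dataset. This is a minimal illustration under stated assumptions: the `CUSTOMERS` sample, the three rules, and the `find_violations` and `ci_gate` names are hypothetical, and a real setup would more likely use a dedicated tool such as Great Expectations.

```python
# Hypothetical sample standing in for the output of a pipeline step.
CUSTOMERS = [
    {"customer_id": 1, "email": "ana@example.com", "age": 34},
    {"customer_id": 2, "email": "li@example.com", "age": 51},
]

def find_violations(rows):
    """Return a list of human-readable data quality violations."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        if row["customer_id"] in seen_ids:
            problems.append(f"row {i}: duplicate customer_id")
        seen_ids.add(row["customer_id"])
        if "@" not in row["email"]:
            problems.append(f"row {i}: malformed email")
        if not 0 <= row["age"] <= 120:
            problems.append(f"row {i}: age out of range")
    return problems

def ci_gate(rows):
    """Return a process exit code: 0 if the data passes, 1 otherwise."""
    problems = find_violations(rows)
    for problem in problems:
        print("FAIL:", problem)
    return 1 if problems else 0

# In a CI job, this value would typically be passed to sys.exit()
# so that a failing check blocks the deployment step.
exit_code = ci_gate(CUSTOMERS)
print("exit code:", exit_code)
```

Returning an exit code rather than raising keeps the gate easy to wire into most CI systems, which generally treat a non-zero exit status as a failed step.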

Best practices for implementing DataOps include:

- Start small and iteratively scale the DataOps initiative.

- Prioritize automation and standardization to reduce manual efforts and errors.

- Foster a culture of experimentation and embrace failure as an opportunity to learn and improve.

- Collaborate closely with business stakeholders to align DataOps efforts with business goals.

- Continuously monitor and optimize DataOps processes based on feedback and performance metrics.

Adopting DataOps can come with challenges like resistance to change, legacy systems, and skill gaps. To overcome these challenges, organizations should:

- Communicate the benefits and value of DataOps clearly to stakeholders.

- Provide training and support to upskill data teams and foster a DataOps mindset.

- Incrementally modernize legacy systems and integrate them into the DataOps ecosystem.

- Celebrate successes and share lessons learned to build momentum and support for DataOps.

By following these steps and best practices, organizations can successfully implement a DataOps strategy and realize the benefits of agility, reliability, and efficiency in their data management and analytics processes.

DataOps Frameworks and Platforms

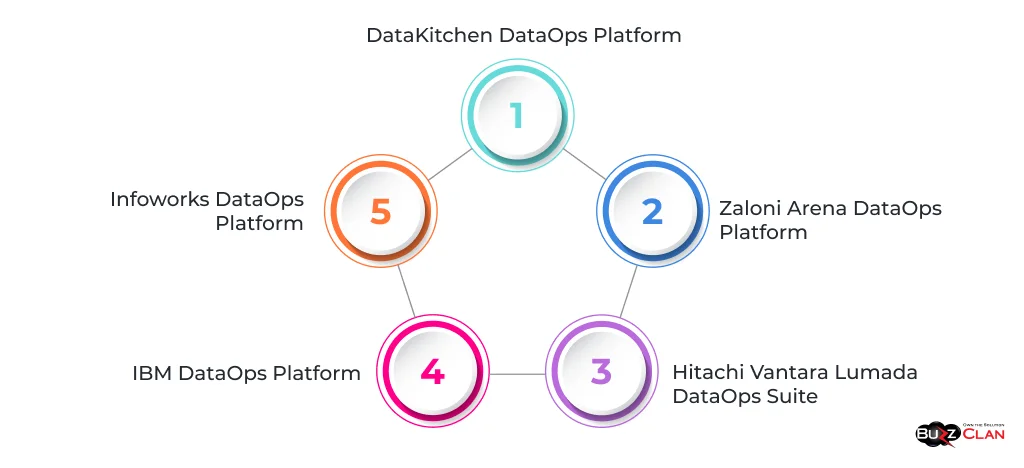

DataOps frameworks and platforms provide a structured approach to implementing DataOps practices and accelerating adoption of DataOps within organizations. These frameworks and platforms offer a range of features and capabilities to streamline data workflows, automate processes, and enable collaboration among data teams.

Let's explore some popular DataOps frameworks and platforms:

- Offers an end-to-end DataOps platform that automates the data pipeline lifecycle.

- Provides features for data orchestration, testing, monitoring, and deployment.

- Enables collaboration and version control for data artifacts and pipelines.

- Provides a unified platform for data governance, data quality, and data integration.

- Offers self-service data discovery and access for business users.

- Enables metadata management and data lineage tracking.

- Offers a comprehensive DataOps suite that includes data integration, data catalog, and data governance capabilities.

- Provides AI-driven automation and intelligence for data workflows.

- Enables real-time data processing and analytics.

- Provides a cloud-native DataOps platform that integrates data management, data governance, and data science capabilities.

- Offers automated data discovery, quality assessment, and lineage tracking.

- Enables collaborative data science workflows and model deployment.

- Offers an end-to-end DataOps platform that automates data ingestion, transformation, and delivery.

- Provides a visual interface for designing and managing data workflows.

- Enables real-time data processing and analytics.

When selecting a DataOps platform, organizations should consider the following criteria:

- Alignment with the organization's data ecosystem and technology stack.

- Scalability and performance to handle growing data volumes and complexity.

- Integration capabilities with existing data sources, tools, and systems.

- Ease of use and learning curve for data teams.

- Robustness of features for data orchestration, automation, and governance.

- Support for collaboration and version control for data artifacts and pipelines.

- Monitoring and observability features to track data pipeline performance.

- Total cost of ownership, including licensing, training, and maintenance costs.

It's important to thoroughly evaluate the features and capabilities of different DataOps platforms and assess their fit with the organization's specific requirements. Conducting proof-of-concept projects and engaging with vendor demonstrations can help make an informed decision.

Adopting a DataOps platform can provide several benefits, such as accelerated data pipeline development, improved data quality and reliability, enhanced collaboration among data teams, and increased agility in responding to changing business needs. However, it's crucial to have a well-defined DataOps strategy and governance framework in place to ensure the successful implementation and utilization of a DataOps platform.

The Role of DataOps in Data Governance

Data governance is critical to managing and leveraging data assets effectively. It involves establishing policies, standards, and processes to ensure data quality, security, privacy, and compliance. DataOps plays a significant role in supporting and enhancing data governance initiatives within organizations.

DataOps influences data governance in several ways:

- DataOps practices, such as automated testing and data validation, help ensure the quality and integrity of data throughout its lifecycle.

- DataOps tools and processes enable the identification and resolution of data quality issues early in the pipeline, reducing the risk of downstream errors.

- DataOps emphasizes the importance of data lineage and traceability, allowing organizations to track data's origin, movement, and transformations.

- DataOps tools provide visibility into data flows, making it easier to understand data dependencies and identify the impact of changes.

- DataOps incorporates security and privacy controls into the data pipeline, ensuring that sensitive data is protected and access is granted only to authorized users.

- DataOps practices, such as data masking and encryption, help safeguard sensitive information and maintain compliance with data protection regulations.

- DataOps promotes standardized data formats, schemas, and metadata, ensuring consistency and interoperability across different systems and teams.

- DataOps processes enforce data naming conventions and data definitions, reducing ambiguity and promoting a shared understanding of data assets.

- DataOps fosters collaboration among data teams, including data stewards, data owners, and data consumers, enabling effective data governance.

- DataOps establishes clear roles and responsibilities for data management, promoting accountability and ownership of data assets.
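The data masking practice mentioned above can be illustrated with a short sketch that pseudonymizes one field with a keyed hash and partially masks another. The field names, the `SECRET_KEY` value, and the helper functions are assumptions made for this example. Whether keyed hashing is sufficient for a given regulation is a question for the organization's compliance team.

```python
import hashlib
import hmac

# Assumption: in practice the key would come from a secrets manager,
# never from source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value: str) -> str:
    """Replace a sensitive value with a keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so joins on the
    field still work, but the original value is not visible.
    """
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_email(email: str) -> str:
    """Keep the domain for analytics and hide most of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

def mask_record(record: dict, sensitive_fields=("ssn",)) -> dict:
    """Return a masked copy of the record; the input is left unchanged."""
    masked = dict(record)
    for field in sensitive_fields:
        if field in masked:
            masked[field] = pseudonymize(masked[field])
    if "email" in masked:
        masked["email"] = mask_email(masked["email"])
    return masked

# Hypothetical record used only for illustration.
patient = {"patient_id": 7, "ssn": "123-45-6789", "email": "jane.doe@example.org"}
safe = mask_record(patient)
print(safe["email"])
```

Applying the masking step inside the pipeline, rather than in each downstream report, is what lets the policy be enforced consistently and audited in one place.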

By integrating DataOps practices into data governance frameworks, organizations can enhance their data's reliability, security, and quality. DataOps enables automating and enforcing data governance policies, reducing manual efforts, and ensuring consistent adherence to standards.

Moreover, DataOps provides the tools and processes to monitor and measure data quality and compliance metrics. It enables the generation of data quality reports, data lineage documentation, and audit trails, supporting regulatory compliance and data governance reporting requirements.

Combining DataOps and data governance creates a powerful synergy, enabling organizations to manage their data assets effectively, maintain data integrity, and derive valuable insights while ensuring compliance and security.

DataOps in the Industry

DataOps has gained significant traction across various industries as organizations recognize the value of agile and reliable data management practices. Let's explore some case studies (anonymized for confidentiality and privacy reasons) demonstrating the successful implementation of DataOps in real-world scenarios.

- A leading healthcare provider implemented DataOps to streamline their data pipeline and improve patient care.

- By automating data ingestion and quality checks, they reduced data errors and inconsistencies, enabling faster and more accurate clinical decision-making.

- DataOps practices allowed them to securely integrate data from multiple sources, including electronic health records (EHR) and IoT devices, enabling personalized patient care.

- A global financial institution adopted DataOps to enhance its risk management and regulatory compliance processes.

- By implementing automated data validation and lineage tracking, they identified and mitigated data quality issues, reducing the risk of financial losses and regulatory penalties.

- DataOps enabled them to quickly adapt to changing regulatory requirements and generate accurate and timely reports for compliance purposes.

- A major retail chain leveraged DataOps to optimize its supply chain and improve customer experience.

- They gained real-time visibility into their operations by integrating data from various sources, including sales transactions, customer interactions, and inventory systems.

- DataOps practices allowed them to quickly process and analyze large volumes of data, enabling data-driven decision-making and personalized marketing campaigns.

- A telecommunications company implemented DataOps to improve network performance and customer satisfaction.

- By automating data collection and analysis from network devices and customer feedback channels, they could proactively identify and resolve network issues.

- DataOps enabled them to deliver real-time network performance insights to operations teams, reducing downtime and improving customer experience.

These case studies highlight the impact of DataOps on the speed and quality of data analysis across different industries. By adopting DataOps practices, organizations were able to:

- Reduce data processing time and accelerate time-to-insights.

- Improve data quality and reliability, leading to more accurate decision-making.

- Enable real-time data processing and analysis, driving operational efficiency and agility.

- Enhance collaboration among data teams, breaking down silos and fostering innovation.

- Ensure compliance with regulatory requirements and maintain data security and privacy.

The successful implementation of DataOps in these industries demonstrates its potential to transform data management practices and drive significant business value. As more organizations recognize the benefits of DataOps, its adoption is expected to grow across various sectors, enabling data-driven innovation and competitive advantage.

The Future of DataOps

As data continues to grow in volume, variety, and velocity, the future of DataOps is poised for significant advancements and innovations. Here are some emerging trends that are shaping the future of DataOps:

- Integrating AI and ML technologies into DataOps processes will enable intelligent automation and optimization of data workflows.

- AI-powered anomaly detection, predictive maintenance, and self-healing capabilities will enhance the reliability and resilience of data pipelines.

- ML algorithms will enable adaptive and self-learning DataOps processes, continuously improving data quality and performance.

- Adopting serverless computing and cloud-native architectures will revolutionize DataOps deployment and scalability.

- Serverless DataOps will allow organizations to focus on data workflows and logic while cloud providers automatically manage and scale the underlying infrastructure.

- Cloud-native DataOps will enable the development and deployment of data pipelines as microservices, improving agility and flexibility.

- As edge computing becomes more prevalent, DataOps practices will extend to the edge, enabling real-time data processing and analysis closer to the data sources.

- Edge DataOps will address the challenges of data locality, bandwidth limitations, and latency, enabling faster and more efficient data processing at the edge.

- DataOps tools and frameworks will evolve to support the unique requirements of edge computing environments.

- The emergence of data mesh and data fabric architectures will drive the evolution of DataOps practices.

- DataOps will play a crucial role in enabling the decentralized ownership and management of data products in a data mesh architecture.

- DataOps practices will facilitate the seamless integration and governance of data across distributed data fabrics, ensuring data quality, consistency, and security.

- The future of DataOps will emphasize collaboration and self-service capabilities, empowering data teams and business users to work together seamlessly.

- DataOps platforms will provide intuitive interfaces and low-code/no-code tools, enabling non-technical users to participate in data workflows and analysis.

- Collaborative DataOps will foster cross-functional teamwork, enabling data scientists, analysts, and business stakeholders to jointly develop and iterate on data products.
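As a rough illustration of the anomaly detection trend listed above, the sketch below flags an unusual daily row count using a simple z-score. This is a plain statistical stand-in, not a machine learning model, and the sample counts, the `is_anomalous` name, and the threshold of 3 standard deviations are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations
    away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a constant series is unusual
    return abs(latest - mu) / sigma > threshold

# Hypothetical row counts from the last seven pipeline runs.
daily_row_counts = [10_120, 9_980, 10_050, 10_200, 9_900, 10_075, 10_010]

print(is_anomalous(daily_row_counts, 10_100))  # a typical day
print(is_anomalous(daily_row_counts, 4_200))   # a sharp drop in volume
```

A learned model could replace the z-score without changing how the check is wired into the pipeline, since callers only depend on the boolean result.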

The future of DataOps is expected to witness significant market growth as organizations increasingly recognize the value of agile and automated data management practices. According to industry reports, the global DataOps platform market is projected to grow at a compound annual growth rate (CAGR) of over 20% during the forecast period (2021-2026).
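To put the growth figure in perspective, recall the standard definition of CAGR. As a worked illustration only, assume exactly 20% per year (the projection says "over 20%") compounded over the five years from 2021 to 2026, with $V_{2021}$ and $V_{2026}$ denoting market size at the start and end of the period:

$$
\text{CAGR} = \left(\frac{V_{2026}}{V_{2021}}\right)^{1/5} - 1
\quad\Longrightarrow\quad
\frac{V_{2026}}{V_{2021}} = (1 + 0.20)^{5} \approx 2.49
$$

Under those assumptions, the market would be roughly two and a half times its 2021 size by 2026.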

As DataOps evolves and matures, it will play a pivotal role in enabling organizations to harness the full potential of their data assets. DataOps will empower organizations to deliver trusted and actionable insights at the speed of business, driving innovation, competitive advantage, and customer satisfaction.

However, the success of DataOps in the future will depend on organizations' ability to adapt to new technologies, embrace a data-driven culture, and continuously refine their DataOps practices based on feedback and evolving business needs.

Conclusion

DataOps has emerged as a transformative approach to data management, enabling organizations to achieve agility, reliability, and efficiency in their data workflows. By adopting DataOps practices, organizations can streamline their data pipelines, improve data quality, and accelerate time-to-insights.

Throughout this blog post, we have explored the key concepts of DataOps, including its lifecycle, tools, frameworks, and best practices. We have seen how DataOps extends the principles of DevOps to data management, fostering collaboration, automation, and continuous improvement.

DataOps is critical in supporting data governance initiatives, helping ensure data quality, security, and compliance. Real-world case studies have demonstrated the impact of DataOps in various industries, showcasing its potential to drive business value and innovation.

As we look towards the future, DataOps is poised for significant advancements, with emerging trends such as AI/ML integration, serverless and cloud-native architectures, edge computing, data mesh, and self-service capabilities shaping its evolution.

The importance of DataOps cannot be overstated in today's data-driven world. Organizations that embrace DataOps and invest in building a strong data culture will be well-positioned to unlock the full potential of their data assets, make informed decisions, and stay ahead of the competition.

As the volume and complexity of data continue to grow, DataOps will become an essential component of modern data management strategies. It will enable organizations to transform raw data into actionable insights, drive innovation, and create value for their customers and stakeholders.

If your organization is looking to embark on a DataOps journey, it is crucial to assess your current data landscape, define clear goals and objectives, and develop a roadmap for implementation. Partnering with experienced DataOps practitioners and leveraging best practices and proven frameworks can help ensure a successful DataOps adoption.

The future belongs to organizations that can harness the power of data and transform it into a strategic asset. By embracing DataOps and continuously refining their data management practices, organizations can unlock new opportunities, drive innovation, and stay ahead in the ever-evolving data-driven landscape.
