Mastering ETL: Techniques, Tools, and Best Practices

Rahul Rastogi

Apr 24, 2024

Introduction

In the era of big data, organizations are collecting and storing vast amounts of information from various sources. However, raw data alone is not enough to drive informed business decisions. To yield meaningful insights, data must be extracted, transformed, and loaded into a centralized system for analysis. This is where ETL (Extract, Transform, Load) comes into play.

ETL is a critical process in data management that enables organizations to integrate data from multiple sources, transform it into a consistent format, and load it into a target system, such as a data warehouse or a data lake. The ETL process forms the backbone of data warehousing and business intelligence initiatives, ensuring that data is accurate, reliable, and ready for analysis.

The concept of ETL has evolved from simple data movement scripts to complex data integration platforms. As data volumes have grown and data sources have become more diverse, ETL processes have adapted to handle the increasing complexity and scale of data management. Today, ETL is an essential component of modern data architectures, enabling organizations to harness the power of data for competitive advantage.

In this comprehensive article, we will dive deep into the world of ETL, exploring its fundamental concepts, stages, tools, and best practices. We will also discuss advanced ETL processes, the impact of cloud computing on ETL, and future trends shaping the ETL landscape. By the end of this article, you will have a solid understanding of ETL and its significance in driving data-driven decision-making.

ETL Explained

ETL stands for Extract, Transform, and Load, the three fundamental stages involved in data integration. The primary goal of ETL is to extract data from various sources, transform it into a consistent and usable format, and load it into a target system for analysis and reporting.

ETL is crucial in data warehousing and business intelligence. It consolidates data from disparate sources, such as transactional databases, CRM systems, ERP systems, and external data providers. By integrating and transforming data into a unified structure, ETL enables organizations to gain a holistic view of their business operations and make informed decisions based on accurate and timely information.

The three stages of ETL can be described as follows:

- Extract: The first stage involves extracting data from source systems. This can include databases, files, APIs, or any other data source relevant to the business.

- Transform: In the second stage, the extracted data is transformed to ensure consistency, quality, and compatibility with the target system. This may involve data cleansing, deduplication, validation, and formatting.

- Load: The final stage involves loading the transformed data into the target system, such as a data warehouse or a data lake, where business users and decision-makers can access and analyze it.
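The three stages above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the sample CSV text, the `orders` table, and the function names are all assumptions made for the example, and an in-memory SQLite database stands in for a data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read a file, database, or API.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,20.00
2,BOB,20.00
3,carol,not_a_number
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, cast amounts, drop invalid and duplicate rows."""
    seen, clean = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # deduplicate on order_id
        seen.add(order_id)
        clean.append((order_id, row["customer"].strip().title(), amount))
    return clean

def load(conn, rows):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(conn, text=RAW_CSV):
    """Run extract, transform, and load in sequence."""
    load(conn, transform(extract(text)))
```

Calling `run_pipeline(sqlite3.connect(":memory:"))` leaves two rows in `orders`: the duplicate order and the row with an unparseable amount are dropped during the transform step.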

The ETL process is typically executed in a batch mode, where data is processed at regular intervals, such as daily or weekly. However, with the increasing demand for real-time analytics, modern ETL solutions also support near-real-time or streaming data integration, allowing organizations to process and analyze data as it arrives.

ETL is essential for maintaining data quality, consistency, and reliability across an organization. By automating data integration and transformation processes, ETL reduces manual efforts, minimizes errors, and ensures that data is accurate and up-to-date for analysis and reporting purposes.

The "Extract" Phase

The extraction phase is the first step in the ETL process, where data is retrieved from various source systems. The extraction phase aims to collect data efficiently and reliably from different sources and prepare it for the subsequent transformation and loading stages.

Data extraction can involve a wide range of data sources, including:

| Data Source | Extraction Approach |
| --- | --- |
| Relational databases | Extract data from databases such as MySQL, Oracle, or SQL Server using SQL queries or database connectors. |
| Files | Extract data from files, such as CSV, TSV, or XML files, using file readers or parsers. |
| APIs | Retrieve data from web services or APIs using REST or SOAP. |
| CRM and ERP systems | Extract data from customer relationship management (CRM) systems like Salesforce or enterprise resource planning (ERP) systems like SAP or Oracle. |
| Social media platforms | Collect data from social media APIs like Twitter or Facebook for sentiment analysis or trend monitoring. |
| IoT devices | Extract data from sensors, machines, or other devices for real-time monitoring and analysis. |

The extraction process can be challenging due to various factors, such as:

- Data volume: Dealing with large volumes of data that need to be extracted efficiently without impacting the performance of source systems.

- Data variety: Handling different data formats, structures, and types from multiple sources, requiring data normalization and standardization.

- Data quality: Ensuring the accuracy, completeness, and consistency of extracted data, handling missing values, duplicates, or inconsistencies.

- Data security: Implementing appropriate security measures, such as encryption or access controls, to protect sensitive data during extraction.

To overcome these challenges, best practices in the extraction phase include:

- Incremental extraction: This method involves extracting only the changed or new data since the last extraction, instead of the entire dataset every time, to improve efficiency and reduce load on source systems.

- Parallel extraction: Extracting data from multiple sources simultaneously using parallel processing techniques to speed up the extraction process.

- Data validation: Applying data validation rules and checks during extraction to identify and handle data quality issues early in the ETL pipeline.

- Error handling: Implementing robust mechanisms to gracefully handle extraction failures, network issues, or source system unavailability.

- Data lineage: Maintaining data lineage information to track the origin and movement of data throughout the ETL process for auditing and troubleshooting purposes.
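Incremental extraction is often implemented with a "watermark": the pipeline remembers the latest change timestamp it has seen and asks the source only for newer rows. The sketch below assumes a hypothetical `customers` table with an `updated_at` column stored as ISO 8601 text; the table, column names, and helper functions are illustrative only.

```python
import sqlite3

def extract_incremental(conn, last_watermark):
    """Return rows changed after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark only if something was extracted.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

def make_demo_source():
    """Build a hypothetical in-memory source table for illustration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [
            (1, "Alice", "2024-04-01T09:00:00"),
            (2, "Bob", "2024-04-02T09:00:00"),
            (3, "Carol", "2024-04-03T09:00:00"),
        ],
    )
    return conn
```

The strict `>` comparison keeps reruns from re-extracting the same rows, but it can miss rows that share the watermark's exact timestamp. Depending on the source, a pipeline may prefer `>=` combined with deduplication downstream.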

By following these best practices and using appropriate tools and techniques, organizations can ensure efficient and reliable data extraction from various sources, setting the foundation for the subsequent transformation and loading stages.

The "Transform" Phase

The transformation phase is the heart of the ETL process, where extracted data undergoes a series of modifications and enhancements to ensure its quality, consistency, and compatibility with the target system. Transformation logic is critical to maintaining data integrity and preparing data for analysis and reporting.

Data transformation involves various techniques and methods, including:

| Technique | Description |
| --- | --- |
| Data cleansing | Identifying and correcting data quality issues, such as missing values, duplicates, or inconsistent formats. Data cleansing techniques include data profiling, data validation, and data standardization. |
| Data deduplication | Eliminating duplicate records or merging them into a single representation to ensure data consistency and accuracy. |
| Data validation | Applying business rules and constraints to validate data against predefined criteria, such as data type checks, range checks, or pattern matching. |
| Data enrichment | Enhancing data with additional information from external sources or derived attributes to provide more context and value for analysis. |
| Data aggregation | Summarizing data at different levels of granularity, such as calculating totals, averages, or counts, to support reporting and analysis requirements. |
| Data integration | Combining data from multiple sources into a unified structure, resolving data conflicts, and ensuring data consistency across different systems. |
| Data format conversion | Converting data formats, such as date/time representations or numeric formats, to ensure compatibility with the target system and analysis tools. |
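Several of the techniques in the table (cleansing, validation, deduplication, and format conversion) can be combined in one small transformation step. The sketch below is an illustration under assumptions: the field names, the `DD/MM/YYYY` source date format, and the validation rules are hypothetical stand-ins for real business rules.

```python
from datetime import datetime

def clean_record(record):
    """Cleanse one record: trim text, standardize case, convert the date format."""
    return {
        "email": record["email"].strip().lower(),
        "country": record["country"].strip().upper(),
        # Convert DD/MM/YYYY (assumed source format) to ISO 8601.
        "signup_date": datetime.strptime(record["signup_date"], "%d/%m/%Y").date().isoformat(),
        "age": int(record["age"]),
    }

def is_valid(record):
    """Validate: simple pattern and range checks standing in for business rules."""
    return "@" in record["email"] and 0 < record["age"] < 120

def transform(records):
    """Cleanse, validate, and deduplicate (by email) a list of records."""
    seen, output, rejected = set(), [], []
    for raw in records:
        try:
            rec = clean_record(raw)
        except (KeyError, ValueError):
            rejected.append(raw)  # missing field or unparseable value
            continue
        if not is_valid(rec):
            rejected.append(raw)
        elif rec["email"] not in seen:
            seen.add(rec["email"])
            output.append(rec)
    return output, rejected
```

Returning rejected records separately, rather than silently dropping them, keeps the failures available for the error handling and auditing practices discussed in this section.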

Transformation techniques can be implemented using various tools and technologies, such as:

- SQL: Using SQL queries and stored procedures to transform data within relational databases.

- ETL tools: Leveraging specialized ETL tools, such as Informatica, Talend, or SSIS, which provide graphical interfaces and pre-built transformations for data manipulation.

- Programming languages: For complex data processing requirements, write custom transformation logic using programming languages like Python, Java, or Scala.

- Data quality tools: Utilizing data quality tools, such as Talend Data Quality or IBM InfoSphere Information Server, to automate data cleansing, validation, and standardization tasks.

The choice of transformation techniques and tools depends on the specific requirements of the ETL project, the complexity of the data, and the technical skills of the ETL development team.

Best practices in the transformation phase include:

- Data lineage and auditing: Maintaining data lineage information to track the transformations applied to data and enabling auditing capabilities for compliance and troubleshooting purposes.

- Data validation and error handling: Implementing robust data validation checks and error handling mechanisms to identify and handle data quality issues and ensure data integrity.

- Performance optimization: Optimizing transformation logic for performance, such as using efficient SQL queries, parallel processing, or in-memory computing techniques.

- Reusability and modularity: Designing reusable and modular transformation components to promote code reuse, maintainability, and scalability.

- Documentation and version control: Documenting transformation logic, business rules, and dependencies, and using version control systems to manage changes and collaborate effectively.

By applying appropriate transformation techniques and following best practices, organizations can ensure that data is accurately transformed, enriched, and prepared for the final loading stage, enabling reliable and meaningful analysis and reporting.

The "Load" Phase

The loading phase is the final step in the ETL process, where the transformed data is loaded into the target system, such as a data warehouse or a data lake. The goal of the loading phase is to efficiently and reliably transfer the processed data into the target system, ensuring data consistency and availability for analysis and reporting.

There are two main strategies for loading data into the target system:

| Strategy | Description |
| --- | --- |
| Full load | In a full load, the entire dataset is loaded into the target system, replacing any existing data. This approach is typically used for initial data loads or when a complete data refresh is required. |
| Incremental load | In an incremental load, only the data that is new or changed since the last load is added to or updated in the target system. This approach is more efficient and reduces the load time compared to a full load, especially for large datasets. |

The choice between full and incremental load depends on data volume, data change frequency, and business requirements. Incremental loads are generally preferred for ongoing data integration scenarios, while full loads are used for the initial data population or when a complete data refresh is necessary.
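The difference between the two strategies can be illustrated with SQLite. In this sketch the `products` table and the function names are hypothetical. The incremental load uses SQLite's `ON CONFLICT ... DO UPDATE` upsert clause, which assumes SQLite 3.24 or later; other databases offer comparable statements such as `MERGE`.

```python
import sqlite3

def make_target():
    """Create a hypothetical in-memory target table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price REAL)")
    return conn

def full_load(conn, rows):
    """Full load: replace the entire contents of the target table."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM products")
        conn.executemany("INSERT INTO products (sku, price) VALUES (?, ?)", rows)

def incremental_load(conn, rows):
    """Incremental load: insert new rows and update changed ones (upsert)."""
    with conn:
        conn.executemany(
            # Requires SQLite 3.24+ for the upsert syntax.
            "INSERT INTO products (sku, price) VALUES (?, ?) "
            "ON CONFLICT(sku) DO UPDATE SET price = excluded.price",
            rows,
        )
```

After `full_load(conn, [("A", 1.0), ("B", 2.0)])`, calling `incremental_load(conn, [("B", 2.5), ("C", 3.0)])` updates `B`, adds `C`, and leaves `A` untouched.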

During the loading phase, several considerations need to be taken into account:

- Data consistency: Ensuring the loaded data is consistent with the source data and the applied transformation logic. This involves data validation checks, reconciliation processes, and error-handling mechanisms.

- Data integrity: Maintaining the integrity of the loaded data by enforcing referential integrity, unique constraints, and other data integrity rules defined in the target system.

- Data availability: Ensuring the loaded data is available for querying and analysis as soon as the loading process is complete. This may involve indexing, partitioning, or optimizing the target system for query performance.

- Load performance: Optimizing the loading process for performance, such as using bulk loading techniques, parallel processing, or partitioning strategies to minimize load time and resource utilization.

- Error handling and recovery: Implementing robust error handling and recovery mechanisms to handle load failures, data inconsistencies, or system issues. This includes logging, error reporting, and automated recovery processes.

To handle load failures and ensure data consistency, various techniques can be employed:

- Data validation checks: Performing data validation checks before and after the loading process to identify and handle data inconsistencies or errors.

- Transactional loading: Using transactional loading techniques, such as database transactions or staging tables, to ensure atomicity and consistency of the loaded data.

- Data reconciliation: Implementing data reconciliation processes to compare the source and target data, identify discrepancies, and resolve them through automated or manual intervention.

- Rollback and recovery: Enabling rollback and recovery mechanisms to revert the target system to a consistent state in case of load failures or data integrity issues.

- Monitoring and alerting: Setting up monitoring and alerting systems to detect load failures, data inconsistencies, or performance issues and triggering appropriate actions or notifications.
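Transactional loading, validation checks, and rollback can work together as in the following sketch. It assumes a hypothetical `sales` target with a `sales_staging` table beside it, and a single illustrative validation rule (no negative amounts). Rows reach the target only if the whole batch passes.

```python
import sqlite3

def make_sales_db():
    """Create hypothetical target and staging tables in memory."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, amount REAL)")
    conn.execute("CREATE TABLE sales_staging (id INTEGER, amount REAL)")
    return conn

def load_with_staging(conn, rows):
    """Load rows via a staging table; publish to the target only if checks pass."""
    try:
        with conn:  # one transaction: commit on success, roll back on any exception
            conn.execute("DELETE FROM sales_staging")
            conn.executemany("INSERT INTO sales_staging VALUES (?, ?)", rows)
            # Validation check before publishing: no negative amounts allowed.
            bad = conn.execute(
                "SELECT COUNT(*) FROM sales_staging WHERE amount < 0"
            ).fetchone()[0]
            if bad:
                raise ValueError(f"{bad} invalid row(s) in staging")
            conn.execute("INSERT INTO sales SELECT * FROM sales_staging")
        return True
    except (ValueError, sqlite3.Error):
        return False  # the transaction was rolled back; target is unchanged
```

A real pipeline would also log the failure and raise an alert instead of only returning `False`; that part is omitted here to keep the sketch short.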

Best practices in the loading phase include:

- Data partitioning: Partitioning the target system based on relevant dimensions, such as date or geography, to improve query performance and data management.

- Indexing: Creating appropriate indexes on the target system to optimize query performance and support efficient data retrieval.

- Data compression: Applying data compression techniques to reduce storage footprint and improve query performance, especially for large datasets.

- Data security: Implementing data security measures, such as access controls, encryption, or data masking, to protect sensitive data in the target system.

- Data archiving and retention: Defining data archiving and retention policies to manage historical data, optimize storage utilization, and comply with regulatory requirements.

By following these best practices and implementing appropriate loading strategies and techniques, organizations can ensure that data is efficiently and reliably loaded into the target system, ready for analysis and reporting.

ETL Tools and Technologies

ETL tools and technologies are crucial in automating and streamlining the ETL process, enabling organizations to efficiently extract, transform, and load data from various sources into target systems. A wide range of commercial and open-source ETL tools is available, each with its own strengths and capabilities.

Some popular commercial ETL tools include:

- Informatica PowerCenter: A comprehensive ETL platform with a graphical development environment, pre-built connectors, and advanced data integration features.

- IBM InfoSphere DataStage: An enterprise-level ETL tool offering scalable data integration, quality management, and real-time data processing capabilities.

- Oracle Data Integrator: A powerful ETL tool that supports various data sources, provides a graphical interface for data mapping and transformation, and integrates with Oracle's database technologies.

- Microsoft SQL Server Integration Services (SSIS): A Microsoft ETL tool that integrates with SQL Server and provides a visual designer for building and managing ETL workflows.

- SAP Data Services: An ETL tool offering data integration, quality management, and profiling capabilities, with tight integration with SAP's business applications.

In addition to commercial tools, several open-source ETL tools provide cost-effective and flexible options for data integration:

- Apache NiFi: A robust data integration platform that enables real-time data processing, data flow management, and data provenance tracking.

- Talend Open Studio: An open-source ETL tool that provides a graphical development environment, a wide range of connectors, and data quality management features.

- Pentaho Data Integration (PDI): An open-source ETL tool that offers a user-friendly interface, data transformation capabilities, and integration with various data sources and targets.

- Apache Airflow: A platform to programmatically author, schedule, and monitor workflows, allowing for the creation of complex ETL pipelines.

- Apache Kafka: A distributed streaming platform that enables real-time data integration, processing, and pipelines.

When comparing ETL tools, it's essential to consider factors such as scalability, performance, ease of use, integration capabilities, and cost. The choice of ETL tool depends on the organization's specific requirements, the complexity of the data integration scenarios, and the technical skills of the ETL development team.

In recent years, there has been a shift towards ELT (Extract, Load, Transform) approaches, where data is first loaded into the target system and then transformed within it. ELT leverages the processing power and scalability of modern data platforms, such as Hadoop or cloud-based data warehouses, to perform transformations directly on the loaded data.

Popular platforms that support ELT include:

- Amazon Redshift: A cloud-based data warehouse that provides built-in ELT capabilities, allowing for efficient data loading and transformation within the data warehouse itself.

- Google BigQuery: A serverless, highly scalable data warehouse that supports ELT workflows and enables data loading and transformation using SQL-based queries.

- Snowflake: A cloud-based data warehousing platform that offers ELT capabilities, allowing for data loading and transformation using SQL and other programming languages.

The choice between ETL and ELT approaches depends on factors such as data volume, data complexity, processing requirements, and the capabilities of the target system. ELT approaches are often preferred when dealing with large-scale data processing and leveraging the power of modern data platforms.
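To make the ELT ordering concrete, the sketch below loads raw values unchanged and only then cleans and aggregates them with SQL inside the target engine. SQLite stands in for a cloud data warehouse here, and the `raw_events` and `user_totals` tables are hypothetical names chosen for the example.

```python
import sqlite3

def elt(conn, raw_rows):
    """Load raw data unchanged, then transform it with SQL inside the target."""
    conn.execute("CREATE TABLE raw_events (user_id TEXT, amount TEXT)")
    # Load step: raw values land as-is, with no cleansing beforehand.
    conn.executemany("INSERT INTO raw_events VALUES (?, ?)", raw_rows)
    # Transform step: runs in the target engine after loading.
    conn.execute(
        """
        CREATE TABLE user_totals AS
        SELECT LOWER(TRIM(user_id)) AS user_id,
               SUM(CAST(amount AS REAL)) AS total
        FROM raw_events
        WHERE TRIM(amount) <> ''
        GROUP BY LOWER(TRIM(user_id))
        """
    )
    conn.commit()
```

Because the raw table is kept, the transformation can be rewritten and rerun later without extracting from the sources again, which is one practical reason teams choose ELT.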

Regardless of the chosen approach, ETL tools and technologies are vital in automating and simplifying data integration, enabling organizations to efficiently extract, transform, and load data from diverse sources into target systems for analysis and reporting.

Designing an ETL Process

Designing an effective ETL process is crucial for ensuring data quality, consistency, and reliability in a data integration project. Creating a robust and efficient ETL workflow involves several steps and considerations.

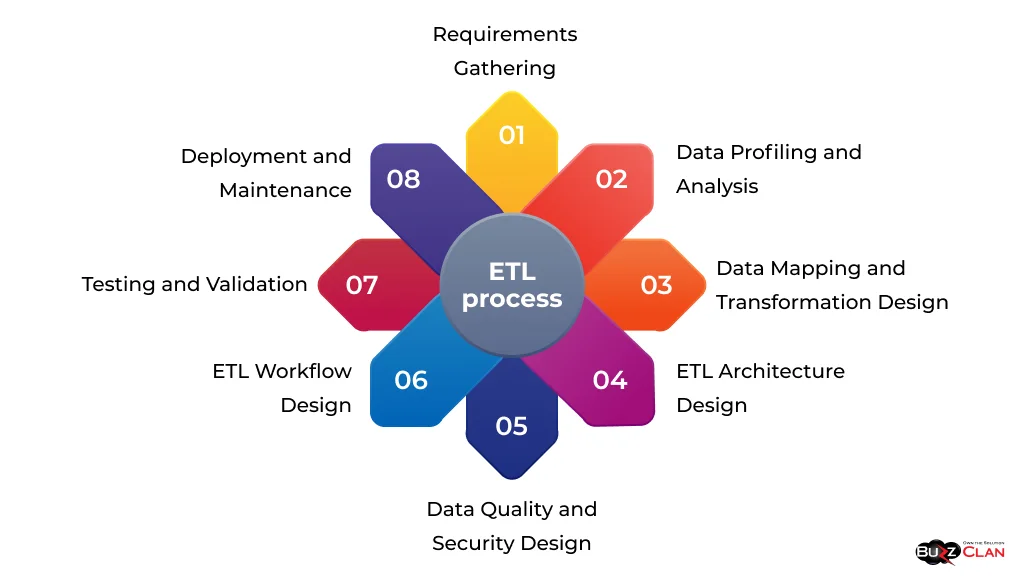

The key steps in designing an ETL process include:

- Understand the business requirements, data sources, and target systems involved in the ETL process.

- Identify the data integration scenarios, such as data consolidation, synchronization, or migration.

- Define the data quality and data governance requirements.

- Analyze the source systems to understand the data structure, quality, and relationships.

- Identify data inconsistencies, missing values, or anomalies that must be addressed during the ETL process.

- Determine the data volume, growth rate, and data latency requirements.

- Define the data mapping rules between the source and target systems.

- Design the data transformation logic, including data cleansing, validation, enrichment, and aggregation.

- Identify the data dependencies and the order of transformations.

- Select the appropriate ETL tools and technologies based on the project requirements and organizational standards.

- Design the ETL architecture, including the data flow, staging, and loading strategies.

- Consider scalability, performance, and fault-tolerance aspects of the ETL architecture.

- Incorporate data quality checks and validations at various stages of the ETL process.

- Define data cleansing and standardization rules to ensure data consistency and accuracy.

- Implement data security measures, such as data encryption, access controls, and masking, to protect sensitive data during the ETL process.

- Design the ETL workflow, including the sequence of tasks, dependencies, and error-handling mechanisms.

- Define the scheduling and frequency of ETL jobs based on data freshness requirements and system load.

- Incorporate monitoring and logging mechanisms to track the progress and performance of ETL jobs.

- Develop test cases and test scenarios to validate the ETL process end-to-end.

- Perform unit, integration, and user acceptance testing to ensure data accuracy and consistency.

- Validate the ETL process against the business requirements and data quality standards.

- Deploy the ETL process to the production environment following the organization's deployment procedures.

- Establish maintenance and support processes to monitor and troubleshoot ETL jobs.

- Implement version control and documentation practices to manage changes and ensure maintainability of the ETL process.

When designing an ETL process, it's essential to consider best practices for managing data quality and security:

- Data Profiling: Perform thorough data profiling to understand the source data's structure, quality, and relationships. This helps identify data quality issues and design appropriate data cleansing and transformation rules.

- Data Validation: Implement data validation checks at various stages of the ETL process to ensure data accuracy and consistency. This includes validating data types, formats, ranges, and business rules.

- Data Cleansing: Apply data cleansing techniques to handle missing values, remove duplicates, and standardize data formats. Establish data cleansing rules and incorporate them into the ETL process.

- Data Security: Implement data security measures to protect sensitive data during the ETL process. This includes data encryption, access controls, and data masking techniques to ensure data confidentiality and compliance with security regulations.

- Data Lineage and Auditing: Maintain data lineage information to track data flow from source to target systems. Implement auditing mechanisms to capture data changes and track data transformations for compliance and troubleshooting purposes.
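Data profiling, the first practice above, can start very simply. The following sketch computes a few basic statistics per column for rows represented as dictionaries. The choice of statistics, and treating both `None` and the empty string as missing, are assumptions made for the example.

```python
from collections import Counter

def profile(rows, columns):
    """Return per-column null counts, distinct counts, and most common value."""
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat None and empty strings as missing (an assumption for this sketch).
        non_null = [v for v in values if v not in (None, "")]
        counts = Counter(non_null)
        report[col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(counts),
            "most_common": counts.most_common(1)[0][0] if counts else None,
        }
    return report
```

A report like this helps decide which cleansing and validation rules are worth writing before any transformation logic is designed.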

Automating ETL processes is crucial for efficiency and reliability. Automation can be achieved through various means:

- Scheduling: Utilize ETL tool scheduling capabilities or external schedulers to automate the execution of ETL jobs based on predefined schedules and dependencies.

- Orchestration: Implement ETL orchestration tools or frameworks to manage the flow and dependencies of ETL tasks, ensure proper execution order, and gracefully handle failures.

- Parameterization: Parameterize ETL jobs to allow for dynamic configuration and reusability. This enables running ETL jobs with different input parameters and reduces manual intervention.

- Error Handling and Notifications: Incorporate robust error-handling mechanisms to capture and handle exceptions in the ETL process. Implement notification systems to alert stakeholders in case of failures or anomalies.

- Monitoring and Logging: Implement monitoring and logging mechanisms to track the progress, performance, and health of ETL jobs. This helps identify bottlenecks, optimize performance, and troubleshoot issues.
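Parameterization, error handling, and logging can be combined in a small job runner like the one sketched below. The `run_job` function and its arguments are hypothetical; orchestration tools provide richer versions of the same ideas, so this is only meant to show the shape of the logic.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")

def run_job(task, params, max_retries=3, delay_seconds=0.0):
    """Run a parameterized ETL task, retrying on failure and logging each attempt."""
    for attempt in range(1, max_retries + 1):
        try:
            result = task(**params)
            log.info("job succeeded on attempt %d with params %s", attempt, params)
            return result
        except Exception as exc:
            log.warning("attempt %d failed: %s", attempt, exc)
            if attempt == max_retries:
                log.error("job failed after %d attempts", max_retries)
                raise  # a real pipeline would also notify stakeholders here
            time.sleep(delay_seconds)
```

The same task can then be run for different inputs, for example `run_job(load_orders, {"source": "crm", "batch_date": "2024-04-24"})`, where `load_orders` is whatever callable implements the job.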

By following a structured approach to designing an ETL process and incorporating best practices for data quality, security, and automation, organizations can ensure a reliable and efficient data integration pipeline that meets business requirements and enables data-driven decision-making.

ETL Testing and Quality Assurance

ETL testing and quality assurance are critical components of the ETL development lifecycle. They ensure the ETL process functions as expected, maintains data integrity, and produces accurate and reliable results. Testing helps identify and resolve issues early in the development process, reducing the risk of data inconsistencies and errors in the target system.

The importance of testing in ETL processes cannot be overstated. ETL testing helps:

- Validate data accuracy: Testing ensures that the data extracted from source systems is accurately transformed and loaded into the target system, maintaining data integrity and consistency.

- Identify data quality issues: Testing helps uncover data quality issues, such as missing values, duplicates, or data inconsistencies, allowing for timely resolution and data cleansing.

- Verify business rules: Testing validates that the ETL process adheres to the defined business rules and transformation logic, ensuring that the data meets the expected business requirements.

- Ensure performance and scalability: Testing helps assess the ETL process's performance and scalability, identifying bottlenecks and optimizing it for efficient data processing.

- Minimize risks and errors: Testing helps identify and mitigate risks and errors in the ETL process, reducing the likelihood of data discrepancies and ensuring the reliability of the data in the target system.

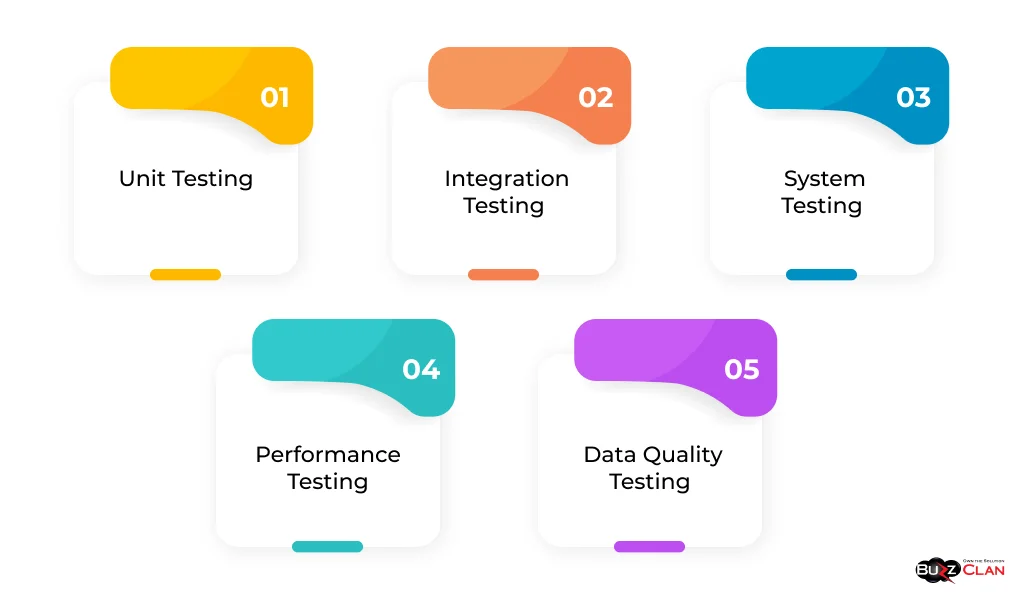

Different types of ETL tests should be performed to ensure comprehensive coverage:

- Unit Testing: Unit testing focuses on testing individual components or modules of the ETL process in isolation. It validates the functionality and behavior of specific transformations, mappings, or data cleansing routines.

- Integration Testing: Integration testing verifies the interaction and compatibility between different components of the ETL process. It ensures that data flows correctly from source to target systems and that the ETL process functions as a cohesive unit.

- System Testing: System testing validates the end-to-end functionality of the ETL process, including data extraction, transformation, and loading. It ensures that the ETL process meets the overall business requirements and produces the expected results.

- Performance Testing: Performance testing assesses the efficiency, scalability, and response time of the ETL process under different load conditions. It helps identify performance bottlenecks and tune the process for efficient data processing.

- Data Quality Testing: Data quality testing focuses on validating the accuracy, completeness, and consistency of the data processed by the ETL pipeline. It involves data profiling, validation, and reconciliation techniques to meet data quality standards.
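As an illustration of unit testing, the sketch below tests a single transformation in isolation using Python's built-in `unittest` module. The `normalize_phone` function and its 10-digit rule are hypothetical; the point is the pattern of one small transformation with one test per expected behavior.

```python
import unittest

def normalize_phone(raw):
    """Hypothetical transformation: keep digits only and require exactly 10."""
    digits = "".join(ch for ch in raw if ch.isdigit())
    if len(digits) != 10:
        raise ValueError(f"expected 10 digits, got {len(digits)}")
    return digits

class NormalizePhoneTest(unittest.TestCase):
    def test_strips_formatting(self):
        self.assertEqual(normalize_phone("(555) 123-4567"), "5551234567")

    def test_rejects_short_numbers(self):
        with self.assertRaises(ValueError):
            normalize_phone("123-4567")
```

Saved in a file, these tests can be run with `python -m unittest`, which makes them easy to wire into an automated build.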

To facilitate ETL testing, various tools and frameworks are available:

- ETL Testing Tools: Specialized ETL testing tools, such as QuerySurge, Informatica Data Validation, or IBM InfoSphere Information Analyzer, provide automated data validation, data comparison, and data profiling capabilities.

- Test Automation Frameworks: Test automation frameworks, such as Selenium, Cucumber, or Robot Framework, can automate ETL testing scenarios and reduce manual testing efforts.

- Data Quality Tools: Data quality tools, such as Talend Data Quality, SAP Data Services, or Trillium Software, offer data profiling, cleansing, and validation functionalities to ensure data quality throughout the ETL process.

- Scripting Languages: Scripting languages, such as Python, Perl, or Shell scripting, can be used to write custom testing scripts and automate ETL testing tasks.

Best practices for ETL testing include:

- Establishing a comprehensive test strategy that covers all aspects of the ETL process, including data extraction, transformation, loading, and data quality.

- Defining clear test cases and scenarios based on business requirements and data quality standards.

- Utilizing test automation wherever possible to reduce manual testing efforts and ensure consistent and repeatable test execution.

- Performing regular data reconciliation and validation to identify and resolve discrepancies between source and target systems.

- Implementing a robust defect tracking and resolution process to capture and resolve issues identified during ETL testing.
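Data reconciliation, mentioned above, usually starts with cheap checks such as comparing row counts and checksums, then drills into differences only when those disagree. The sketch below assumes both sides fit in memory as lists of tuples; the function names and the hashing scheme are illustrative choices, not a standard.

```python
import hashlib

def fingerprint(rows):
    """Return (row_count, order-independent checksum) for a collection of rows."""
    digest = 0
    for row in rows:
        h = hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()
        # Summing hashes makes the checksum independent of row order.
        digest = (digest + int(h, 16)) % (2 ** 256)
    return len(rows), digest

def reconcile(source_rows, target_rows):
    """Compare source and target and report missing or unexpected rows."""
    source = set(map(tuple, source_rows))
    target = set(map(tuple, target_rows))
    return {
        "match": fingerprint(source_rows) == fingerprint(target_rows),
        "missing_in_target": sorted(source - target),
        "unexpected_in_target": sorted(target - source),
    }
```

For large tables the same idea is typically pushed down into SQL, computing counts and aggregates per partition on each side and comparing only the summaries.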

By incorporating thorough testing and quality assurance practices into the ETL development lifecycle, organizations can ensure the reliability, accuracy, and performance of their ETL processes, mitigating risks and delivering high-quality data to the target systems.

Advanced ETL Processes

As data landscapes evolve and business requirements become more complex, ETL processes have adapted to handle advanced data integration scenarios. In this section, we will explore some of the advanced ETL processes that have emerged to meet the demands of modern data environments.

Real-time ETL Processing:

Traditional ETL processes typically operate in batch mode, processing data at regular intervals. However, real-time ETL processing has gained prominence with the increasing need for up-to-date data and near-instant insights. Real-time ETL enables continuous data integration, where data is extracted, transformed, and loaded as soon as it becomes available.

Real-time ETL is achieved through various technologies and architectures, such as:

- Change Data Capture (CDC): CDC techniques capture data changes in source systems and propagate them to the target systems in real time, ensuring data synchronization and minimizing latency.

- Streaming ETL: Streaming ETL leverages data streaming platforms like Apache Kafka or Apache Flink to process and transform data in real time as it flows through the data pipeline.

- Micro-batch ETL: Micro-batch ETL breaks down the data processing into smaller, more frequent batches, reducing the latency between data extraction and availability in the target system.
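Two of these ideas can be sketched in plain Python. The first function is a simplified, snapshot-based stand-in for CDC: it diffs two snapshots keyed by primary key, whereas production CDC tools typically read the database's transaction log instead. The second groups a stream of events into micro-batches. Both function names and the event format are assumptions for the example.

```python
def capture_changes(previous, current):
    """Diff two snapshots keyed by primary key and emit change events."""
    events = []
    for key, row in current.items():
        if key not in previous:
            events.append(("insert", key, row))
        elif previous[key] != row:
            events.append(("update", key, row))
    for key in previous.keys() - current.keys():
        events.append(("delete", key, None))
    return events

def micro_batches(events, batch_size):
    """Group a stream of events into small batches for frequent loading."""
    batch = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # flush the final partial batch
```

Because `micro_batches` is a generator, it works on any iterable of events, including one that is still being produced, and hands each batch to the loader as soon as it fills.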

Real-time ETL lets organizations make data-driven decisions based on the most up-to-date information, supporting real-time analytics, operational intelligence, and timely business actions.

AI/ML Integration in ETL:

Artificial Intelligence (AI) and Machine Learning (ML) techniques are increasingly incorporated into ETL processes to enhance data integration, quality, and automation. AI and ML can be applied in various aspects of ETL, such as:

- Data Quality and Anomaly Detection: AI and ML algorithms can be trained to identify anomalies, outliers, and data quality issues. By analyzing historical data patterns and learning from labeled examples, these algorithms can automatically detect and flag data inconsistencies, reducing manual effort in data cleansing.

- Intelligent Data Mapping: AI and ML can assist in automating data mapping tasks by learning from existing mappings and suggesting appropriate transformations based on data patterns and relationships. This can significantly reduce the time and effort required for manual data mapping.

- Predictive Data Transformation: ML models can be trained to predict missing values, impute data, or generate derived attributes based on historical data patterns. This enables more accurate and complete data transformation, enhancing the data quality in the target system.

- Autonomous ETL Optimization: AI and ML can optimize ETL workflows by analyzing job execution logs, resource utilization, and performance metrics. ML algorithms can suggest optimal scheduling, resource allocation, and data partitioning strategies to improve ETL efficiency and performance.
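To show where such logic would sit in a pipeline, the sketch below uses two simple statistical rules as stand-ins for trained models: a z-score check for anomaly detection and median imputation for missing values. These are deliberately not machine learning; a real system might replace either function with a learned model. The threshold of 2.0 is an arbitrary assumption.

```python
import statistics

def flag_anomalies(values, threshold=2.0):
    """Return the values whose z-score magnitude exceeds the threshold."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical, nothing to flag
    return [v for v in values if abs(v - mean) / stdev > threshold]

def impute_missing(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]
```

For example, in a series of readings near 100 with a single value of 500, only the 500 is flagged. Note that z-scores are sensitive to the outliers they are trying to detect, which is one reason more robust or learned methods are often preferred in practice.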

By incorporating AI and ML into ETL processes, organizations can automate complex data integration tasks, improve data quality, and gain valuable insights from their data assets.

Big Data ETL:

Big data introduces new challenges and opportunities for ETL processes. With the exponential growth of data volume, variety, and velocity, traditional ETL approaches may struggle to handle the scale and complexity of big data environments.

ETL processes in big data environments often leverage distributed computing frameworks, such as Apache Hadoop or Apache Spark, to address these challenges. These frameworks enable parallel processing of large datasets across clusters of computers, providing scalability and fault tolerance.

In big data ETL, data is typically extracted from various sources, including structured databases, semi-structured log files, and unstructured data from social media or IoT devices. The extracted data is then loaded into distributed storage systems, such as Hadoop Distributed File System (HDFS) or cloud storage, for further processing.
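
To make the extraction step more concrete, here is a minimal sketch that pulls records from one structured source (a CSV export) and one semi-structured source (JSON log lines) into a common shape before staging. The field names (`user_id`, `uid`, `action`) and the sample data are hypothetical, and a real pipeline would write the staged records to distributed storage rather than a Python list.

```python
import csv
import io
import json


def extract_csv(text):
    """Yield one normalized record per row of a CSV export with a header line."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"source": "csv", "user_id": int(row["user_id"]), "event": row["event"]}


def extract_json_lines(text):
    """Yield one normalized record per JSON log line, skipping malformed lines."""
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # a real pipeline would route this line to a reject file
        yield {"source": "jsonl", "user_id": int(record["uid"]), "event": record["action"]}


# Hypothetical inputs standing in for a database export and an application log.
csv_export = "user_id,event\n1,login\n2,purchase\n"
log_lines = '{"uid": 3, "action": "login"}\nnot json\n{"uid": 1, "action": "logout"}\n'

staged = list(extract_csv(csv_export)) + list(extract_json_lines(log_lines))
print(len(staged))
```

Normalizing field names at extraction time keeps the later transformation logic independent of where each record came from.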

Transformation in big data ETL often involves applying complex business logic, data cleansing, and data aggregation using distributed processing frameworks like Apache Spark or Hive. These frameworks provide high-level APIs and SQL-like query languages to process and transform data at scale.
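
The pattern behind this kind of distributed transformation can be sketched on a single machine: each partition is cleansed and aggregated independently, and the partial results are then merged. The example below uses a thread pool purely as a stand-in for cluster workers. It does not use the Spark or Hive APIs, and the record fields (`region`, `amount`) and function names are assumptions made for illustration.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def clean(record):
    """Normalize one raw record; return None if it fails cleansing."""
    region = record.get("region", "").strip().upper()
    amount = record.get("amount")
    if not region or amount is None or amount < 0:
        return None
    return region, amount


def aggregate_partition(partition):
    """Map step: cleanse one partition and sum amounts per region."""
    totals = Counter()
    for record in partition:
        cleaned = clean(record)
        if cleaned is not None:
            region, amount = cleaned
            totals[region] += amount
    return totals


def aggregate(partitions, workers=4):
    """Reduce step: merge the per-partition totals into one result."""
    combined = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for partial in pool.map(aggregate_partition, partitions):
            combined.update(partial)  # Counter.update adds values key by key
    return dict(combined)


# Two hypothetical partitions, including records that should be rejected.
partitions = [
    [{"region": " emea ", "amount": 120}, {"region": "apac", "amount": 80}],
    [{"region": "EMEA", "amount": 30}, {"region": "", "amount": 10},
     {"region": "apac", "amount": -5}],
]
regional_totals = aggregate(partitions)
print(regional_totals)
```

Because each partition produces a small partial result, only those summaries need to be combined at the end, which is what lets this style of aggregation scale across many machines.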

Loading the transformed data into target systems, such as data warehouses or data lakes, may involve bulk loading techniques or incremental updates to efficiently handle the large data volumes.
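
The difference between a bulk load and an incremental update can be sketched with Python's built-in `sqlite3` module as a small stand-in for a warehouse. The `customers` table and its columns are hypothetical, and the upsert syntax shown is SQLite's; other target systems use their own equivalents, such as `MERGE`.

```python
import sqlite3


def bulk_load(conn, rows):
    """Initial load: insert many rows in a single transaction."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT INTO customers (id, name, updated_at) VALUES (?, ?, ?)", rows
        )


def incremental_load(conn, changed_rows):
    """Incremental load: insert new rows and update rows that already exist."""
    with conn:
        conn.executemany(
            """
            INSERT INTO customers (id, name, updated_at) VALUES (?, ?, ?)
            ON CONFLICT(id) DO UPDATE SET
                name = excluded.name,
                updated_at = excluded.updated_at
            """,
            changed_rows,
        )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
)
bulk_load(conn, [(1, "Ada", "2024-01-01"), (2, "Grace", "2024-01-01")])
incremental_load(conn, [(2, "Grace Hopper", "2024-02-01"), (3, "Edsger", "2024-02-01")])
final_rows = conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
print(final_rows)
```

The incremental path touches only the rows that changed since the last run, which is why it tends to be preferred once the initial history has been loaded.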

Big data ETL also requires considerations for data governance, data security, and data lineage in distributed environments. Tools and frameworks like Apache Atlas and Apache Ranger provide metadata management, data lineage tracking, and fine-grained access control for big data ecosystems.

By leveraging advanced ETL processes, such as real-time ETL, AI/ML integration, and big data ETL, organizations can tackle the challenges of modern data landscapes and derive valuable insights from their data assets. These advanced techniques enable organizations to process data at scale, ensure data quality, and respond quickly to changing business requirements.

ETL in the Cloud

Cloud computing has revolutionized how organizations approach data management and processing, and ETL is no exception. The rise of cloud-based ETL services has brought new opportunities and benefits to organizations looking to streamline their data integration processes.

Cloud-based ETL Services:

Cloud providers offer managed ETL services that simplify the deployment, management, and scaling of ETL processes. These services provide a fully managed infrastructure, eliminating the need for organizations to maintain on-premises hardware and software.

Some popular cloud-based ETL services include:

- AWS Glue: AWS Glue is a fully managed ETL service from Amazon Web Services (AWS) that simplifies extracting, transforming, and loading data. It provides a serverless environment for running ETL jobs, along with a built-in data catalog and job scheduling capabilities.

- Azure Data Factory: Azure Data Factory is a cloud-based data integration service that allows you to create, schedule, and orchestrate ETL workflows. It supports many data sources and provides a visual designer for building data pipelines.

- Google Cloud Dataflow: Google Cloud Dataflow is a fully managed service for executing Apache Beam pipelines. It enables batch and streaming data processing with automatic scaling and fault-tolerance capabilities.

Cloud-based ETL services offer several advantages, such as scalability, flexibility, and cost-efficiency. They allow organizations to provision resources quickly, scale ETL processes based on demand, and pay only for the resources consumed.

While the core concepts of ETL remain the same, there are some key differences between on-premises and cloud-based ETL:

- Infrastructure Management: With on-premises ETL, organizations maintain the hardware and software infrastructure themselves. With cloud-based ETL, the cloud provider manages the infrastructure, including provisioning, scaling, and maintenance.

- Scalability and Elasticity: Cloud-based ETL services offer elastic scalability, allowing organizations to scale up or down based on data volume and processing requirements. On-premises ETL may have limitations in terms of scalability due to fixed hardware resources.

- Cost Model: On-premises ETL involves upfront investment in hardware, software licenses, and maintenance costs. Cloud-based ETL follows a pay-as-you-go model, where organizations pay for the resources consumed, providing cost flexibility and potential cost savings.

- Integration with Cloud Ecosystems: Cloud-based ETL services seamlessly integrate with other cloud services, such as cloud storage, data warehouses, and analytics platforms. This enables organizations to build end-to-end data pipelines within the cloud ecosystem.

- Data Security and Compliance: Cloud providers offer robust security measures and compliance certifications to ensure data protection and meet regulatory requirements. However, organizations must carefully evaluate and configure security settings to align with their security and compliance needs.

The rise of cloud-based ETL services has significantly impacted the data landscape. Cloud ETL has democratized data integration, making it more accessible and cost-effective for organizations of all sizes.

Cloud ETL has also accelerated the adoption of modern data architectures, such as data lakes and data warehouses in the cloud. Services like Amazon S3, Azure Data Lake Storage, and Google Cloud Storage provide scalable and cost-effective storage options for raw and processed data.

Cloud ETL has also enabled organizations to leverage the advanced analytics and machine learning capabilities of cloud platforms. Services like Amazon SageMaker, Azure Machine Learning, and Google Cloud's Vertex AI (the successor to Google AI Platform) allow organizations to build and deploy machine learning models on top of their ETL pipelines.

Furthermore, cloud ETL has fostered the growth of data-driven cultures by enabling self-service data integration and analytics. Business users can easily access and integrate data using cloud-based ETL tools, empowering them to make data-driven decisions without heavy reliance on IT teams.

As organizations increasingly adopt cloud-based ETL solutions, factors such as data security, compliance, and vendor lock-in must be considered. Proper planning, architecture design, and governance practices are essential to ensuring successful and secure ETL processes in the cloud.

Best Practices and Future Trends

To ensure the success and effectiveness of ETL processes, it is crucial to adhere to best practices and stay informed about future trends in the field. This section will discuss some key best practices for ETL development and deployment and explore the future trends shaping the ETL landscape.

Best Practices for ETL Development and Deployment:

- Modular and Reusable Design: Develop ETL processes using a modular and reusable approach. Break down the ETL workflow into smaller, independent components that can be easily maintained, tested, and reused across different projects. This promotes code reusability, reduces development efforts, and facilitates easier maintenance.

- Data Quality and Validation: Implement robust data quality checks and validation mechanisms throughout the ETL process. Establish data quality rules, such as data type checks, range checks, and business rule validations, to ensure the accuracy and integrity of the data. Incorporate data profiling and cleansing techniques to identify and handle anomalies and inconsistencies.

- Error Handling and Logging: Implement comprehensive error handling and logging mechanisms in the ETL process. Capture and handle exceptions gracefully, providing meaningful error messages and logging relevant information for troubleshooting. Implement retry mechanisms for transient errors and define escalation procedures for critical failures.

- Performance Optimization: Optimize the performance of ETL processes by applying techniques such as parallel processing, data partitioning, and query optimization. Analyze the performance bottlenecks and tune the ETL workflows to minimize data processing time and resource utilization. Use techniques like indexing, caching, and data compression to improve query performance and reduce storage costs.

- Security and Compliance: Ensure the security and compliance of ETL processes by implementing appropriate security measures and adhering to relevant regulations. Encrypt sensitive data at rest and in transit, apply access controls and authentication mechanisms, and audit data access and modifications. Comply with data privacy regulations, such as GDPR or HIPAA, by implementing data masking, data anonymization, and data retention policies.

- Documentation and Version Control: Maintain comprehensive documentation for ETL processes, including data flow diagrams, transformation logic, and data lineage. Use version control systems to track changes, collaborate effectively, and maintain a history of ETL code and configurations. Document the dependencies, assumptions, and business rules to facilitate knowledge transfer and maintainability.

- Monitoring and Alerting: Implement robust monitoring and alerting mechanisms to identify and address issues in ETL processes proactively. Monitor key performance indicators (KPIs) such as data processing time, data quality metrics, and resource utilization. Set up alerts and notifications to promptly detect and respond to anomalies, errors, or performance degradation.

- Continuous Integration and Deployment (CI/CD): Adopt CI/CD practices to automate the build, testing, and deployment of ETL processes. Integrate ETL code into a version control system, automate the execution of unit tests and integration tests, and establish automated deployment pipelines. This ensures consistent and reliable deployments, reduces manual errors, and enables faster iteration and bug fixes.
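
Several of the practices above (modular design, data quality validation, and error handling with retries and logging) can be combined in one small sketch. Everything here is illustrative: `TransientError`, `with_retry`, `validate`, `run_pipeline`, and the validation rules are hypothetical names and choices, not part of any particular ETL tool.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")


class TransientError(Exception):
    """Raised by a step for failures worth retrying (e.g. a timeout)."""


def with_retry(step, attempts=3, base_delay=0.01):
    """Wrap a step so transient failures are retried with exponential backoff."""
    def wrapped(*args):
        for attempt in range(1, attempts + 1):
            try:
                return step(*args)
            except TransientError as exc:
                log.warning("%s failed (attempt %d/%d): %s",
                            step.__name__, attempt, attempts, exc)
                if attempt == attempts:
                    raise  # escalate once the retry budget is exhausted
                time.sleep(base_delay * 2 ** (attempt - 1))
    return wrapped


def validate(row):
    """Return a list of rule violations for one row (empty means valid)."""
    errors = []
    if not isinstance(row.get("id"), int):
        errors.append("id must be an integer")   # data type check
    age = row.get("age")
    if not isinstance(age, int) or not 0 <= age <= 130:
        errors.append("age out of range")        # range check
    return errors


def run_pipeline(extract, transform, load):
    """Run three independent steps, diverting invalid rows to a reject list."""
    valid, rejected = [], []
    for row in with_retry(extract)():
        problems = validate(row)
        if problems:
            rejected.append((row, problems))
        else:
            valid.append(transform(row))
    load(valid)
    log.info("loaded=%d rejected=%d", len(valid), len(rejected))
    return valid, rejected


# Hypothetical source that times out once before succeeding.
calls = {"n": 0}

def flaky_extract():
    calls["n"] += 1
    if calls["n"] == 1:
        raise TransientError("source timed out")
    return [{"id": 1, "age": 34}, {"id": 2, "age": 240}, {"id": "x", "age": 20}]

target = []
valid, rejected = run_pipeline(
    flaky_extract, lambda r: {**r, "adult": r["age"] >= 18}, target.extend
)
```

Because each step is passed in as a plain function, it can be unit tested in isolation and swapped out without touching the retry or validation logic, which is also what makes the pipeline easy to wire into a CI/CD test suite.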

Future Trends in ETL

- Streaming ETL: The increasing demand for real-time data processing and analytics has led to the rise of streaming ETL, which integrates and transforms data continuously as it arrives rather than in scheduled batches. Technologies like Apache Kafka, Apache Flink, and Amazon Kinesis are gaining popularity for building these pipelines. Because data is processed in near real time, organizations can make decisions faster and act on current information.

- Data Virtualization: Data virtualization is an emerging trend that allows organizations to access and integrate data from multiple sources without physically moving or copying the data. It provides a virtual layer that abstracts the complexity of underlying data sources and presents a unified view of the data. Data virtualization enables real-time data access, reduces data duplication, and simplifies data management. It complements traditional ETL processes by providing a flexible and agile approach to data integration.

- Serverless ETL: Serverless computing is gaining traction in the ETL domain, offering a scalable and cost-effective approach to data processing. Serverless ETL leverages cloud-based services, such as AWS Lambda, Azure Functions, or Google Cloud Functions, to execute ETL tasks without the need to manage the underlying infrastructure. Serverless ETL allows organizations to focus on writing ETL logic while the cloud provider handles resource scaling, provisioning, and management.

- DataOps: DataOps is an emerging practice that combines principles from DevOps, Agile, and lean manufacturing to improve the speed, quality, and collaboration in data management and analytics. DataOps emphasizes automation, continuous integration, and continuous delivery of data pipelines. It involves close collaboration between data engineers, data scientists, and business stakeholders to deliver data products and insights rapidly and iteratively. DataOps practices can streamline ETL processes, improve data quality, and accelerate data-driven decision-making.
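
To give a feel for how streaming ETL differs from batch processing, the sketch below aggregates events into fixed (tumbling) time windows as they arrive, emitting each window as soon as it closes. It uses a plain Python generator instead of a broker such as Kafka or Kinesis, assumes events arrive in timestamp order, and all names and sample events are hypothetical.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds=60):
    """Yield (window_start, {key: count}) each time a window closes.

    Assumes events arrive ordered by timestamp, which real streams
    do not guarantee; production systems handle late data explicitly.
    """
    current_start = None
    counts = defaultdict(int)
    for timestamp, key in events:
        window_start = timestamp - timestamp % window_seconds
        if current_start is None:
            current_start = window_start
        elif window_start != current_start:
            yield current_start, dict(counts)  # emit the finished window
            current_start, counts = window_start, defaultdict(int)
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)      # flush the last open window


def event_stream():
    """Hypothetical source; a real one would read from a message broker."""
    yield from [(0, "click"), (15, "view"), (59, "click"), (61, "click"), (130, "view")]


windows = list(tumbling_window_counts(event_stream()))
for window_start, counts in windows:
    print(window_start, counts)
```

The key design point is that results are produced incrementally while the stream is still being read, instead of after the whole dataset has been collected.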

Preparing for the Future as an ETL Developer:

To stay competitive and adapt to the evolving ETL landscape, ETL developers should:

- Continuously Learn and Upskill: Stay updated with the latest technologies, tools, and best practices in ETL development. Invest time learning new programming languages, data integration frameworks, and cloud-based ETL services. Attend conferences, webinars, and workshops to gain insights from industry experts and expand your knowledge.

- Embrace Cloud and Big Data Technologies: Gain proficiency in cloud-based ETL services and big data technologies. Understand the capabilities and best practices of ETL in cloud environments such as AWS, Azure, or Google Cloud. Familiarize yourself with big data processing frameworks like Apache Spark, Hadoop, and Hive to handle large-scale data integration challenges.

- Focus on Data Quality and Governance: Develop a strong understanding of data quality and governance principles. Learn techniques for data profiling, data cleansing, and data validation. Understand the importance of data lineage, metadata management, and data security in ETL processes. Stay informed about data privacy regulations and best practices for compliance.

- Collaborate and Communicate Effectively: Foster strong collaboration and communication skills to work effectively with cross-functional teams, including data architects, data analysts, and business stakeholders. Develop the ability to translate business requirements into technical solutions and communicate complex ETL concepts to non-technical audiences. Embrace agile methodologies and be open to feedback and iterative development.

Conclusion

We have seen how ETL plays a critical role in data integration, enabling organizations to consolidate data from diverse sources, transform it into a consistent and usable format, and load it into target systems for analysis and reporting.

We delved into the three core stages of ETL - extraction, transformation, and loading - and discussed the challenges, techniques, and considerations associated with each stage. We highlighted the importance of data quality, validation, and error handling in ensuring data accuracy and reliability.

We explored the various ETL tools and technologies available, both commercial and open-source, and compared their features and capabilities. We also examined the differences between ETL and ELT (Extract, Load, Transform) approaches and their suitability for data integration scenarios.

We discussed the key steps in designing an effective ETL process, emphasizing the importance of requirements gathering, data profiling, data mapping, and architectural considerations. We also covered best practices for managing data quality, ensuring data security, and automating ETL workflows.

Testing and quality assurance were highlighted as crucial components of the ETL development lifecycle. We explored different types of ETL tests, such as unit testing, integration testing, and data quality testing, and discussed the tools and frameworks available to facilitate thorough testing practices.

We also delved into advanced ETL processes, such as real-time ETL, AI and machine learning integration, and ETL in big data environments. These advancements showcase how ETL processes are evolving to meet the demands of modern data landscapes and enable organizations to derive valuable insights from their data assets.

Another key topic we explored was the rise of cloud computing and its impact on ETL. We discussed the benefits and differences of cloud-based ETL services compared to traditional on-premises ETL and how the cloud transforms the data integration landscape.

Looking toward the future, we discussed best practices for ETL development and deployment, emphasizing the importance of modular design, performance optimization, security, and documentation. We also explored emerging trends, such as streaming ETL, data virtualization, serverless ETL, and DataOps, which are shaping the future of data integration.

As organizations continue to rely on data-driven decision-making, the importance of robust and efficient ETL processes cannot be overstated. ETL enables organizations to harness the power of their data, gain valuable insights, and drive business growth. Organizations can build scalable and reliable data integration pipelines by understanding the fundamentals of ETL, leveraging the right tools and technologies, and adopting best practices.

For ETL developers and professionals, staying updated with the latest trends, technologies, and best practices is crucial. Continuous learning, upskilling, and adapting to the evolving data landscape will be key to success in ETL and data integration.

As we conclude this comprehensive exploration of ETL, we encourage readers to explore the topics discussed further, experiment with different ETL tools and techniques, and apply the best practices in their own data integration projects. By embracing the power of ETL and staying at the forefront of data integration advancements, organizations can unlock the full potential of their data and drive meaningful business outcomes.
