Data Mesh Architecture: A Comprehensive Guide to Modern Data Architecture Transformation [Bonus: AWS Specifics]

Priya Patel

May 16, 2025

In today’s data-driven enterprise landscape, organizations face unprecedented challenges in managing, scaling, and deriving value from their data assets. While effective at smaller scales, traditional centralized data architectures often struggle to keep pace with modern data complexity and organizational demands. Data Mesh Architecture emerges as a transformative approach that fundamentally reimagines how enterprises organize, manage, and utilize their data assets.

Introduction

Data Mesh represents a paradigm shift in data architecture, moving from centralized, monolithic approaches to a distributed, domain-oriented model. This architectural pattern, first introduced by Zhamak Dehghani, addresses the limitations of traditional data platforms that struggle to scale across large organizations with diverse data domains and use cases.

The evolution toward Data Mesh architecture stems from several critical challenges in modern data environments:

- Exponential growth in data volume and variety: The amount of data generated by organizations is growing exponentially, and the variety of data formats and sources is also increasing. Traditional data architectures struggle to keep up because they were often not designed to handle such large volumes and diverse data types.

- Increasing complexity of data pipelines: Data pipelines are the processes that extract, transform, and load data from its source systems into a usable format. As data volumes and variety increase, so does the complexity of data pipelines. This complexity can make managing and maintaining data pipelines difficult, leading to errors and data quality issues.

- Bottlenecks in centralized data teams: In traditional data architectures, responsibility for data is often centralized in a single team that manages all data-related tasks, from data acquisition to data analysis. This creates a bottleneck, as the centralized team can become overwhelmed with requests from different business units.

- Disconnect between domain expertise and data management: In traditional data architectures, domain experts and data management professionals are often disconnected. This disconnect can lead to data quality issues, as domain experts may not be aware of the data management requirements, and data management professionals may not know the data’s business context.

- Limited scalability of monolithic architectures: Monolithic architectures are not designed to scale easily. As an organization’s data needs grow, they can become difficult to manage and maintain, leading to performance issues and outages.

Organizations adopting Data Mesh seek to transform their data architecture from a liability into a strategic asset, enabling faster innovation and more efficient data utilization across the enterprise. By adopting a distributed, domain-oriented approach, Data Mesh can help organizations address the challenges of modern data environments and unlock the full potential of their data.

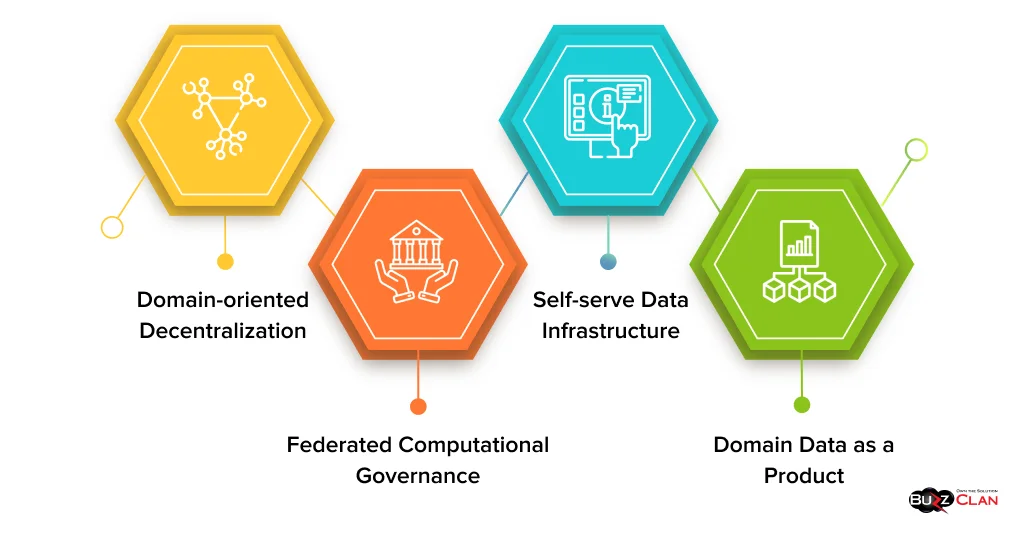

Core Principles of Data Mesh Architecture

Data Mesh architecture stands on four fundamental principles that work in concert to create a scalable, maintainable, and value-driven data ecosystem:

Domain-oriented Decentralization

Microservices architectures decentralize operational capabilities, allowing different teams to own and manage their services. Data Mesh extends this concept by decentralizing data ownership and architecture to domain teams. This means that each domain team is responsible for the data relevant to its domain.

Responsibilities of Domain Teams in a Data Mesh

In a Data Mesh, domain teams are responsible for the following:

- Data product creation and maintenance: Domain teams are accountable for creating and maintaining the data products used by their applications. This includes defining the data models, collecting the data, and ensuring that the data is accurate and complete.

- Quality assurance and validation: Domain teams ensure the quality of their data, which includes validating it for accuracy, completeness, and consistency.

- Domain-specific data transformations: Domain teams are responsible for performing any necessary data transformations to make the data usable by their applications. This may include filtering, sorting, aggregating, or joining data from different sources.

- Local governance implementation: Domain teams are responsible for implementing local governance policies for their data. This includes defining who can access the data, how it can be used, and how it should be protected.

- Service-level objectives (SLOs) management: Domain teams manage the SLOs for their data products. This includes setting targets for data availability, latency, and throughput and monitoring the performance of their data products against these targets.
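As a rough illustration of the SLO responsibility above, a domain team might compare measured values against its targets along these lines. The `Slo` shape, the metric names, and the target values are assumptions made for this sketch, not part of any Data Mesh standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slo:
    """One service-level objective for a data product (hypothetical shape)."""
    name: str
    target: float
    higher_is_better: bool  # True for availability/throughput, False for latency


def evaluate_slos(slos, measurements):
    """Return {slo_name: met?}; a missing measurement counts as a breach."""
    results = {}
    for slo in slos:
        value = measurements.get(slo.name)
        if value is None:
            results[slo.name] = False
        elif slo.higher_is_better:
            results[slo.name] = value >= slo.target
        else:
            results[slo.name] = value <= slo.target
    return results


# Illustrative targets for a hypothetical "orders" data product.
ORDERS_SLOS = [
    Slo("availability_pct", 99.5, higher_is_better=True),
    Slo("p95_latency_seconds", 2.0, higher_is_better=False),
    Slo("rows_per_minute", 10_000, higher_is_better=True),
]
```

Treating a missing measurement as a breach is a deliberate choice here: a data product that stops reporting should not silently pass its objectives.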

Benefits of a Data Mesh

A Data Mesh offers several benefits, including:

- Improved agility: A Data Mesh can help organizations become more agile by decentralizing data ownership and architecture. Domain teams can make decisions about their data without going through a centralized team.

- Increased innovation: A Data Mesh can also help increase innovation by allowing domain teams to experiment with new data products and services.

- Reduced costs: A Data Mesh can help lower costs by removing the dependence on a centralized data team for every data request, although a shared platform and governance function is still needed.

- Improved data governance: A Data Mesh can improve data governance by making domain teams responsible for implementing local governance policies.

Domain Data as a Product

Treating data as a product rather than a byproduct signifies a transformative shift in perspective within an organization. This principle necessitates a comprehensive approach encompassing several critical elements:

Clear Product Ownership and Accountability:

- Assign a dedicated product owner responsible for the data product’s success.

- Establish clear roles and responsibilities for data stewards, engineers, analysts, and other stakeholders in the data product lifecycle.

- Implement regular communication and collaboration processes among team members to ensure alignment and timely decision-making.

Defined Service Level Agreements (SLAs):

- Develop SLAs that outline the expected data quality, availability, and performance metrics for the data product.

- Define response times, escalation procedures, and remediation plans in case of SLA violations.

- Monitor SLA compliance and adjust as needed to ensure the data product consistently meets user expectations.

Documentation and Discoverability:

- Create comprehensive documentation for the data product, including data dictionaries, lineage, and usage guidelines.

- Implement data discovery tools and platforms to enable users to search, access, and understand the available data easily.

- Provide training and support resources to help users effectively utilize the data product.
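To make the discoverability idea concrete, the sketch below models a data product descriptor and a tiny in-memory catalog with keyword search. `DataProduct`, `Catalog`, and every field name are hypothetical; a real deployment would rely on a dedicated catalog or discovery tool instead.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Minimal descriptor for a data product (illustrative fields only)."""
    name: str
    domain: str
    owner: str
    description: str
    tags: list = field(default_factory=list)


class Catalog:
    """In-memory stand-in for a data discovery tool."""

    def __init__(self):
        self._products = {}

    def register(self, product):
        # Refuse undocumented or unowned products so the catalog stays useful.
        if not product.owner or not product.description:
            raise ValueError("owner and description are required for discoverability")
        self._products[product.name] = product

    def search(self, term):
        """Return sorted product names matching the term in name, description, or tags."""
        term = term.lower()
        return sorted(
            p.name
            for p in self._products.values()
            if term in p.name.lower()
            or term in p.description.lower()
            or any(term == t.lower() for t in p.tags)
        )
```

Rejecting products without an owner or description at registration time is one simple way to tie discoverability to the ownership principle described earlier.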

Quality Metrics and Monitoring:

- Establish data quality metrics and monitoring mechanisms to assess the data product’s accuracy, completeness, consistency, and timeliness.

- Implement automated data validation and cleansing processes to identify and correct errors and inconsistencies.

- Regularly review data quality reports and take proactive measures to address any issues.
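Two of the metrics named above, completeness and timeliness, can be computed with very little code. The following is a minimal sketch that assumes records arrive as plain dictionaries and that "complete" means every required field is present and not `None`; both assumptions would need adjusting for real data.

```python
from datetime import datetime, timedelta, timezone


def completeness(records, required_fields):
    """Fraction of records where every required field is present and not None."""
    if not records:
        return 0.0
    complete = sum(
        all(r.get(f) is not None for f in required_fields) for r in records
    )
    return complete / len(records)


def is_fresh(last_updated, max_age, now=None):
    """True if the product was updated within max_age of now (UTC by default)."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated <= max_age
```

A scheduled job could run checks like these against each data product and feed the results into the quality reports mentioned above.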

User-centric Design Thinking:

- Involve users in the design and development process of the data product to ensure it meets their needs and expectations.

- Conduct user research, gather feedback, and iterate on the data product based on user input.

- Prioritize usability, accessibility, and ease of use so that the data product is intuitive and pleasant to work with.

Self-serve Data Infrastructure

To empower domain teams to operate autonomously while upholding consistency, Data Mesh necessitates a robust self-serve infrastructure platform that offers the following:

Standardized Tools and Templates:

- Provides a cohesive set of tools and pre-defined templates tailored to specific data domains, ensuring uniformity in data integration, transformation, and analysis processes.

- Simplifies the development and deployment of data pipelines, reducing the time and effort required for data engineers.

- Enhances collaboration and knowledge sharing among domain teams by promoting the reuse of proven solutions and best practices.

Automated Deployment Pipelines:

- Integrates continuous integration/continuous delivery (CI/CD) pipelines to streamline the deployment of data pipelines and applications.

- Automates the testing, validation, and deployment processes, enabling faster and more reliable releases.

- Reduces the risk of human error and ensures consistency in the deployment process across different environments.

Monitoring and Observability:

- Offers real-time monitoring and observability tools to track the health and performance of data pipelines and applications.

- Provides insights into data lineage, quality, and usage patterns, enabling proactive issue identification and resolution.

- Facilitates proactive maintenance and optimization of data infrastructure, ensuring high availability and responsiveness.

Security and Compliance Controls:

- Implements robust security measures to protect sensitive data and ensure compliance with regulatory requirements.

- Includes access control, encryption, and intrusion detection to prevent unauthorized access and data breaches.

- Provides tools for continuous compliance monitoring and reporting, aiding organizations in meeting their regulatory obligations.

Infrastructure as Code Capabilities:

- Enables managing and provisioning infrastructure resources using code, promoting consistency and repeatability.

- Allows for the automated creation and configuration of data platforms, reducing the need for manual intervention.

- Facilitates version control and collaboration, enabling teams to track changes and maintain a consistent infrastructure environment.
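One way to picture standardized templates combined with domain autonomy is a platform function that merges a domain team's overrides into platform defaults while refusing changes to locked settings. Everything below (the setting names, the defaults, and `render_pipeline_config` itself) is a hypothetical sketch rather than the API of any particular tool.

```python
import copy

# Platform-owned defaults; the keys and values are illustrative assumptions.
PLATFORM_DEFAULTS = {
    "encryption": "enabled",
    "retention_days": 365,
    "schedule": "daily",
    "alert_channel": "platform-oncall",
}
# Settings a domain team may not override.
LOCKED_KEYS = {"encryption"}


def render_pipeline_config(domain, product, overrides=None):
    """Merge domain overrides into platform defaults, rejecting locked or unknown keys."""
    overrides = overrides or {}
    for key in overrides:
        if key in LOCKED_KEYS:
            raise ValueError(f"'{key}' is fixed by the platform")
        if key not in PLATFORM_DEFAULTS:
            raise ValueError(f"unknown setting '{key}'")
    config = copy.deepcopy(PLATFORM_DEFAULTS)
    config.update(overrides)
    config["name"] = f"{domain}-{product}"
    return config
```

For example, `render_pipeline_config("sales", "orders", {"schedule": "hourly"})` yields a configuration that keeps encryption enabled while letting the domain choose its own schedule.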

Federated Computational Governance

Governance in Data Mesh operates through a federated model that strikes a delicate balance between local autonomy and global consistency. This model comprises several key components:

Global Policies and Standards:

- Establish overarching data governance principles, policies, and standards across the data mesh.

- These policies address data security, privacy, quality, and other critical governance aspects.

Local Policy Implementation:

- Empower individual domains or teams to implement specific policies and standards tailored to their unique needs and use cases.

- Local policies can be more granular and flexible, allowing for adaptation to varying data types, sources, and business requirements.

Automated Compliance Checking:

- Implement automated mechanisms to monitor and assess compliance with global and local data governance policies.

- Real-time monitoring tools can identify potential violations and trigger alerts, enabling prompt remediation.

Cross-Domain Interoperability:

- Ensure seamless data exchange and interoperability across different domains within the data mesh.

- Establish standard data formats, protocols, and APIs to facilitate efficient data sharing and consumption.

Shared Metrics and KPIs:

- Define a set of shared metrics and key performance indicators (KPIs) to measure the effectiveness of data governance practices.

- These metrics can include data quality, accessibility, compliance rates, and business value derived from data.

This federated governance model enables data domains to maintain autonomy and agility while adhering to a common set of overarching principles and standards. It promotes collaboration, transparency, and accountability across the organization, fostering a data-driven culture and ensuring responsible and effective use of data.
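The split between global and local policies, checked automatically, could look roughly like the sketch below. The policy names, the product metadata fields, and the 90-day retention rule for a "marketing" domain are all invented for illustration.

```python
def has_owner(product):
    """Global rule: every data product names an owner."""
    return bool(product.get("owner"))


def pii_is_encrypted(product):
    """Global rule: products containing PII must be encrypted."""
    return not product.get("contains_pii") or product.get("encrypted", False)


def retention_at_most_90_days(product):
    """Local rule (hypothetical): a missing retention value counts as a failure."""
    return product.get("retention_days", float("inf")) <= 90


# Global policies apply to every domain; local ones are added per domain.
GLOBAL_POLICIES = {"has_owner": has_owner, "pii_is_encrypted": pii_is_encrypted}
LOCAL_POLICIES = {"marketing": {"retention_at_most_90_days": retention_at_most_90_days}}


def violations(product):
    """Return the sorted names of all global and local policies the product fails."""
    policies = dict(GLOBAL_POLICIES)
    policies.update(LOCAL_POLICIES.get(product.get("domain"), {}))
    return sorted(name for name, check in policies.items() if not check(product))
```

Expressing policies as small functions keeps them testable and lets a domain add rules without touching the global set.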

Data Mesh Architecture Components

The implementation of Data Mesh architecture requires several key components working in harmony:

Domain-oriented Data Teams

The core members of a domain-oriented data team typically include:

- Domain Experts: These individuals possess deep knowledge and understanding of the specific business domain, its processes, pain points, and opportunities. They bring valuable insights and context to data analysis and interpretation.

- Data Engineers: Data engineers are responsible for designing, building, and maintaining the data infrastructure that supports data collection, storage, processing, and analysis. They ensure that data is accessible, reliable, and secure.

- Data Product Owners: Data product owners are accountable for defining the vision and roadmap for data products and services. They work closely with domain experts and other stakeholders to gather requirements, prioritize features, and ensure data products align with business objectives.

- Quality Assurance Specialists: Quality assurance specialists ensure data accuracy, completeness, and consistency. They develop and implement testing procedures to identify and resolve data quality issues.

- Platform Engineers: Platform engineers are responsible for building and maintaining the underlying data platform that supports the team’s data processing and analysis needs. They ensure that the platform is scalable, reliable, and secure.

These cross-functional teams work collaboratively to extract meaningful insights from data, identify trends and patterns, and develop data-driven solutions to real-world problems. They enable organizations to make informed decisions, optimize operations, and gain a competitive advantage in the market.

Data Product Ownership Model

Each data product should have clearly defined ownership and responsibility to ensure its effective management throughout its lifecycle. Here are the key areas that require clear ownership and responsibility:

Product Lifecycle Management:

- Ownership: The product manager or data steward should own the data product’s overall lifecycle, from inception to retirement.

- Responsibility: The product manager is responsible for defining the product vision, setting goals, and ensuring that the product meets the needs of its consumers.

Version Control and Change Management:

- Ownership: The data engineering team or governance committee should own version control and change management processes.

- Responsibility: The data engineering team is responsible for implementing version control systems and ensuring that changes to the data product are tracked, documented, and approved.

Consumer Engagement and Support:

- Ownership: The customer success or support team should own consumer engagement and support.

- Responsibility: The customer success team is responsible for onboarding new consumers, providing technical support, and gathering feedback to improve the data product.

Quality Metrics and Monitoring:

- Ownership: The data quality team or governance committee should own quality metrics and monitoring.

- Responsibility: The data quality team establishes quality metrics, monitors data quality, and identifies and resolves issues.

Documentation and Metadata Management:

- Ownership: The data documentation team or governance committee should own documentation and metadata management.

- Responsibility: The data documentation team is responsible for creating and maintaining accurate and up-to-date documentation, including user guides, technical specifications, and metadata definitions.

By clearly defining ownership and responsibility for each of these areas, organizations can ensure that their data products are well-managed, high-quality, and meet the needs of their consumers.

Data Infrastructure Platforms

The underlying platform must support distributed operations while maintaining consistency.

Data storage and processing:

- The platform should provide scalable and reliable data storage solutions for handling large volumes of data.

- It should support distributed processing capabilities for efficient data processing across multiple nodes or servers.

- Data replication and redundancy mechanisms should be in place to ensure data availability and prevent data loss in case of hardware failures or network disruptions.

Security and access control:

- The platform should implement robust security measures to protect data from unauthorized access and ensure confidentiality.

- It should provide fine-grained access control mechanisms to control who can access data and what operations they can perform.

- Encryption should be employed to safeguard data both at rest and in transit.

Metadata management:

- The platform should offer efficient metadata management capabilities to organize and catalog data assets.

- Metadata should include information such as data lineage, data quality metrics, and access control policies.

- Metadata should be easily discoverable and accessible to users for better understanding and utilization of data.

Monitoring and alerting:

- The platform should provide comprehensive monitoring and alerting mechanisms to track the health and performance of the underlying infrastructure.

- Real-time monitoring should be in place to detect anomalies and potential issues.

- Automated alerts should be triggered to notify administrators or relevant stakeholders about critical events or performance degradation.

Deployment automation:

- The platform should support automated deployment processes to streamline the deployment and updates of applications and services.

- It should enable continuous integration and continuous delivery (CI/CD) pipelines to facilitate rapid and iterative development cycles.

- Deployment automation should include version control, testing, and rollback capabilities for managing changes and ensuring stability.

Interoperability Standards

Standards are pivotal in facilitating seamless data exchange across disparate domains, ensuring that information can be shared and utilized effectively. Several key standards contribute to achieving this interoperability:

- Data Formats and Schemas: Standardized data formats and schemas establish common structures and representations for data elements, enabling consistent interpretation and processing. Examples include JavaScript Object Notation (JSON), Extensible Markup Language (XML), and Comma-Separated Values (CSV), each designed to represent data in a structured and machine-readable manner.

- API Specifications: Application Programming Interface (API) specifications define the protocols and methods for interacting with software applications and services. By adhering to standardized API specifications, developers can easily integrate external systems and applications, ensuring seamless data exchange and communication. Widely used API styles and protocols include Representational State Transfer (REST) and Simple Object Access Protocol (SOAP).

- Quality Metrics: Quality metrics objectively assess data accuracy, completeness, and reliability. These standards help identify and address data inconsistencies, ensuring that the exchanged data is trustworthy and fit for purpose. Standard quality metrics include data completeness, accuracy, consistency, and timeliness.

- Metadata Standards: Metadata standards define metadata’s structure and organization, providing essential information about data resources. These standards facilitate data discovery, understanding, and interoperability. Examples of metadata standards include the Dublin Core Metadata Initiative (DCMI) and the ISO/IEC 11179 Metadata Registry.

- Security Protocols: Security protocols establish guidelines and mechanisms for protecting data during exchange and storage. These standards include encryption algorithms, authentication methods, and access control procedures. By implementing standardized security protocols, organizations can safeguard sensitive information and maintain data integrity and confidentiality.
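A shared schema only helps interoperability if records are actually checked against it. The sketch below validates a record against a simple, hand-rolled schema description; `ORDER_SCHEMA` and its fields are assumptions, and production systems would more likely use an established schema language and validator.

```python
# A shared, cross-domain schema: field name -> (expected type, required?)
ORDER_SCHEMA = {
    "order_id": (str, True),
    "amount": (float, True),
    "currency": (str, True),
    "coupon": (str, False),
}


def validate_record(record, schema):
    """Return a list of human-readable problems; an empty list means the record conforms."""
    problems = []
    for name, (expected_type, required) in schema.items():
        if name not in record:
            if required:
                problems.append(f"missing required field '{name}'")
            continue
        if not isinstance(record[name], expected_type):
            problems.append(f"field '{name}' should be {expected_type.__name__}")
    for name in record:
        if name not in schema:
            problems.append(f"unexpected field '{name}'")
    return problems
```

Returning a list of problems rather than raising on the first one gives the producing domain a complete picture of what needs fixing.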

Implementing Data Mesh Architecture

Successfully implementing Data Mesh requires a carefully planned approach:

Assessment and Preparation

To ensure a successful implementation of any data-driven initiative, a comprehensive evaluation of the following key factors is crucial:

Current Data Architecture:

- Assess the existing data landscape, including data sources, formats, quality, and governance practices.

- Identify data silos, data duplication, and data inconsistencies that may hinder effective data utilization.

- Evaluate the scalability and flexibility of the current data infrastructure to handle growing data volumes and evolving business needs.

Organizational Readiness:

- Assess the organization’s readiness to embrace a data-driven culture and make data-informed decisions.

- Evaluate the leadership’s commitment to data-driven initiatives and their willingness to invest in data capabilities.

- Identify potential resistance to change and address data privacy, security, and ethical concerns.

Technical Capabilities:

- Assess the organization’s technical infrastructure, including data storage, processing, and analytics tools.

- Evaluate the skillset and expertise of the IT team to support data-driven initiatives.

- Identify gaps in technical capabilities and determine whether additional training, resources, or partnerships with external providers are needed.

Resource Availability:

- Assess the availability of financial resources, personnel, and time required to implement and sustain data-driven initiatives.

- Evaluate the organization’s capacity to handle the ongoing data collection, storage, processing, and analysis costs.

- Determine the feasibility of scaling data-driven initiatives across different departments and business units.

Cultural Alignment:

- Assess the organization’s culture and values to determine if they align with data-driven decision-making.

- Evaluate the organization’s willingness to experiment with data and embrace a culture of continuous learning and improvement.

- Identify potential cultural barriers that may hinder the adoption of data-driven practices and develop strategies to address them.

Organizational Transformation

Transform the organization to support the new architecture:

Restructure teams around domains:

- Identify and create cross-functional teams aligned with the new architecture’s domains.

- Ensure that teams have the necessary skills and expertise to support the new architecture effectively.

- Foster collaboration and communication among team members to facilitate knowledge-sharing and problem-solving.

Define new roles and responsibilities:

- Conduct a thorough analysis of the current roles and responsibilities within the organization.

- Develop new role profiles that align with the requirements of the new architecture.

- Clearly define the responsibilities, accountabilities, and expectations for each new role.

- Provide employees with the necessary training and support to effectively transition into their new roles.

Establish governance frameworks:

- Develop a comprehensive governance framework that outlines the decision-making processes, policies, and procedures for managing the new architecture.

- Define the roles and responsibilities of key stakeholders involved in governance, such as the architecture board, technical steering committee, and change control board.

- Implement mechanisms for monitoring, evaluating, and reporting on the effectiveness of the governance framework.

Develop training programs:

- Design and deliver training programs to equip employees with the knowledge and skills required to operate and maintain the new architecture.

- Tailor training programs to specific roles and responsibilities within the organization.

- Provide hands-on experience and practical exercises to reinforce learning.

- Make training programs accessible and convenient for employees through various delivery methods (e.g., online, in-person, on-demand).

Create change management plans:

- Develop a comprehensive change management plan outlining the steps, timeline, and resources required to transition to the new architecture successfully.

- Identify and address potential resistance to change by communicating the benefits of the new architecture and providing support to employees affected by the change.

- Establish mechanisms for monitoring and evaluating the progress of the change management plan and making necessary adjustments along the way.

Technical Implementation

Execute the technical transformation in phases:

Infrastructure Platform Development

- Build core services

- Establish security frameworks

- Create deployment pipelines

- Implement monitoring systems

Domain Migration

- Identify pilot domains

- Create initial data products

- Establish domain boundaries

- Implement local governance

Scale and Optimization

- Expand to additional domains

- Refine processes

- Optimize performance

- Enhance automation

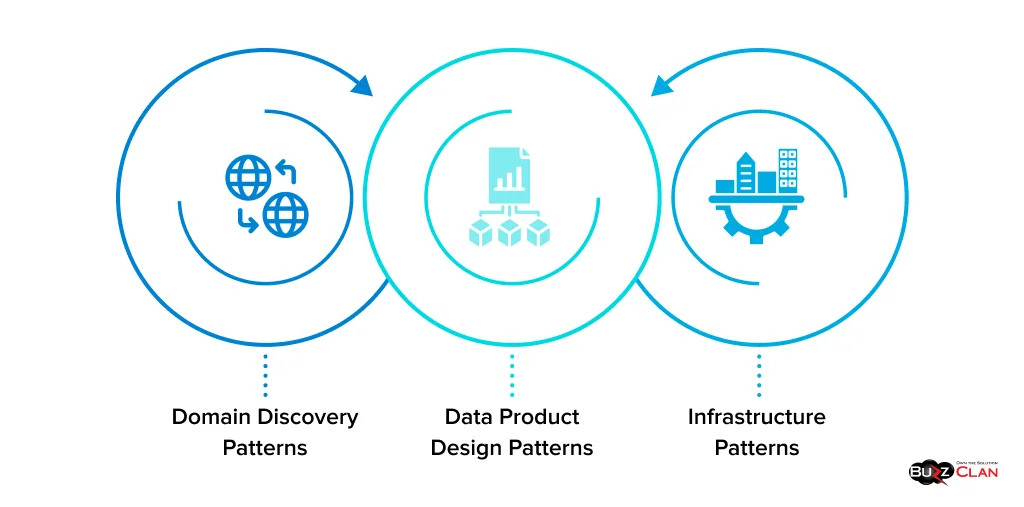

Data Mesh Architecture Patterns

Successful Data Mesh implementations rely on established patterns:

Domain Discovery Patterns

- Bounded context analysis: Identifies the different bounded contexts within a domain, each representing a distinct area of responsibility.

- Domain event storming: A collaborative workshop where stakeholders and domain experts brainstorm the key events within a domain, helping uncover the domain’s underlying structure.

- Data flow mapping: Visualizes data flow between different components within a domain, helping identify potential bottlenecks and inefficiencies.

- Responsibility mapping: Assigns responsibilities for different tasks and decisions to specific components within a domain, ensuring a clear understanding of who is responsible for what.

- Interface definition: Defines the contracts between different components within a domain, ensuring that they can communicate and interact effectively.

Data Product Design Patterns

- Schema evolution: Provides techniques for managing changes to the schema of a data product over time, ensuring that existing clients can continue to access and use the data.

- Version management: Tracks different versions of a data product, allowing users to access specific versions as needed.

- Quality control: Ensures that the data in a data product is accurate, consistent, and complete.

- Access patterns: Identifies common data access patterns, such as queries, reads, and writes, and optimizes the data product’s design to support these patterns efficiently.

- Metadata management: Captures and manages information about the data in a data product, such as its structure, lineage, and usage patterns.
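Schema evolution and version management can be tied together with a simple compatibility check. The sketch below assumes schemas are plain `{field: type_name}` mappings and uses a common rule of thumb (adding fields is safe, removing or retyping them is breaking); real schema registries apply more nuanced rules.

```python
def breaking_changes(old_schema, new_schema):
    """List changes between {field: type_name} schemas that would break existing readers.

    Assumption for this sketch: added fields are safe, while removing a
    field or changing its type is breaking.
    """
    changes = []
    for name, old_type in old_schema.items():
        if name not in new_schema:
            changes.append(f"removed field '{name}'")
        elif new_schema[name] != old_type:
            changes.append(f"changed type of '{name}': {old_type} -> {new_schema[name]}")
    return changes


def next_version(current, old_schema, new_schema):
    """Bump the major version on breaking changes, otherwise the minor version."""
    major, minor = (int(part) for part in current.split("."))
    if breaking_changes(old_schema, new_schema):
        return f"{major + 1}.0"
    if old_schema != new_schema:
        return f"{major}.{minor + 1}"
    return current
```

Deriving the version bump from the detected changes makes the compatibility promise to consumers explicit instead of leaving it to convention.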

Infrastructure Patterns

- Distributed storage: Provides techniques for storing data across multiple servers or nodes, ensuring the data is available and accessible even if one or more servers fail.

- Processing frameworks: Provides tools and libraries for processing large volumes of data in parallel, enabling efficient and scalable data analysis.

- Security models: Ensures that data is protected from unauthorized access, modification, and deletion.

- Monitoring systems: Collect and analyze data about a data infrastructure’s operation, helping identify potential problems and performance bottlenecks.

- Deployment patterns: Provide best practices for deploying and managing data infrastructure components, ensuring reliability, scalability, and security.

AWS Implementation Specifics

Implementing Data Mesh on AWS leverages several key services:

Core Services

- Amazon S3 for data storage: Amazon S3 is a highly scalable, low-cost object storage service that provides virtually unlimited storage for data of any size. It is designed for high availability and durability, making it an ideal choice for storing and protecting data.

- AWS Lake Formation for security: AWS Lake Formation is a service that helps customers build, secure, and manage data lakes in the cloud. It provides several features to help customers secure their data, including access control, encryption, and data auditing.

- Amazon EMR for processing: Amazon EMR is a managed cluster platform for running big data frameworks such as Apache Hadoop and Apache Spark, which makes it easier to process large amounts of data. It provides several features to help customers process data efficiently, including support for multiple programming languages, scalable compute resources, and various data processing tools.

- AWS Glue for metadata: AWS Glue is a fully managed ETL (extract, transform, and load) service that makes it easy to integrate data from various sources, clean and transform data, and load data into various targets. It provides several features to help customers manage metadata, including the AWS Glue Data Catalog and the AWS Glue Schema Registry.

- Amazon EventBridge for integration: Amazon EventBridge is a serverless event bus that makes it easy to connect applications and services. It provides several features to help customers integrate data and applications, including event filtering, routing, and replay support.

Reference Architecture

A typical Data Mesh implementation on AWS includes:

- Domain-specific data lakes: Data lakes are repositories for storing large amounts of data, often in its raw format. In a Data Mesh on AWS, a separate data lake is created for each business domain. This allows data consumers to find and access the data they need without searching through a single, large data lake.

- Shared control plane: The control plane is the central management component of the mesh. It provides a single control point for managing data access, security, and governance. Because it is shared across all data lakes in the mesh, all data is managed consistently.

- Cross-account access: Data consumers can access data held in multiple AWS accounts. This matters for organizations that store data in separate accounts, such as one per department or business unit. Cross-account access lets consumers reach the data they need regardless of which account stores it.

- Centralized monitoring: Monitoring is centralized across all data lakes in the mesh. This allows data engineers to track the health and performance of the mesh and to identify and resolve issues as they arise. It also helps ensure that all data is managed consistently and securely.

- Automated deployment: Data lakes and other components of the mesh are deployed through automation rather than by hand. This simplifies creating and managing the mesh and reduces the risk of errors. It also helps keep the mesh up to date with the latest features and security patches.

Best Practices

Use AWS Organizations for Domain Separation:

- Create multiple AWS accounts to separate different environments (e.g., production, testing, development) or business units.

- Use AWS Organizations to manage and control these accounts centrally.

- Implement policies to restrict cross-account access and enforce security standards.

Implement Cross-Account Access Patterns:

- Use IAM roles to grant temporary access to resources from one account to another.

- Configure resource-based policies to restrict access to specific resources based on the principal (e.g., IAM user or role) making the request.

- Implement least privilege by granting only the required permissions for a specific task.
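The cross-account pattern above usually comes down to two JSON policy documents: a trust policy on a role in the producer account, and a permissions policy scoped to what the consumer needs. The sketch below only builds those documents as Python dictionaries; the account ID, bucket name, and prefix passed in are placeholders, and the helper function names are hypothetical.

```python
import json


def trust_policy(consumer_account_id):
    """Trust policy letting principals in the consumer account assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{consumer_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def read_only_policy(bucket, prefix):
    """Least-privilege permissions: list and read objects under one prefix only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
            {
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": f"{prefix}/*"}},
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values; substitute real account IDs and bucket names.
    print(json.dumps(trust_policy("111122223333"), indent=2))
    print(json.dumps(read_only_policy("sales-data-products", "orders"), indent=2))
```

Generating the documents from code, rather than editing JSON by hand, makes it easier to apply the same least-privilege shape to every data product.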

Leverage Managed Services:

- Detect and respond to security threats using managed services such as Amazon GuardDuty, Amazon Inspector, and Amazon Macie.

- Use Amazon CloudWatch to monitor and log security-related events.

- Use AWS CloudTrail to audit API calls made to AWS resources.

Automate Security Controls:

- Use AWS Config to monitor and enforce security configurations continuously.

- Use AWS Systems Manager to automate security tasks such as patching and vulnerability management.

- Use AWS CloudFormation to deploy and manage secure infrastructure using templates.

Monitor Costs Actively:

- Use AWS Cost Explorer to monitor and analyze cloud costs.

- Set up cost budgets to track spending and receive alerts when exceeding thresholds.

- Use AWS Trusted Advisor to identify cost-saving opportunities.
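
The budget-alert idea reduces to simple arithmetic: compare spend to date against fractions of a monthly limit. The sketch below shows that logic in isolation. The `DomainBudget` type, its field names, and the 50/80/100 percent thresholds are assumptions for illustration; it does not call any AWS billing API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class DomainBudget:
    """Monthly budget for one data domain (hypothetical structure)."""
    domain: str
    monthly_limit: float
    alert_fractions: Tuple[float, ...] = (0.5, 0.8, 1.0)


def crossed_thresholds(budget: DomainBudget, spend_to_date: float) -> List[float]:
    """Return every alert fraction that current spend has reached or passed."""
    if budget.monthly_limit <= 0:
        raise ValueError("monthly_limit must be positive")
    used = spend_to_date / budget.monthly_limit
    return [f for f in sorted(budget.alert_fractions) if used >= f]


if __name__ == "__main__":
    sales = DomainBudget(domain="sales", monthly_limit=1000.0)
    for fraction in crossed_thresholds(sales, 850.0):
        print(f"{sales.domain}: spend has reached {fraction:.0%} of budget")
```

Tracking budgets per domain, rather than per account alone, keeps cost accountability aligned with data product ownership.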

Real-World Applications

Data Mesh applies across many industries. Typical data domains in each include:

Financial Services

- Customer data management: collecting, storing, and analyzing customer data to understand financial needs and behaviors.

- Risk analysis: assessing the risk of financial losses due to factors such as credit, market, and operational risks.

- Regulatory reporting: complying with financial regulations and reporting requirements, such as those imposed by the Securities and Exchange Commission (SEC) and the Financial Industry Regulatory Authority (FINRA).

- Transaction processing: handling financial transactions such as deposits, withdrawals, and payments.

- Fraud detection: identifying and preventing fraudulent transactions, such as credit card fraud and identity theft.

Healthcare

- Patient records management: collecting, storing, and managing patient medical records, including medical history, diagnoses, treatments, and medications.

- Clinical trial data management: collecting, analyzing, and reporting data from clinical trials to evaluate the safety and efficacy of new drugs and treatments.

- Insurance claims processing: processing and paying insurance claims for medical expenses.

- Research data management: collecting, storing, and analyzing research data to advance medical knowledge and improve patient care.

- Regulatory compliance: adhering to healthcare regulations and standards, such as those established by the Food and Drug Administration (FDA) and the Centers for Medicare and Medicaid Services (CMS).

Manufacturing

- Supply chain optimization: managing the flow of goods and materials from suppliers to customers, including inventory management, transportation, and logistics.

- Quality control: ensuring that products meet quality standards and specifications.

- Production planning: scheduling and coordinating the production of goods, considering factors such as demand, capacity, and lead times.

- Inventory management: tracking and controlling inventory levels to ensure enough stock to meet customer demand without overstocking.

- Predictive maintenance: monitoring equipment condition and performance to identify potential problems and prevent breakdowns.

Impact on Data Operations

Data Mesh transforms traditional data operations:

Governance Evolution

In a data mesh, governance evolves along several lines:

- Shift from centralized to federated governance: As systems become more distributed and interconnected, the traditional centralized governance approach becomes less effective. Federated governance, in which decision-making is decentralized to the level of individual domains or subsystems, is more agile and responsive.

- Automated policy enforcement: Governance policies are increasingly enforced automatically, using tools such as policy engines and configuration management systems. Automation helps ensure that policies are applied consistently and that systems remain compliant.

- Domain-specific controls: Governance controls are becoming more domain-specific, tailored to the needs of specific systems and applications. This allows for a more targeted and practical approach to governance.

- Cross-domain standards: Cross-domain governance standards are being developed to ensure interoperability and consistency across different domains. These standards provide a common framework for governance policies and controls.

- Continuous compliance: The goal of governance is not simply to achieve compliance with regulations and standards but to maintain continuous compliance in the face of changing requirements and threats. This requires a proactive approach to governance that includes ongoing monitoring and assessment.
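
The combination of cross-domain standards, domain-specific controls, and automated enforcement can be sketched as a tiny policy check. Everything here is hypothetical: the rule names, the metadata fields (`owner`, `classification`, `contains_pii`, `encrypted`), and the `healthcare` domain rule are invented for illustration, and a production setup would more likely use a dedicated policy engine.

```python
from typing import Callable, Dict, List

Rule = Callable[[dict], bool]

# Cross-domain standards: every data product must satisfy these.
GLOBAL_RULES: Dict[str, Rule] = {
    "has_owner": lambda p: bool(p.get("owner")),
    "has_classification": lambda p: p.get("classification")
    in {"public", "internal", "confidential"},
}

# Domain-specific controls, owned by each domain team.
DOMAIN_RULES: Dict[str, Dict[str, Rule]] = {
    "healthcare": {
        "pii_encrypted": lambda p: not p.get("contains_pii")
        or bool(p.get("encrypted")),
    },
}


def evaluate(product: dict) -> List[str]:
    """Return the names of all rules the data product violates."""
    rules = dict(GLOBAL_RULES)
    rules.update(DOMAIN_RULES.get(product.get("domain", ""), {}))
    return sorted(name for name, rule in rules.items() if not rule(product))


if __name__ == "__main__":
    product = {
        "domain": "healthcare",
        "owner": "claims-team",
        "classification": "confidential",
        "contains_pii": True,
        "encrypted": False,
    }
    print(evaluate(product))
```

Running a check like this on every deployment, rather than during periodic audits, is what turns point-in-time compliance into continuous compliance.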

Team Structure Changes

To support the evolution of governance, changes are also being made to team structures and responsibilities:

- Domain-aligned teams: Teams are being organized around specific domains or subsystems rather than according to traditional functional silos. This alignment helps to improve communication and collaboration and enables teams to take ownership of their domains.

- Product ownership model: A product ownership model is adopted in which each domain or subsystem has a designated product owner responsible for its governance. This role is responsible for defining the domain’s governance requirements, ensuring that those requirements are met, and communicating with stakeholders about governance issues.

- Cross-functional capabilities: Teams are being developed with cross-functional capabilities, including technical expertise, business knowledge, and governance experience. This mix of skills allows teams to address governance issues from a holistic perspective.

- Reduced dependencies: Teams rely less on central governance authorities for routine decisions, which shortens the path from a request to a delivered change.

- Increased autonomy: Teams are given increased autonomy to manage their governance processes. This autonomy allows teams to experiment with new approaches and tailor governance to their domains’ needs.

Future Trends and Considerations

The Data Mesh landscape continues to evolve, with several key trends emerging:

Integration with AI/ML platforms

As AI and machine learning (ML) become more prevalent, there is a growing need for data meshes that integrate with these platforms. This integration allows organizations to use their data to train and deploy AI/ML models and gain insights from the data generated by these models.

Enhanced automation capabilities

Data meshes are becoming increasingly automated, which can help organizations reduce the cost and complexity of managing their data. This automation includes tasks such as data discovery, data profiling, data cleansing, and data integration.

Improved interoperability standards

Work is under way to improve the interoperability of data meshes. Proposals such as the Data Mesh Interoperability Framework (DMIF) and the Data Mesh Vocabulary (DMV) aim to make it easier for organizations to connect their data meshes and share data across organizational boundaries.

Advanced governance tools

Data mesh platforms increasingly include governance tools that help organizations use their data in a compliant and ethical way. These tools include features such as data lineage tracking, data access control, and data privacy protection.
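
Of these features, lineage tracking is the easiest to illustrate. At its core it is a graph traversal: given a dataset, find everything it was derived from. The sketch below assumes lineage is stored as a simple mapping from each dataset to its direct upstream sources; the dataset names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set

# Each dataset maps to the datasets it is directly derived from.
LINEAGE: Dict[str, List[str]] = {
    "sales.daily_revenue": ["sales.orders", "finance.fx_rates"],
    "sales.orders": ["sales.raw_order_events"],
    "finance.fx_rates": [],
    "sales.raw_order_events": [],
}


def upstream_of(dataset: str, lineage: Dict[str, List[str]]) -> List[str]:
    """Return all direct and indirect upstream sources of a dataset."""
    seen: Set[str] = set()
    queue = deque(lineage.get(dataset, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # already visited; also guards against cycles
        seen.add(current)
        queue.extend(lineage.get(current, []))
    return sorted(seen)


if __name__ == "__main__":
    for source in upstream_of("sales.daily_revenue", LINEAGE):
        print(source)
```

The same traversal, run in the opposite direction, answers the impact question: which downstream products are affected if a source changes.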

Simplified implementation patterns

Data meshes are becoming easier to implement, with several new tools and platforms available to help organizations get started. These tools and platforms provide pre-built templates and best practices to help organizations quickly and easily deploy a data mesh.

These trends contribute to the growth and adoption of data meshes, which are becoming increasingly important for organizations that want to maximize the value of their data.
