Mastering Model Drift: Techniques and Best Practices for Robust Machine Learning

Rahul Rastogi

May 3, 2024

Introduction

Model drift refers to the degradation of a machine learning model's performance over time due to changes in the underlying data distribution or the relationships between input features and target variables. As the data landscape evolves, the patterns and assumptions learned by the model during training may no longer hold, leading to a decline in predictive accuracy and reliability.

Understanding and addressing model drift is crucial for anyone leveraging machine learning, as it directly impacts the effectiveness and trustworthiness of the models in production. This comprehensive article will explore the fundamentals of model drift, its causes, detection techniques, and strategies to mitigate its impact. By the end of this piece, you will have a solid understanding of model drift and be equipped with the knowledge to maintain the performance and reliability of your machine-learning models.

Fundamentals of Model Drift

Machine learning models are built on the assumption that the patterns and relationships learned from historical data will remain stable and applicable to future data. However, in reality, data is rarely static. It evolves due to various factors, such as changes in user behavior, market dynamics, or external events. When the data distribution or the underlying relationships change, the model's performance can deteriorate, leading to inaccurate predictions and suboptimal decision-making.

Model drift can be visualized by plotting the model's performance metrics, such as accuracy or error rate, against time. The model's performance curve may gradually decline as drift occurs, indicating a divergence between the model's predictions and the ground truth. This divergence can have significant consequences, especially in critical applications like healthcare diagnostics or financial risk assessment.

It's important to distinguish between model drift and related concepts like data drift.

Data Drift vs Model Drift

In machine learning, "data drift" and "model drift" are often used interchangeably, but they refer to distinct concepts. Understanding the difference is important for maintaining the performance and reliability of machine learning models over time.

Data drift, also known as feature drift or covariate shift, refers to the change in the distribution of input features over time. It occurs when the statistical properties of the data, such as the mean, variance, or correlation between features, change significantly from what the model was trained on. Data drift can happen due to various reasons, such as changes in data collection methods, sensor degradation, or population shifts.

For example, consider a fraud detection model trained on historical transaction data. If the patterns of fraudulent transactions evolve, such as fraudsters using new tactics or targeting different demographics, the input features may exhibit data drift. The model may struggle to accurately identify fraudulent transactions if it relies on outdated feature distributions.

On the other hand, model drift refers to the degradation of a machine learning model's performance over time, which can happen even when the distribution of the input data remains stable. Model drift can occur due to various factors, such as changes in the relationship between the input features and the target variable (concept drift) or the model's inability to generalize to new, unseen data.

Concept drift is a specific type of model drift in which the fundamental relationship between the input features and the target variable changes over time. It happens when the underlying concept that the model is trying to learn evolves, rendering the learned patterns and associations less relevant or accurate.

For instance, consider a customer churn prediction model in the telecommunications industry. The factors influencing customer churn may change over time due to evolving market conditions, competitor offerings, or shifts in customer preferences. Even if the input features remain stable, the model's performance may degrade if it fails to capture the new relationships between the features and the likelihood of churn.

It's crucial to distinguish between data and model drift because they require different detection and mitigation strategies. Data drift can be detected by monitoring the statistical properties of the input features and comparing them against a reference distribution. Techniques like the Kolmogorov-Smirnov test or the Population Stability Index (PSI) can quantify the degree of data drift and trigger alerts when the drift exceeds predefined thresholds.
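As a minimal sketch of the PSI check mentioned above, the function below compares one numeric feature between a reference sample and a recent sample. The function name, the equal-width binning over the reference range, and the small `eps` used for empty bins are assumptions made for illustration; production tools often use quantile bins instead.

```python
import math


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a reference sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the reference range; fall back to 1.0 if it is degenerate.
    width = (hi - lo) / n_bins or 1.0

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), n_bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

A commonly quoted rule of thumb treats a PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift, but these cut-offs are conventions rather than guarantees and are best tuned per feature.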

Mitigating data drift often involves updating the model with new training data representing the current feature distribution. This may require regular data collection, preprocessing, and model retraining to ensure the model remains aligned with the changing data landscape.

Model drift, on the other hand, is typically detected by monitoring the model's performance metrics, such as accuracy, precision, recall, or error rates, over time. Significant deviations from the expected performance or a consistent decline in metrics can indicate model drift.

Mitigating model drift may involve combining techniques, such as regular model retraining, using ensemble methods to improve robustness, or employing adaptive learning algorithms that automatically adjust the model's parameters based on new data.

It's worth noting that data drift and model drift are not mutually exclusive; they can co-occur and influence each other. Changes in the input data distribution can lead to model drift if the model is not updated to reflect the new patterns. Similarly, a drifting model may struggle to accurately capture the relationships in the data, even if the data remains stable.

Monitoring and proactively addressing data and model drift ensures the long-term performance and reliability of machine learning models. Implementing a comprehensive monitoring and alerting system, coupled with well-defined processes for data updates and model retraining, can help organizations stay ahead of drift and maintain the effectiveness of their machine learning applications.

Causes and Types of Model Drift

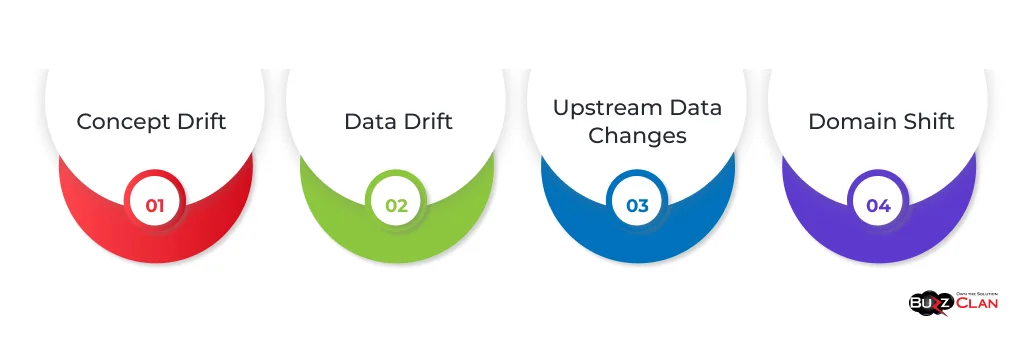

Model drift can occur for various reasons, and understanding these causes is essential for effectively managing and mitigating its impact. Let's explore the common types of model drift and their underlying causes.

Concept drift occurs when the fundamental relationship between the input features and the target variable changes over time. For example, in a customer churn prediction model, the factors influencing churn may evolve as customer preferences and market conditions change. Concept drift can be gradual, sudden, or recurring, and in each case the model must be updated to capture the new relationships.

Data drift refers to changes in the distribution of input features over time. It can happen due to various reasons, such as changes in data collection methods, sensor degradation, or population shifts. For instance, in a fraud detection model, the patterns of fraudulent transactions may change as fraudsters adapt their techniques. Data drift can lead to model performance degradation if the model is not retrained on the updated data distribution.

Model drift can also occur due to changes in the upstream data pipeline or data preprocessing steps. If the data ingestion, cleaning, or transformation processes are modified, it can introduce inconsistencies or errors that impact the model's performance. Ensuring the stability and integrity of the data pipeline is crucial for maintaining model accuracy.

Domain shift occurs when the model is applied to data from a different domain or context than it was initially trained on. For example, a sentiment analysis model trained on product reviews may perform poorly when applied to social media posts because of differences in language, tone, and context. Domain adaptation techniques can help mitigate the impact of domain shift.

Real-world examples of model drift can be found in various industries. In healthcare, a disease diagnosis model may experience concept drift as new medical knowledge emerges or treatment guidelines change. In e-commerce, a recommendation engine may face data drift as user preferences and purchasing patterns evolve.

Measuring and Detecting Model Drift

Detecting model drift is crucial for maintaining the performance and reliability of machine learning models in production. However, not all changes in model performance are immediately apparent, and proactive monitoring is necessary to identify drift early on. Let's explore some techniques and metrics for measuring and detecting model drift.

Regularly monitoring the model's performance metrics, such as accuracy, precision, recall, or error rates, can help identify potential drift. Comparing the model's performance over time against a baseline or predefined thresholds can detect significant deviations that may indicate drift. Visualization tools like performance dashboards can aid in tracking and analyzing performance trends.
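The comparison against a baseline described above could be sketched as a rolling-window accuracy check. The class name, the window size, and the allowed drop of 0.05 are hypothetical choices for illustration, and the sketch assumes ground-truth labels eventually become available for production predictions.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent labeled predictions."""

    def __init__(self, baseline_accuracy, window_size=200, max_drop=0.05):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop
        # Old outcomes fall out automatically once the window is full.
        self.outcomes = deque(maxlen=window_size)

    def update(self, prediction, label):
        """Record one labeled prediction; return True if accuracy has degraded."""
        self.outcomes.append(1 if prediction == label else 0)
        return self.degraded()

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def degraded(self):
        # Only judge once the window is full, to avoid noisy early alerts.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline - self.max_drop
```

The same pattern applies to precision, recall, or an error rate; only the per-example outcome and the direction of the comparison change.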

Statistical tests can be used to compare the distribution of input features or model predictions between the training and production data. Techniques like the Kolmogorov-Smirnov test, the Chi-squared test, or the Population Stability Index (PSI) can help quantify the degree of drift and determine if it exceeds acceptable thresholds. These tests quantitatively measure the difference between the expected and observed data distributions.
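To make the two-sample Kolmogorov-Smirnov comparison concrete, here is a small standard-library sketch. The function names are illustrative, and the critical value uses the usual large-sample approximation, so it is only a rough guide for small samples.

```python
import bisect
import math


def ks_statistic(reference, current):
    """Largest gap between the empirical CDFs of two samples."""
    ref, cur = sorted(reference), sorted(current)
    n, m = len(ref), len(cur)
    d = 0.0
    # The gap between two step functions can only change at observed values.
    for x in ref + cur:
        cdf_ref = bisect.bisect_right(ref, x) / n
        cdf_cur = bisect.bisect_right(cur, x) / m
        d = max(d, abs(cdf_ref - cdf_cur))
    return d


def ks_critical_value(n, m, alpha=0.05):
    """Large-sample approximation of the rejection threshold for the statistic."""
    c = math.sqrt(-0.5 * math.log(alpha / 2))
    return c * math.sqrt((n + m) / (n * m))


# Example: flag a feature when the statistic exceeds the critical value.
training_values = [0.1 * i for i in range(100)]
production_values = [0.1 * i + 5.0 for i in range(100)]
drifted = ks_statistic(training_values, production_values) > ks_critical_value(100, 100)
```

With very large production samples, even tiny and harmless differences become statistically significant, so it can help to alert on the size of the statistic as well as on the test outcome.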

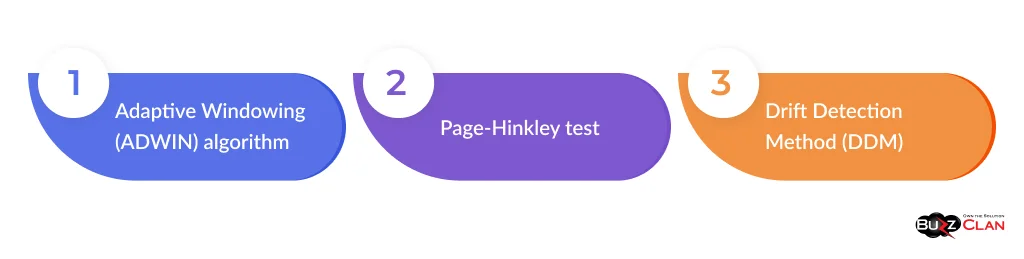

Various drift detection algorithms have been developed to identify model drift and raise alerts. These algorithms continuously monitor the model's inputs and outputs and use statistical methods to detect significant deviations from the expected behavior. Examples of drift detection algorithms include the Adaptive Windowing (ADWIN) algorithm, the Page-Hinkley test, and the Drift Detection Method (DDM).

The ADWIN algorithm is a popular drift detection method that adapts to changes in the data stream by dynamically adjusting the size of a sliding window. It maintains a window of recently seen data points and compares the statistical properties of the current window with the historical data.

The algorithm considers splits of its window into two sub-windows: an older one that acts as a reference window and a newer one, the test window, that contains the most recent data points. ADWIN computes the average of the data in each sub-window and checks whether the two averages differ by more than a statistically derived bound. The original formulation bases this bound on the Hoeffding inequality, with a tighter variant that also uses the variance of the data.

If the difference between the sub-windows exceeds a predefined threshold, ADWIN concludes that a drift has occurred and triggers an alert. It then discards the older data from the reference window and updates the window size to adapt to the new data distribution.

ADWIN is particularly effective in detecting gradual and abrupt drifts, as it dynamically adjusts the window size based on the rate of change in the data. It is widely used in streaming data scenarios, such as real-time monitoring and online learning.
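The following is a deliberately simplified sketch of the ADWIN idea, not the published algorithm: it stores every value and scans all split points, whereas the real method uses a compressed bucket structure to stay efficient. The class name, the `max_window` cap, and the minimum window length of 10 are assumptions for illustration, and inputs are assumed to lie in [0, 1] so that the Hoeffding-style bound applies.

```python
import math
from collections import deque


class SimpleAdwin:
    """Naive adaptive window over a stream of values in [0, 1]."""

    def __init__(self, delta=0.002, max_window=1000):
        self.delta = delta  # confidence parameter; smaller means fewer false alarms
        self.window = deque(maxlen=max_window)

    def update(self, value):
        """Add one value; return True if the window had to shrink (drift)."""
        self.window.append(value)
        drift = False
        while self._shrink_once():
            drift = True
        return drift

    def _shrink_once(self):
        n = len(self.window)
        if n < 10:  # hypothetical minimum before testing
            return False
        values = list(self.window)
        total = sum(values)
        delta_prime = self.delta / n
        head_sum = 0.0
        for i in range(1, n):
            head_sum += values[i - 1]
            n0, n1 = i, n - i
            mean0 = head_sum / n0            # older sub-window
            mean1 = (total - head_sum) / n1  # newer sub-window
            m = 1.0 / (1.0 / n0 + 1.0 / n1)
            eps_cut = math.sqrt(math.log(4.0 / delta_prime) / (2.0 * m))
            if abs(mean0 - mean1) >= eps_cut:
                # Some split shows a significant change: drop the oldest value.
                self.window.popleft()
                return True
        return False
```

Feeding it a stream of per-prediction errors (0 for correct, 1 for wrong) turns it into a monitor of the error rate; the window shrinks when recent errors differ significantly from older ones.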

The Page-Hinkley test is a sequential analysis technique for detecting abrupt changes in the mean value of a signal or a data stream. It is based on the cumulative sum (CUSUM) of the differences between the observed values and their expected mean.

The test maintains two variables: the cumulative sum (mT) and the minimum value of the cumulative sum (vT). The cumulative sum is updated with each new data point, adding the difference between the observed value and the expected mean. A positive difference indicates an increase in the mean, while a negative difference suggests a decrease.

The Page-Hinkley test compares the current cumulative sum (mT) with the minimum value (vT). If the difference between mT and vT exceeds a predefined threshold (lambda), the test concludes that a significant change in the mean has occurred, indicating a drift.

The Page-Hinkley test is best suited to detecting sudden shifts in the mean of a monitored quantity, such as a jump in a model's error that may signal concept drift. It is computationally efficient and can be applied in real-time scenarios.
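A minimal sketch of the test for detecting an increase in the mean is shown below, using the same quantities as the description above: `m_t` for the cumulative sum and `v_t` for its minimum. The running mean as the "expected mean", the tolerance `delta`, and the default values are assumptions chosen for illustration.

```python
class PageHinkley:
    """Page-Hinkley test for an upward shift in the mean of a stream."""

    def __init__(self, delta=0.005, threshold=50.0, min_samples=30):
        self.delta = delta          # tolerance for small fluctuations
        self.threshold = threshold  # lambda in the description above
        self.min_samples = min_samples
        self.n = 0
        self.mean = 0.0
        self.m_t = 0.0  # cumulative sum of deviations from the running mean
        self.v_t = 0.0  # minimum value the cumulative sum has reached

    def update(self, x):
        """Consume one observation; return True if a change is detected."""
        self.n += 1
        self.mean += (x - self.mean) / self.n
        self.m_t += x - self.mean - self.delta
        self.v_t = min(self.v_t, self.m_t)
        if self.n < self.min_samples:
            return False
        return (self.m_t - self.v_t) > self.threshold
```

Detecting a decrease works symmetrically by tracking the maximum of the cumulative sum instead of the minimum. A larger threshold reduces false alarms at the cost of slower detection.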

The Drift Detection Method (DDM) is a statistical approach for detecting gradual and abrupt changes in a learning algorithm's error rate. It monitors the model's performance and raises alerts when the error rate significantly increases.

DDM maintains two variables: the error rate (p) and the standard deviation (s) of the error rate. It assumes that the error rate follows a binomial distribution and uses statistical tests to determine if the observed error rate deviates significantly from the expected value.

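The method defines two thresholds relative to the lowest error level observed so far: a warning threshold and a drift threshold. In the original formulation, these are commonly set at two and three standard deviations above that minimum, respectively. If the error rate exceeds the warning threshold, DDM enters a warning state, indicating a potential drift. If the error rate exceeds the drift threshold, DDM concludes that a drift has occurred and triggers an alert.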

When a drift is detected, DDM can trigger actions such as retraining the model, updating the model's parameters, or collecting new labeled data to adapt to the changing environment.

DDM is widely used in incremental learning scenarios, where the model is continuously updated with new data. It helps maintain the model's performance by detecting and reacting to changes in the error rate.
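The DDM logic described above can be sketched as follows. The class and method names, the 30-sample warm-up, the strict comparisons, and the reset after a detected drift are assumptions made for this illustration; the warning and drift levels of 2 and 3 standard deviations follow the commonly cited defaults.

```python
import math


class DDM:
    """Drift Detection Method over a stream of 0/1 prediction errors."""

    def __init__(self, min_samples=30, warning_level=2.0, drift_level=3.0):
        self.min_samples = min_samples
        self.warning_level = warning_level
        self.drift_level = drift_level
        self.reset()

    def reset(self):
        self.n = 0
        self.p = 1.0  # running error rate
        self.s = 0.0  # standard deviation of the error rate (binomial)
        self.p_min = float("inf")
        self.s_min = float("inf")

    def update(self, error):
        """error is 1 for a wrong prediction, 0 otherwise."""
        self.n += 1
        self.p += (error - self.p) / self.n
        self.s = math.sqrt(max(self.p * (1.0 - self.p), 0.0) / self.n)
        if self.n < self.min_samples:
            return "stable"
        # Remember the best (lowest) error level seen so far.
        if self.p + self.s <= self.p_min + self.s_min:
            self.p_min, self.s_min = self.p, self.s
        if self.p + self.s > self.p_min + self.drift_level * self.s_min:
            self.reset()  # start fresh statistics for the new concept
            return "drift"
        if self.p + self.s > self.p_min + self.warning_level * self.s_min:
            return "warning"
        return "stable"
```

A typical use is to start buffering recent labeled examples when the state becomes "warning" and to retrain on that buffer when it becomes "drift".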

Drift detection algorithms are not limited to the three examples discussed here. Other notable algorithms include the Early Drift Detection Method (EDDM), the Adaptive Sliding Window Algorithm (ASWAD), and the Resampling-based Drift Detection (RDD). Each algorithm has its strengths and limitations, and the field of drift detection continues to evolve with new research and innovations.

Techniques like Bayesian inference or ensemble methods can estimate the uncertainty associated with the model's predictions. By monitoring the uncertainty levels over time, you can identify instances where the model is less confident in its predictions, which may indicate drift. Increased uncertainty can indicate that the model encounters data points different from the training distribution.
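One simple way to turn ensemble predictions into an uncertainty signal is the entropy of the averaged class probabilities, sketched below. The function names and the nested-list input layout are assumptions for illustration; the ensemble members themselves are taken as given.

```python
import math


def predictive_entropy(member_probs):
    """Entropy (in nats) of the ensemble-averaged class distribution for one input.

    member_probs: one probability vector per ensemble member.
    """
    n_models = len(member_probs)
    n_classes = len(member_probs[0])
    avg = [sum(p[c] for p in member_probs) / n_models for c in range(n_classes)]
    # Classes with zero probability contribute nothing to the entropy.
    return -sum(q * math.log(q) for q in avg if q > 0)


def mean_batch_entropy(batch):
    """Average predictive entropy over a batch of inputs."""
    return sum(predictive_entropy(m) for m in batch) / len(batch)
```

Tracking `mean_batch_entropy` on recent production batches and comparing it with the value measured on validation data gives a label-free signal; a sustained rise suggests the model is seeing inputs unlike its training data, although it does not prove that accuracy has dropped.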

Several tools and software platforms provide built-in capabilities for detecting and monitoring model drift. These tools automate collecting and analyzing model performance data, generating alerts when drift is detected, and providing visualizations and reports for easier interpretation. Examples of such tools include IBM Watson OpenScale, Amazon SageMaker Model Monitor, and Google Cloud AI Platform.

Strategies to Mitigate Model Drift

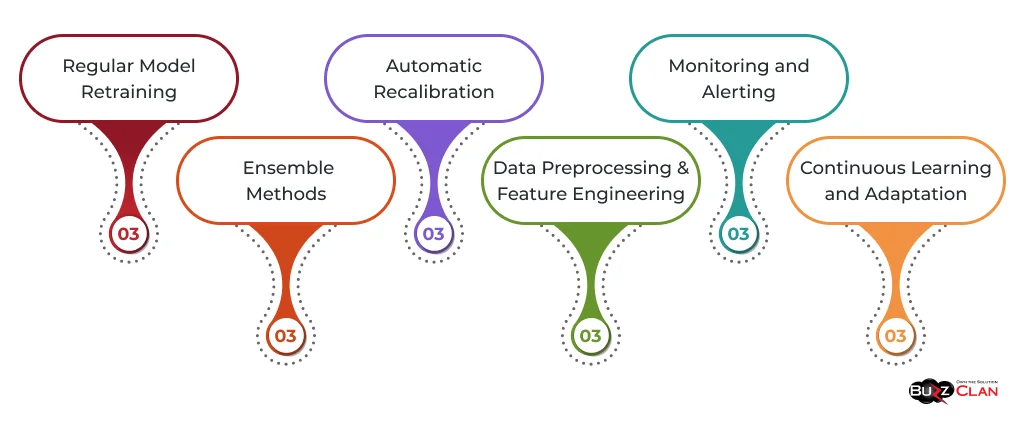

Detecting model drift is just the first step; taking proactive measures to mitigate its impact is equally important. Here are some strategies and best practices for managing and counteracting model drift:

One of the most effective ways to combat model drift is to retrain the model on updated data regularly. The model can learn and adapt to the changing data distribution by incorporating the latest data points into the training process. The retraining frequency depends on the drift rate and the application's requirements. Automated retraining pipelines can be set up to streamline this process.

Ensemble methods involve combining multiple models to make predictions, which can help mitigate the impact of drift. Using techniques like bagging, boosting, or stacking, you can create a more robust and stable model that is less sensitive to individual model fluctuations. Ensemble models can adapt better to changing data distributions and provide more reliable predictions.

Automatic recalibration techniques involve adjusting the model's parameters or decision thresholds based on the observed drift. By dynamically updating the model's calibration, you can maintain its performance and align it with the changing data distribution. Techniques like Platt scaling or isotonic regression can be used for recalibration.
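As a rough illustration of recalibration with Platt scaling, the sketch below fits a sigmoid on top of the model's raw scores using a recent batch of labeled data. It uses plain gradient descent for readability rather than the optimizer from the original method, and the function names, learning rate, and epoch count are arbitrary assumptions.

```python
import math


def fit_platt_scaling(scores, labels, lr=0.1, epochs=2000):
    """Fit p = sigmoid(a * score + b) by minimizing log loss on recent data."""
    a, b = 1.0, 0.0
    n = len(scores)
    for _ in range(epochs):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            p = 1.0 / (1.0 + math.exp(-(a * s + b)))
            grad_a += (p - y) * s
            grad_b += p - y
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


def calibrate(score, a, b):
    """Map a raw model score to a recalibrated probability."""
    return 1.0 / (1.0 + math.exp(-(a * score + b)))


# Recent labeled data where positives now only appear at higher scores.
recent_scores = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
recent_labels = [0, 0, 0, 0, 1, 1]
a, b = fit_platt_scaling(recent_scores, recent_labels)
```

Because only two parameters are refit, this is much cheaper than retraining, but it can only correct a monotonic distortion of the scores; it cannot recover a ranking that drift has broken.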

Drift can sometimes be mitigated through data preprocessing and feature engineering techniques. By carefully selecting and transforming input features, you can create a more robust and stable data representation less susceptible to drift. Techniques like feature scaling, normalization, and dimensionality reduction can help reduce the impact of drift on the model's performance.

A robust monitoring and alerting system is crucial for proactively detecting and responding to model drift. You can receive timely notifications when drift occurs by setting up automated alerts based on predefined thresholds or anomaly detection algorithms. This allows you to take corrective actions, such as retraining the model or adjusting the data pipeline, to minimize the impact of drift.

In some cases, model drift may require a more fundamental shift in the modeling approach. Continuous learning and adaptation techniques, such as online or incremental learning, can enable the model to update continuously as new data arrives. These techniques allow the model to learn from a data stream and quickly adapt to changing patterns.
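To illustrate incremental learning, here is a small online logistic regression that updates its weights one example at a time with stochastic gradient descent. The class name, the `learn_one` method name, and the learning rate are hypothetical choices for this sketch.

```python
import math


class OnlineLogisticRegression:
    """Logistic regression trained one example at a time with SGD."""

    def __init__(self, n_features, lr=0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x):
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        z = max(min(z, 35.0), -35.0)  # guard against overflow in exp
        return 1.0 / (1.0 + math.exp(-z))

    def learn_one(self, x, y):
        """Take a single gradient step on one labeled example."""
        err = self.predict_proba(x) - y
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err


model = OnlineLogisticRegression(n_features=1)
# Phase 1: positive feature values mean class 1.
for i in range(200):
    x = [1.0] if i % 2 == 0 else [-1.0]
    model.learn_one(x, 1 if x[0] > 0 else 0)
before = model.predict_proba([1.0])
# Phase 2: the concept flips, and the model follows without a full retrain.
for i in range(400):
    x = [1.0] if i % 2 == 0 else [-1.0]
    model.learn_one(x, 0 if x[0] > 0 else 1)
after = model.predict_proba([1.0])
```

A constant learning rate keeps the model responsive to new patterns; a decaying rate would converge more smoothly on stationary data but adapt more slowly after a drift.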

It's important to note that the choice of mitigation strategy depends on the specific characteristics of the model, the data, and the application domain. A combination of techniques may be necessary to manage model drift effectively and maintain performance.

Advanced Topics in Model Drift

While the fundamentals of model drift are essential for any machine learning practitioner, some advanced topics and techniques offer deeper insights and innovative solutions. Let's explore some of these advanced areas:

Drift diffusion modeling is a statistical approach aiming to capture model drift dynamics over time. It uses stochastic differential equations to model the evolution of model performance and provides a framework for understanding and predicting drift. Drift diffusion models can help estimate the drift rate, identify the most influential factors contributing to drift, and guide the selection of appropriate mitigation strategies.

Adaptive learning algorithms are designed to automatically adjust the model's parameters or structure in response to drift. These algorithms continuously monitor the model's performance and use statistical techniques to detect and adapt to changes in the data distribution. Examples of adaptive learning algorithms include the Adaptive Random Forest (ARF) and the Self-Adjusting Memory (SAM) model.

Transfer learning and domain adaptation techniques can be employed to mitigate the impact of domain shift and improve the model's performance on new or unseen data distributions. These techniques involve leveraging knowledge learned from one domain or task to enhance the model's performance in a different but related domain. Transferring learned representations or fine-tuning the model on a small amount of target domain data allows the model to adapt to the new domain more effectively.

Explainable AI techniques can provide valuable insights into the factors contributing to model drift. By understanding the model's decision-making process and the influence of individual features, you can identify the root causes of drift and take targeted actions to mitigate its impact. Techniques like feature importance analysis, partial dependence plots, and surrogate models can help explain the model's behavior and identify the most relevant factors affecting its performance.

Anomaly detection and outlier analysis techniques can be used to identify unusual or unexpected data points that may contribute to model drift. By detecting and flagging anomalies in the input data or the model's predictions, you can proactively identify potential sources of drift and take corrective actions. Techniques like isolation forests, local outlier factor (LOF), and autoencoders can be employed for anomaly detection in high-dimensional data.

These advanced topics and techniques offer a deeper understanding of model drift and provide additional tools for managing and mitigating its impact. However, they often require a strong foundation in statistical modeling, machine learning theory, and computational methods. Collaboration with domain experts and continuous learning are essential for effectively applying these advanced techniques in real-world scenarios.

Case Studies and Industry Impact

Model drift is not just a theoretical concept; it has significant implications for businesses and organizations across various industries. Let's explore some case studies and real-world examples that illustrate the impact of model drift and the strategies used to address it.

In the financial industry, fraud detection models are critical for identifying and preventing fraudulent transactions. However, as fraudsters adapt their tactics and new fraud patterns emerge, these models can experience drift. A major credit card company implemented a proactive drift detection and mitigation strategy by continuously monitoring the model's performance and retraining it on updated data. They also employed ensemble methods and anomaly detection techniques to improve the model's robustness and detect emerging fraud patterns. As a result, they maintained high fraud detection accuracy and reduced financial losses.

Machine learning models are used in healthcare for disease diagnosis and treatment recommendations. However, as medical knowledge evolves and new clinical data becomes available, these models can experience concept drift. A leading healthcare provider addressed this challenge by implementing a continuous learning framework that allowed the model to adapt to new data and medical guidelines. They also employed transfer learning techniques to leverage knowledge from related disease domains and improve the model's performance on rare or emerging conditions. By mitigating model drift, they were able to provide more accurate and up-to-date diagnoses and treatment recommendations.

E-commerce platforms rely heavily on recommendation engines to personalize user experiences and drive sales. However, these models can suffer from data drift as user preferences and purchasing patterns change. A major online retailer tackled this challenge by implementing regular model retraining and using adaptive learning algorithms to update the model's parameters continuously. They also employed collaborative filtering and matrix factorization techniques to capture evolving user preferences and improve recommendation quality. By effectively managing model drift, they maintained high user engagement and increased revenue through personalized recommendations.

These case studies demonstrate the real-world impact of model drift and the importance of implementing effective strategies to mitigate its effects. By proactively monitoring model performance, employing adaptive learning techniques, and continuously updating models with fresh data, businesses can keep their machine learning applications accurate and reliable over the long term.

Conclusion

In this comprehensive article, we have explored the concept of model drift, its causes, detection techniques, and mitigation strategies. We have seen how model drift can significantly impact the performance and reliability of machine learning models in production environments and why understanding and addressing it is crucial for maintaining the effectiveness of AI-driven solutions.

We discussed the fundamentals of model drift, including its visualization and comparison with related concepts like data drift. We delved into the various types of drift, such as concept drift, data drift, and domain shift, and explored real-world examples of how they manifest in different industries.

We also covered techniques for measuring and detecting model drift, including performance monitoring, statistical tests, and drift detection algorithms. We highlighted the importance of proactive monitoring and using tools and software platforms to automate the process.

Furthermore, we explored strategies for mitigating model drift, such as regular model retraining, ensemble methods, automatic recalibration, and continuous learning and adaptation. We emphasized the need for a combination of techniques tailored to the specific characteristics of the model and the application domain.

In the advanced topics section, we pushed the boundaries of model drift understanding by discussing drift diffusion modeling, adaptive learning algorithms, transfer learning, explainable AI, and anomaly detection. These techniques offer deeper insights and innovative solutions for managing model drift in complex scenarios.

Through case studies from finance, healthcare, and e-commerce, we demonstrated the real-world impact of model drift and the successful implementation of mitigation strategies. These examples highlight the importance of proactively addressing model drift to keep machine learning applications accurate and reliable over the long term.

As you embark on your machine learning journey, remember that model drift is an inherent challenge that requires ongoing attention and management. By incorporating drift detection and mitigation strategies into your machine learning workflows, you can greatly improve the robustness and accuracy of your models.

Stay curious, keep learning, and embrace the opportunity to improve and adapt your machine learning solutions continuously. Model drift is an exciting area of research and innovation, and by staying at the forefront of these developments, you can unlock AI's full potential and drive meaningful impact in your industry.
