MLOps Explained: The Ultimate Guide to Machine Learning Operations

Ananya Arora

Jan 14, 2025

Introduction

MLOps, short for Machine Learning Operations, is a set of practices and principles that aim to reliably and efficiently deploy and maintain machine learning models in production. It encompasses the end-to-end process of integrating machine learning models into real-world applications, from data preparation and model training to deployment, monitoring, and governance.

This comprehensive article will explore the world of MLOps, delving into its origins, core principles, and practical applications. We will examine the technological foundations of MLOps, including popular tools and platforms, and present case studies showcasing MLOps in action across various industries. Furthermore, we will provide a step-by-step guide on building an MLOps pipeline, discuss the role of data management in MLOps, and explore strategies for enterprises to scale their machine learning initiatives.

Whether you are a data scientist, machine learning engineer, or business leader looking to optimize your organization’s machine learning efforts, this article will provide valuable insights and practical knowledge to navigate the evolving landscape of MLOps.

The Emergence of MLOps

The MLOps concept is rooted in the DevOps movement, which revolutionized software development by fostering collaboration, automation, and continuous delivery. As machine learning models became increasingly integrated into software applications, the need for a similar approach to managing their lifecycle became apparent.

The term “MLOps” has gained prominence in recent years to describe the practices and principles that enable the effective development, deployment, and maintenance of machine learning models in production environments. It combines the best practices of DevOps, such as version control, continuous integration, and continuous deployment, with the unique challenges of machine learning, such as data management, model training, and performance monitoring.

MLOps differs from related fields like AIOps and ModelOps in its focus on the end-to-end lifecycle of machine learning models. While AIOps focuses on using machine learning to enhance IT operations and ModelOps emphasizes model management and governance, MLOps encompasses the entire process from data preparation to model deployment and monitoring.

Key milestones in the evolution of MLOps include the release of Google’s TFX (TensorFlow Extended), an end-to-end platform for deploying and maintaining production machine learning pipelines, and the growth of a dedicated MLOps community and conferences, such as MLOps World and OpML, which bring together practitioners and experts to share knowledge and best practices.

Core Principles of MLOps

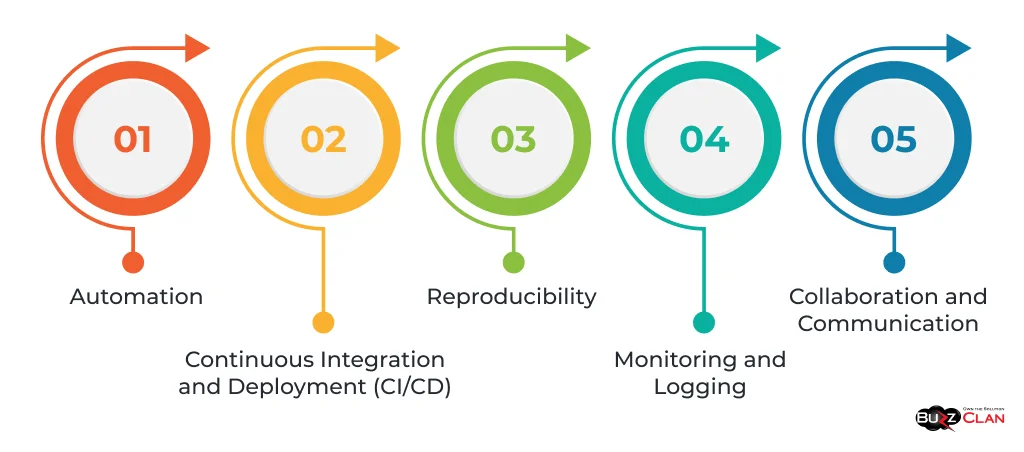

At its core, MLOps is guided by fundamental principles that ensure the reliability, scalability, and efficiency of machine learning models in production. These principles include:

- Automation: MLOps emphasizes automating the entire machine learning lifecycle, from data ingestion and preprocessing to model training, deployment, and monitoring. Automation reduces manual errors, improves reproducibility, and enables faster iterations.

- Continuous Integration and Deployment (CI/CD): MLOps adopts CI/CD practices from DevOps to enable frequent and reliable updates to machine learning models. This includes automating model building, testing, and deployment, as well as managing model versions and dependencies.

- Reproducibility: MLOps ensures that machine learning models can be consistently reproduced and redeployed. This involves version control of code, data, and models and documenting the entire pipeline to enable traceability and reproducibility.

- Monitoring and Logging: MLOps emphasizes the importance of monitoring machine learning models in production to detect anomalies, track performance metrics, and ensure the models behave as expected. Logging and auditing are crucial for debugging, compliance, and governance.

- Collaboration and Communication: MLOps fosters collaboration among data scientists, machine learning engineers, and operations teams to ensure smooth handoffs and alignment throughout the lifecycle of machine learning models. It also promotes effective communication with business stakeholders to ensure models meet their intended objectives.
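The reproducibility principle can be illustrated with a minimal Python sketch. It assumes a hypothetical `train_toy_model` function standing in for a real training job: pinning the random seed makes two runs with the same configuration produce identical results, and a hash of the configuration (`config_fingerprint`, also illustrative) gives each run a stable identifier that can be logged alongside the resulting model. Real pipelines also need to pin library versions, data versions, and hardware-dependent sources of nondeterminism, which this sketch does not cover.

```python
import hashlib
import json
import random


def config_fingerprint(config: dict) -> str:
    """Return a stable SHA-256 hash of a run configuration."""
    # sort_keys makes the JSON text, and therefore the hash, independent of key order
    canonical = json.dumps(config, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def train_toy_model(config: dict) -> list[float]:
    """Hypothetical stand-in for a training job: draws 'weights' from a seeded RNG."""
    rng = random.Random(config["seed"])  # local RNG, so global random state is untouched
    return [round(rng.gauss(0.0, 1.0), 6) for _ in range(config["n_weights"])]


config = {"seed": 42, "n_weights": 3, "learning_rate": 0.01}
run_a = train_toy_model(config)
run_b = train_toy_model(config)

# Same configuration and seed produce identical results; the fingerprint identifies the run.
print(run_a == run_b, config_fingerprint(config)[:12])
```

Logging the fingerprint next to the model artifact makes it possible to tell later which exact configuration produced a given model.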

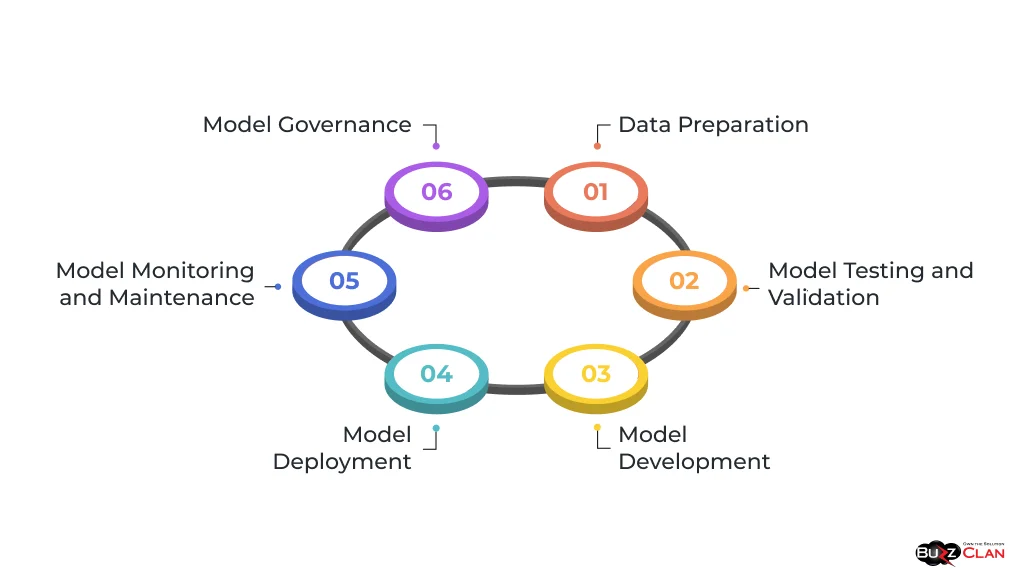

The MLOps lifecycle encompasses the entire journey of a machine learning model, from conception to retirement. It typically includes the following stages:

- Data Preparation: Collecting, cleaning, and preprocessing data to ensure it is suitable for model training.

- Model Development: Designing, training, and evaluating machine learning models based on business requirements and performance metrics.

- Model Testing and Validation: Thoroughly testing and validating models to ensure they meet performance and quality standards.

- Model Deployment: Packaging and deploying models into production environments, ensuring seamless integration with existing systems and applications.

- Model Monitoring and Maintenance: Continuously monitoring the performance and behavior of models in production, detecting anomalies, and triggering alerts or automated actions when necessary. This stage also includes regularly updating and retraining models to adapt to changing data patterns and business requirements.

- Model Governance: Establishing policies, standards, and processes for managing the lifecycle of machine learning models, ensuring compliance with regulations and ethical considerations.

By adhering to these principles and following a structured lifecycle, MLOps enables organizations to develop, deploy, and maintain machine learning models in a reliable, scalable, and efficient manner.

MLOps in the Tech Ecosystem

The technological landscape of MLOps is vast and constantly evolving, with a wide range of tools, platforms, and frameworks available to support the end-to-end machine learning lifecycle. Let’s explore some of the leading solutions in the MLOps ecosystem.

- Databricks: Databricks provides a unified analytics platform that combines data engineering, machine learning, and collaborative notebooks. It offers a fully managed MLOps solution that enables data scientists and engineers to build, train, deploy, and monitor machine learning models at scale. Databricks integrates with popular open-source libraries and frameworks, such as TensorFlow, PyTorch, and scikit-learn, and provides seamless integration with cloud platforms like AWS, Azure, and Google Cloud.

- Amazon SageMaker: Amazon SageMaker is a fully managed platform that enables data scientists and developers to quickly build, train, and deploy machine learning models. It provides an integrated Jupyter notebook interface for authoring, distributed training capabilities, and a model hosting service for deploying models in production. SageMaker also offers a range of built-in algorithms and frameworks, as well as integration with other AWS services, such as S3 for data storage and Lambda for serverless computing.

- Azure Machine Learning: Azure Machine Learning is a cloud-based platform that enables data scientists and developers to build, train, and deploy machine learning models. It provides a visual interface for building and managing machine learning workflows and supports popular open-source frameworks like TensorFlow, PyTorch, and scikit-learn. Azure Machine Learning integrates with other Azure services, such as Azure Databricks for big data processing and Azure DevOps for CI/CD pipelines.

- Google Cloud AI Platform: Google Cloud AI Platform (since succeeded by Vertex AI) is a suite of tools and services that enable data scientists and developers to build, train, and deploy machine learning models. It includes pre-built models and APIs for common use cases, such as image classification and natural language processing, and supports custom model development using TensorFlow, PyTorch, and scikit-learn. It also provides managed services for data labeling, model evaluation, and model serving.

- Kubeflow: Kubeflow is an open-source platform for deploying and managing machine learning workflows on Kubernetes. It provides tools and abstractions for building end-to-end machine learning pipelines, including data preparation, model training, hyperparameter tuning, and model serving. Kubeflow integrates with popular machine learning frameworks and tools, such as TensorFlow, PyTorch, and Jupyter notebooks, and enables scalable and portable machine learning workflows across on-premises and cloud environments.

When comparing and contrasting different MLOps stacks and frameworks, it’s important to consider factors such as ease of use, scalability, integration with existing tools and platforms, and support for specific use cases and requirements. Organizations should evaluate their current infrastructure, skills, and goals to determine the best fit for their MLOps needs.

MLOps at Work: Case Studies

Let’s explore case studies from various industries to better understand the practical applications and benefits of MLOps.

| Industry Use Case | Challenge | Solution | Results |
|---|---|---|---|
| Fraud Detection in Financial Services | A large financial institution struggled with high rates of credit card fraud, leading to significant financial losses and customer dissatisfaction. The existing fraud detection models were static and unable to adapt to evolving fraud patterns. | The institution implemented an MLOps pipeline that automated the entire lifecycle of its fraud detection models. The pipeline ingested real-time transaction data, preprocessed it, and continuously trained and deployed updated models. The MLOps platform also monitored the models' performance in production and triggered alerts when anomalies were detected. | The MLOps implementation reduced the institution's fraud losses by 60% and improved fraud detection accuracy by 30%. The automated pipeline enabled faster iteration and deployment of models, allowing the institution to stay ahead of evolving fraud patterns. |
| Predictive Maintenance in Manufacturing | A manufacturing company experienced frequent equipment failures, which led to production downtime and increased maintenance costs. The company lacked a proactive approach to predicting and preventing equipment failures. | The company implemented an MLOps solution that collected sensor data from its manufacturing equipment, preprocessed it, and trained predictive maintenance models. The MLOps platform orchestrated the deployment of the models and monitored their performance in real time. The platform triggered alerts and maintenance work orders when the models predicted a high likelihood of equipment failure. | The predictive maintenance initiative reduced unplanned downtime by 45% and maintenance costs by 30%. The MLOps platform enabled the company to optimize its maintenance schedules and proactively address potential equipment failures before they occurred. |
| Personalized Recommendations in E-commerce | An e-commerce company struggled to provide personalized product recommendations to its customers, resulting in low conversion rates and weak customer engagement. The existing recommendation models were static and failed to capture individual customer preferences. | The company implemented an MLOps pipeline that ingested customer behavior data, such as browsing history and purchase records, and trained personalized recommendation models for each customer segment. The MLOps platform automatically deployed the models and served personalized recommendations in real time through the company's website and mobile app. | The personalized recommendation initiative increased conversion rates by 25% and average order value by 15%. The MLOps platform enabled the company to continuously update and optimize its recommendation models based on real-time customer data, improving the relevance and timeliness of the recommendations. |

These case studies demonstrate the tangible impact of MLOps in solving real-world challenges and driving business value across industries. By automating and streamlining the end-to-end machine learning lifecycle, MLOps enables organizations to develop, deploy, and maintain machine learning models at scale, improving performance, efficiency, and customer satisfaction.

Building an MLOps Pipeline

Constructing an effective MLOps pipeline is crucial for operationalizing machine learning models and ensuring their reliability and scalability in production. Let’s walk through the key steps in setting up an MLOps pipeline.

| Step | Tasks |
|---|---|
| Define the Machine Learning Workflow | Identify the key stages of your machine learning workflow, such as data ingestion, data preprocessing, model training, model evaluation, and model deployment. Determine the dependencies and data flow between stages, and identify any external systems or data sources that need to be integrated. |
| Set Up Version Control | Establish a version control system, such as Git, to track changes to your code, data, and model artifacts. Create separate repositories for your data, code, and model artifacts, and establish branching and merging strategies to enable collaboration and parallel development. |
| Automate Data Ingestion and Preprocessing | Implement automated data ingestion pipelines to collect and store data from various sources, such as databases, APIs, or streaming platforms. Develop data preprocessing scripts or workflows to clean and transform the data and engineer features from it, ensuring consistency and quality. Establish data versioning and lineage tracking to enable reproducibility and traceability of data transformations. |
| Develop and Train Models | Select appropriate machine learning frameworks and libraries, such as TensorFlow, PyTorch, or scikit-learn, based on your use case and requirements. Develop modular and reusable code for model training, hyperparameter tuning, and evaluation, following best practices such as code reviews and unit testing. Implement automated training pipelines that trigger training jobs on data updates or at scheduled intervals, and track model performance metrics and artifacts. |
| Implement Model Deployment and Serving | Choose a model serving framework or platform, such as TensorFlow Serving, Kubeflow, or cloud-based solutions like Amazon SageMaker or Google Cloud AI Platform. Develop deployment scripts or workflows to package and deploy trained models to the serving infrastructure, ensuring compatibility and scalability. Establish model versioning and rollback mechanisms to enable seamless updates and rollbacks in case of issues. |
| Set Up Monitoring and Logging | Implement monitoring and logging frameworks to track the performance and behavior of models in production, such as request rates, latency, and error rates. Configure alerts and notifications to proactively detect and respond to anomalies or degradations in model performance. Establish centralized logging and dashboards to provide visibility into model performance and enable troubleshooting and root cause analysis. |
| Implement Continuous Integration and Deployment (CI/CD) | Set up CI/CD pipelines to automate the build, test, and deployment of your machine learning models. Integrate your version control system with CI/CD tools, such as Jenkins, GitLab CI/CD, or Azure DevOps, to trigger automated builds and tests on code changes. Establish automated deployment pipelines that promote models through environments such as development, staging, and production, based on predefined quality gates and approvals. |
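The first step in the table, defining stages and the dependencies between them, can be sketched as a small dependency graph executed in topological order. This is an illustrative sketch using Python's standard-library `graphlib` module (Python 3.9+), not the API of any particular orchestrator; the stage names, `WORKFLOW`, and the toy stage functions in `TOY_STAGES` are hypothetical. Orchestrators such as Kubeflow Pipelines or Airflow apply the same idea at scale, adding scheduling, retries, and distributed execution.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical workflow: each stage maps to the set of stages it depends on.
WORKFLOW = {
    "ingest": set(),
    "preprocess": {"ingest"},
    "train": {"preprocess"},
    "evaluate": {"train", "preprocess"},
    "deploy": {"evaluate"},
}

# Toy stage implementations; each receives a dict of its upstream outputs.
TOY_STAGES = {
    "ingest": lambda up: [3.0, 1.0, 2.0],
    "preprocess": lambda up: sorted(up["ingest"]),
    "train": lambda up: sum(up["preprocess"]) / len(up["preprocess"]),  # "model" = mean
    "evaluate": lambda up: abs(up["train"] - up["preprocess"][1]),       # error vs. median
    "deploy": lambda up: up["evaluate"] < 0.5,                           # deploy only if good enough
}


def execution_order(workflow):
    """Return stage names so that every stage comes after all of its dependencies.

    Raises graphlib.CycleError if the declared dependencies form a cycle.
    """
    return list(TopologicalSorter(workflow).static_order())


def run_pipeline(workflow, stages):
    """Run each stage in dependency order, passing it the outputs of its upstream stages."""
    outputs = {}
    for name in execution_order(workflow):
        upstream = {dep: outputs[dep] for dep in workflow[name]}
        outputs[name] = stages[name](upstream)
    return outputs


print(execution_order(WORKFLOW))
print(run_pipeline(WORKFLOW, TOY_STAGES)["deploy"])

try:
    execution_order({"a": {"b"}, "b": {"a"}})
except CycleError:
    print("invalid workflow: cycle detected")
```

Declaring dependencies explicitly, rather than hard-coding a call sequence, lets the runner reject cyclic workflows up front and makes it easier to add or reorder stages.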

Best practices for CI/CD in machine learning include:

| Best Practice | Description |
|---|---|
| Automated testing | Implement comprehensive testing strategies, including unit tests, integration tests, and model performance tests, to ensure the reliability and correctness of your machine learning models. |
| Reproducibility | Ensure that your CI/CD pipelines are deterministic and reproducible by versioning your code, data, and dependencies and documenting your workflows and configurations. |
| Rollback and recovery | Establish mechanisms to quickly roll back or recover from failed deployments or model issues, such as blue-green deployments or canary releases. |
| Collaboration and documentation | Establish code review processes, documentation standards, and communication channels to foster collaboration and knowledge sharing among team members. |
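An automated model performance test often ends in a quality gate that decides whether a candidate model may be promoted to the next environment. The sketch below is one possible shape for such a gate, under stated assumptions: the metric names (`accuracy`, `p95_latency_ms`) and thresholds are placeholders, and `EvalResult` and `quality_gate` are hypothetical names rather than part of any CI/CD tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    """Offline evaluation metrics for one model version (illustrative metric names)."""
    accuracy: float
    p95_latency_ms: float


def quality_gate(candidate, production, min_accuracy=0.90,
                 max_regression=0.01, max_latency_ms=100.0):
    """Return (passed, reasons); the candidate passes only if every check holds.

    Thresholds are placeholder values, to be set per use case.
    """
    reasons = []
    # Absolute floor: never promote a model below a minimum quality bar
    if candidate.accuracy < min_accuracy:
        reasons.append(f"accuracy {candidate.accuracy:.3f} is below the floor of {min_accuracy:.3f}")
    # Relative check: block candidates that are meaningfully worse than production
    if candidate.accuracy < production.accuracy - max_regression:
        reasons.append(f"accuracy regressed from {production.accuracy:.3f} to {candidate.accuracy:.3f}")
    # Serving constraint: a more accurate but too-slow model still fails
    if candidate.p95_latency_ms > max_latency_ms:
        reasons.append(f"p95 latency {candidate.p95_latency_ms:.0f} ms exceeds {max_latency_ms:.0f} ms")
    return (len(reasons) == 0, reasons)


production = EvalResult(accuracy=0.93, p95_latency_ms=40.0)
candidate = EvalResult(accuracy=0.91, p95_latency_ms=120.0)
passed, reasons = quality_gate(candidate, production)
print("promote" if passed else "block", reasons)
```

In a CI job, a failing gate would typically end the script with a non-zero exit code (for example `sys.exit(1)`), which most CI systems treat as a failed step, blocking the deployment stage that follows.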

By following these steps and best practices, you can build a robust and scalable MLOps pipeline that enables the continuous development, deployment, and monitoring of machine learning models in production.
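The monitoring and alerting step from the pipeline table can be illustrated with a small sliding-window monitor that tracks latency and error rate and reports threshold breaches. This is a minimal sketch, assuming requests are reported to it one at a time; the class name `ServingMonitor`, the window size, and the thresholds are all hypothetical. Production systems usually export these metrics to a dedicated monitoring stack rather than computing them in process.

```python
import math
from collections import deque


class ServingMonitor:
    """Keeps a sliding window of recent requests and reports threshold breaches.

    Window size and thresholds are illustrative defaults, not recommendations.
    """

    def __init__(self, window=1000, max_error_rate=0.02, max_p95_ms=200.0):
        # deque(maxlen=...) drops the oldest entry automatically once the window is full
        self.latencies_ms = deque(maxlen=window)
        self.errors = deque(maxlen=window)
        self.max_error_rate = max_error_rate
        self.max_p95_ms = max_p95_ms

    def record(self, latency_ms, is_error):
        """Log one served request."""
        self.latencies_ms.append(latency_ms)
        self.errors.append(bool(is_error))

    def p95_latency(self):
        """95th-percentile latency using the nearest-rank method (requires at least one request)."""
        ordered = sorted(self.latencies_ms)
        rank = math.ceil(0.95 * len(ordered))
        return ordered[rank - 1]

    def error_rate(self):
        return sum(self.errors) / len(self.errors)

    def alerts(self):
        """Return alert messages for every breached threshold (empty if healthy)."""
        if not self.latencies_ms:
            return []
        messages = []
        if self.error_rate() > self.max_error_rate:
            messages.append(f"error rate {self.error_rate():.1%} exceeds {self.max_error_rate:.1%}")
        if self.p95_latency() > self.max_p95_ms:
            messages.append(f"p95 latency {self.p95_latency():.0f} ms exceeds {self.max_p95_ms:.0f} ms")
        return messages


monitor = ServingMonitor(window=100)
for i in range(100):
    # Simulated traffic: latencies of 10-109 ms, with the first 5 requests failing
    monitor.record(latency_ms=10.0 + i, is_error=i < 5)
print(monitor.alerts())
```

Because the window only holds recent requests, alerts clear on their own once healthy traffic has replaced the failing requests.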

The Role of Data in MLOps

Data is the lifeblood of machine learning, and effective data management is crucial for the success of MLOps initiatives. In this section, we will explore the importance of data quality and versioning in MLOps and introduce the concept of DataOps and its integration with MLOps.

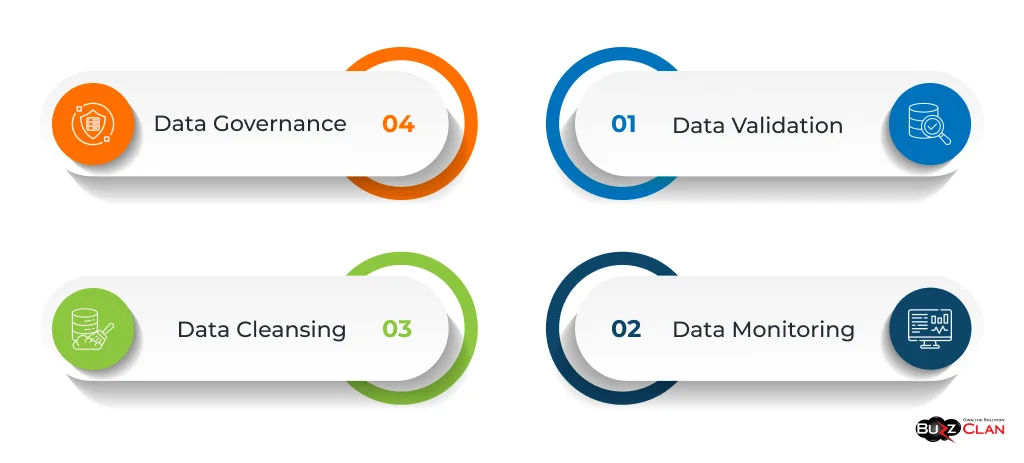

Data quality is a critical factor in the performance and reliability of machine learning models. Poor data quality, such as missing values, outliers, or inconsistencies, can lead to inaccurate predictions and suboptimal model performance. In MLOps, ensuring data quality involves several key practices:

- Data Validation: Implementing automated data validation checks to ensure incoming data meets predefined quality criteria, such as data type, range, and consistency constraints. Data validation can be performed at various data pipeline stages, such as data ingestion, preprocessing, and model training.

- Data Monitoring: Continuously monitoring the quality and distribution of data used for model training and inference and detecting anomalies or drift in data patterns. Data monitoring helps identify issues impacting model performance and enables proactive remediation.

- Data Cleansing: Establishing data cleansing processes to handle missing values, outliers, and inconsistencies in the data. Depending on the nature of the data and the requirements of the machine learning model, data cleansing techniques may include imputation, filtering, or transformation.

- Data Governance: Implementing policies and processes to ensure data security, privacy, and compliance in machine learning workflows. Data governance includes access controls, encryption, and retention policies.
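The data validation practice can be sketched as a set of per-column checks against a declared schema, covering the type, range, and missing-value constraints described above. In this minimal example the `SCHEMA` dictionary, its column names, and its bounds are hypothetical; libraries such as Great Expectations or TensorFlow Data Validation provide richer versions of the same idea.

```python
from typing import Any

# Hypothetical schema: column -> (expected type, minimum, maximum, nullable)
SCHEMA = {
    "age": (int, 0, 120, False),
    "income": (float, 0.0, None, True),
    "country": (str, None, None, False),
}


def validate_record(record: dict[str, Any], schema=SCHEMA) -> list[str]:
    """Return human-readable violations; an empty list means the record passed."""
    problems = []
    for column, (expected_type, low, high, nullable) in schema.items():
        value = record.get(column)
        if value is None:
            if not nullable:
                problems.append(f"{column}: missing value")
            continue
        # Note: bool is a subclass of int in Python, so True would pass an int check
        if not isinstance(value, expected_type):
            problems.append(f"{column}: expected {expected_type.__name__}, got {type(value).__name__}")
            continue
        if low is not None and value < low:
            problems.append(f"{column}: {value} is below the minimum {low}")
        if high is not None and value > high:
            problems.append(f"{column}: {value} is above the maximum {high}")
    # Columns that the schema does not know about are flagged too
    for column in sorted(set(record) - set(schema)):
        problems.append(f"{column}: not in schema")
    return problems


def split_batch(records, schema=SCHEMA):
    """Separate a batch into passing records and (record, problems) pairs that failed."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record, schema)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Rejected records can be quarantined for inspection rather than silently dropped, which keeps data quality problems visible to the team.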

Data versioning is another critical aspect of MLOps, as it enables the reproducibility and traceability of machine learning workflows. Data versioning involves tracking changes to datasets over time, including metadata such as data source, schema, and transformations applied. By versioning data, MLOps teams can:

- Reproduce Models: Recreate the exact data conditions under which a model was trained, enabling the reproduction of model results and the debugging of issues.

- Compare Models: Evaluate the impact of data changes on model performance by comparing models trained on different versions of the data.

- Ensure Compliance: Maintain an audit trail of data lineage and provenance, which is crucial for regulatory compliance and data governance.
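Data versioning depends on identifying a dataset unambiguously. One common approach is content addressing: derive the version ID from a hash of the data itself, so identical data always gets the same ID and any change produces a new one. The sketch below assumes small in-memory datasets represented as lists of dictionaries; `dataset_version`, its metadata fields, and the example source path are illustrative. It only identifies and describes a version; tools such as DVC also store and retrieve the versioned data.

```python
import hashlib
import json
from datetime import datetime, timezone


def dataset_version(rows: list[dict], source: str, transformations: list[str]) -> dict:
    """Build a content-addressed version record for a dataset.

    The version ID is a hash of the data itself, so any change to any row
    (or to row order) yields a new ID, while identical data maps to the same ID.
    """
    digest = hashlib.sha256()
    for row in rows:
        # Canonical JSON per row keeps the hash independent of dict key order
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return {
        "version_id": digest.hexdigest()[:16],
        "source": source,
        "schema": sorted({key for row in rows for key in row}),
        "transformations": transformations,
        "num_rows": len(rows),
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


rows = [{"customer_id": 1, "amount": 42.5}, {"customer_id": 2, "amount": 13.0}]
meta = dataset_version(rows, source="s3://example-bucket/transactions.csv",
                       transformations=["drop_nulls", "normalize_amount"])
print(meta["version_id"], meta["schema"], meta["num_rows"])
```

Storing `version_id` in a model's training metadata is what later allows the exact training data to be looked up, provided the underlying data is retained.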

DataOps is an emerging discipline focusing on the end-to-end management and optimization of data pipelines, from data ingestion to consumption. It shares many principles with MLOps, such as automation, continuous integration and delivery, and team collaboration.

Integrating DataOps and MLOps enables a holistic approach to managing the entire data and machine learning lifecycle. By applying DataOps practices to the data management aspects of MLOps, organizations can:

- Ensure Data Quality: Implement automated data validation, monitoring, and cleansing processes to maintain high-quality data for machine learning workflows.

- Streamline Data Pipelines: Automate and optimize data pipelines to enable efficient and reliable data ingestion, preprocessing, and feature engineering for machine learning models.

- Enable Data Discovery and Collaboration: Establish data catalogs and metadata management practices to enable data discovery, collaboration, and reuse across teams and projects.

- Ensure Data Security and Compliance: Implement data security and compliance measures, such as data encryption, access controls, and auditing, to protect sensitive data and meet regulatory requirements.

By leveraging DataOps practices and integrating them with MLOps, organizations can build a robust and scalable foundation for their machine learning initiatives, ensuring high-quality data, efficient pipelines, and compliant workflows.

MLOps for the Enterprise

Enterprises face unique challenges when adopting MLOps at scale, such as managing complex data landscapes, ensuring governance and compliance, and integrating with existing systems and processes. This section will explore strategies for enterprises to leverage MLOps effectively and discuss the role of MLOps consulting and services in supporting enterprise adoption.

To successfully implement MLOps at an enterprise scale, organizations should consider the following strategies:

| Strategy | Description |
|---|---|
| Establish an MLOps Center of Excellence (CoE) | Create a centralized team or organization to define MLOps standards, best practices, and governance policies. The MLOps CoE serves as a hub for knowledge sharing, training, and support for machine learning teams across the enterprise. |
| Develop an MLOps Platform Strategy | Evaluate and select an MLOps platform that aligns with the organization's requirements, such as scalability, security, and integration with existing tools and systems. The MLOps platform should provide end-to-end data management, model development, deployment, and monitoring capabilities. |
| Implement Governance and Compliance Frameworks | Establish governance and compliance frameworks to ensure machine learning workflows adhere to internal policies and external regulations. This includes practices such as model validation, risk assessment, and audit trails. |
| Foster Collaboration and Knowledge Sharing | Encourage collaboration and knowledge sharing among data scientists, machine learning engineers, and domain experts across the enterprise. Establish communities of practice, forums, and knowledge bases to facilitate the exchange of ideas, best practices, and lessons learned. |
| Invest in Skills Development and Training | Provide training and development opportunities for employees to acquire MLOps skills and stay current with the latest tools and techniques. This includes technical training for data scientists and machine learning engineers and business training for managers and executives to understand the potential and limitations of MLOps. |
| Measure and Optimize MLOps Performance | Establish key performance indicators (KPIs) and metrics to measure the effectiveness and efficiency of MLOps processes, such as model deployment frequency, model performance, and data quality. Continuously monitor and optimize MLOps workflows based on these metrics to drive continuous improvement. |

MLOps consulting and services can play a crucial role in helping enterprises navigate the complexity of MLOps adoption. MLOps consultants bring expertise and experience in implementing MLOps solutions across various industries and use cases. They can provide valuable guidance and support in areas such as:

- MLOps Strategy and Roadmap Development: Assessing the organization’s current MLOps maturity, identifying gaps and opportunities, and developing a strategic roadmap for MLOps adoption.

- MLOps Platform Selection and Implementation: Evaluating and selecting the most suitable MLOps platform based on the organization’s requirements and supporting its implementation and integration with existing systems and processes.

- MLOps Workflow Design and Optimization: Designing and optimizing end-to-end MLOps workflows, including data management, model development, deployment, and monitoring, based on best practices and industry standards.

- MLOps Governance and Compliance: Developing and implementing governance and compliance frameworks for MLOps, including model validation, risk assessment, and audit trails, to ensure adherence to internal policies and external regulations.

- MLOps Training and Enablement: Providing training and enablement programs for data scientists, machine learning engineers, and business stakeholders to build MLOps skills and foster a culture of continuous learning and improvement.

By leveraging the expertise and support of MLOps consulting and services, enterprises can accelerate their MLOps adoption journey, avoid common pitfalls, and realize the full potential of machine learning at scale.

The MLOps Community and Resources

The MLOps community is a vibrant and growing ecosystem of practitioners, researchers, and thought leaders passionate about advancing the field of MLOps. Engaging with the MLOps community and leveraging available resources can be invaluable for individuals and organizations looking to deepen their knowledge and stay current with the latest trends and best practices.

One of the primary ways to engage with the MLOps community is through online forums and platforms. Some popular MLOps community platforms include:

- MLOps Community (https://mlops.community/): A dedicated online community for MLOps practitioners featuring forums, blogs, and events.

- Kubeflow Community (https://www.kubeflow.org/docs/about/community/): The official community for the Kubeflow open-source MLOps platform, offering mailing lists, forums, and contributor resources.

- MLOps.org (https://mlops.org/): A community-driven platform for MLOps knowledge sharing and collaboration, featuring articles, tutorials, and a curated list of MLOps tools and resources.

- Data Science and Machine Learning Platform Engineering Meetup: A global meetup group focused on MLOps and data science platform engineering, with regular online and in-person events.

In addition to community platforms, there are numerous resources available for individuals looking to deepen their knowledge of MLOps, including:

Books:

- “Machine Learning Engineering” by Andriy Burkov

- “Introducing MLOps: How to Scale Machine Learning in the Enterprise” by Mark Treveil et al.

- “Building Machine Learning Powered Applications: Going from Idea to Product” by Emmanuel Ameisen

Courses and Certifications:

- ML Ops Fundamentals

- MLOps: Machine Learning Operations Specialization

- MLOps Engineer Nanodegree

Conferences and Events:

- MLOps World (https://mlopsworld.com/)

- OpML (https://www.opml.co/)

- MLOps Summit (https://www.mlops-summit.com/)

Blogs and Tutorials:

- Google Cloud MLOps Guides

- Microsoft Azure MLOps Documentation

- Towards Data Science MLOps Articles

By engaging with the MLOps community and leveraging available resources, practitioners can stay informed about the latest advancements, share knowledge and experiences, and collaborate on solving common challenges in operationalizing machine learning at scale.

Navigating MLOps Challenges

While MLOps offers significant benefits for organizations looking to scale their machine learning efforts, it also presents several challenges that must be navigated effectively. This section will explore common MLOps challenges and discuss strategies for overcoming them, including insights on monitoring, version control, and model governance.

| Challenge | Description | Strategy |
|---|---|---|
| Complexity and Skill Gaps | MLOps involves a complex interplay of data, models, and infrastructure, requiring a diverse set of skills spanning data science, software engineering, and IT operations. Organizations often struggle to find and retain talent with the necessary MLOps skills. | Invest in upskilling and reskilling programs to build MLOps capabilities within the existing workforce. Foster a culture of continuous learning and provide opportunities for cross-functional collaboration and knowledge sharing. Consider partnering with MLOps consulting and service providers to bridge skill gaps and accelerate adoption. |
| Data Quality and Consistency | Machine learning models are highly dependent on the quality and consistency of their input data. Ensuring data quality across disparate sources, formats, and pipelines can be a significant MLOps challenge. | Implement robust data validation, monitoring, and cleansing processes to identify and address data quality issues proactively. Establish data governance policies and standards to ensure the consistency and reliability of data across the MLOps lifecycle. |
| Model Versioning and Reproducibility | As machine learning models evolve, managing multiple versions of models, data, and code can become complex and hinder reproducibility. | Adopt version control practices for models, data, and code using tools like Git and MLflow. Establish clear versioning conventions and metadata management practices to enable traceability and reproducibility of ML workflows. |
| Model Monitoring and Drift Detection | Model performance can degrade over time as input data distributions shift (data drift) or the relationship between inputs and the target changes (concept drift). | Implement continuous monitoring and drift detection mechanisms to proactively identify and alert on model performance degradation. Establish automated retraining and model updating workflows to adapt models to changing data patterns. |
| Model Governance and Compliance | Ensuring model governance and compliance with internal policies and external regulations can be complex, especially in regulated industries such as finance and healthcare. | Develop model governance frameworks that define policies, standards, and processes for model development, validation, and deployment. Implement model risk assessment and audit trails to ensure compliance with regulatory requirements. Foster collaboration between data science, legal, and compliance teams to align MLOps practices with governance objectives. |
| Scalability and Performance | As the volume and complexity of machine learning workloads grow, ensuring the scalability and performance of MLOps pipelines can become challenging. | Leverage cloud-native technologies and serverless architectures to enable elastic scaling of MLOps workloads. Optimize data pipelines and model serving infrastructure for performance and cost-efficiency. Conduct regular performance testing and capacity planning to identify and address bottlenecks. |
| Integration with Business Processes | Integrating machine learning models into existing business processes and systems can be complex, requiring collaboration across multiple stakeholders and technology stacks. | Foster close collaboration between data science, engineering, and business teams to align MLOps initiatives with business objectives. Develop integration strategies and APIs to incorporate machine learning models into business workflows seamlessly. Establish feedback loops and communication channels to gather input and iterate on model performance and business impact. |
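Drift detection is often done by comparing the distribution of a feature in recent production data against its training baseline. A minimal sketch using the Population Stability Index (PSI), one common drift metric, is shown below. PSI sums (a - e) * ln(a / e) over bins, where e and a are the shares of baseline and recent values falling in each bin. The equal-width binning over the baseline's range, the `eps` floor for empty bins, and the 0.1 / 0.25 cut-offs in `drift_level` are assumptions; those cut-offs are a widely used rule of thumb rather than a standard.

```python
import math


def psi(expected, actual, bins=10, eps=1e-4):
    """Population Stability Index between a baseline sample and a recent sample.

    Bin edges are equal-width over the baseline's range; values outside that
    range are clipped into the first or last bin. eps avoids log(0) for empty bins.
    """
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - low) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values into edge bins
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_level(score):
    """Map a PSI score to a label using a common rule of thumb (not a universal standard)."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate"
    return "significant"


baseline = [i / 100 for i in range(1000)]  # training-time feature values
recent = [x + 5 for x in baseline]         # simulated shift in production
print(drift_level(psi(baseline, recent)))
```

A monitoring job could compute this score per feature on a schedule and raise an alert, or start an automated retraining workflow, when it crosses the chosen threshold.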

By proactively addressing these challenges and implementing effective strategies, organizations can navigate the complexities of MLOps and realize the full potential of machine learning at scale. Continuous monitoring, robust version control, and strong model governance practices are key to ensuring the reliability, reproducibility, and compliance of MLOps workflows.

Careers in MLOps

As machine learning adoption grows across industries, the demand for professionals with MLOps skills is rising. In this section, we will explore the MLOps engineer role, including the required skills and potential career paths, as well as data on job market trends and salary expectations.

The MLOps engineer plays a critical role at the intersection of data science, software engineering, and IT operations. MLOps engineers are responsible for designing, implementing, and maintaining the infrastructure and workflows that enable the deployment and operation of machine learning models in production environments.

Key responsibilities of an MLOps engineer include:

- Developing and automating MLOps pipelines for data ingestion, preprocessing, model training, and deployment.

- Implementing version control and reproducibility practices for models, data, and code.

- Designing and managing the infrastructure for model serving, monitoring, and scaling.

- Collaborating with data scientists, software engineers, and business stakeholders to ensure the reliability, performance, and business impact of machine learning models.

- Implementing model governance and compliance processes, including model validation, risk assessment, and audit trails.
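One lightweight way to support the version control, reproducibility, and audit-trail responsibilities above is to record, for every training run, a content hash of the data alongside the code version, hyperparameters, and random seed. The sketch below assumes an in-memory byte string stands in for the dataset and that a Git commit hash identifies the code; the function and field names, and all values, are hypothetical. In practice, dedicated tools such as DVC or MLflow handle this kind of tracking.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """SHA-256 content hash of a dataset or model artifact."""
    return hashlib.sha256(data).hexdigest()


def training_run_record(
    data: bytes, code_version: str, params: dict, seed: int, metrics: dict
) -> dict:
    """Audit-trail entry tying a trained model to its exact data, code, and config."""
    return {
        "data_sha256": fingerprint(data),  # changes if even one byte of data changes
        "code_version": code_version,      # e.g. a Git commit hash
        "params": params,                  # hyperparameters used for training
        "seed": seed,                      # random seed, needed to rerun the job
        "metrics": metrics,                # evaluation results for later comparison
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


# Hypothetical values for illustration only.
record = training_run_record(
    data=b"id,label\n1,0\n2,1\n",
    code_version="3f2c9e1",
    params={"learning_rate": 0.01, "max_depth": 6},
    seed=42,
    metrics={"val_auc": 0.91},
)
print(json.dumps(record, indent=2, sort_keys=True))
```

Storing such records next to the model artifact lets an auditor confirm which data and configuration produced any deployed model.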

To succeed in an MLOps engineer role, individuals should possess a combination of technical and soft skills, including:

- Strong programming skills in languages such as Python, Java, and Scala.

- Familiarity with machine learning frameworks such as TensorFlow, PyTorch, and scikit-learn.

- Experience with data engineering and pipeline tools like Apache Spark, Airflow, and Kafka.

- Knowledge of containerization and orchestration technologies such as Docker and Kubernetes.

- Understanding of software engineering best practices, including version control, testing, and continuous integration/delivery (CI/CD).

- Strong problem-solving and analytical skills, with the ability to troubleshoot and optimize complex systems.

- Excellent communication and collaboration skills, with the ability to work effectively with cross-functional teams.

Potential career paths for MLOps engineers include:

- MLOps Architect: Designing and overseeing the implementation of enterprise-wide MLOps strategies and platforms.

- MLOps Manager: Leading and managing teams of MLOps engineers, ensuring the delivery of high-quality machine learning solutions.

- Data Science Platform Engineer: Building and maintaining the infrastructure and tools that enable data scientists to develop and deploy machine learning models efficiently.

- Machine Learning Infrastructure Engineer: Designing and operating the underlying infrastructure for machine learning workloads, including computing, storage, and networking.

The demand for MLOps skills is growing rapidly as organizations seek to operationalize their machine learning initiatives at scale. The World Economic Forum’s Future of Jobs 2023 report estimates that by 2027, demand for data analysts, scientists, engineers, BI analysts, and other big data and database professionals will grow by 30%–35%. This figure covers data roles broadly rather than MLOps alone, but it signals strong demand for the skills MLOps engineers rely on.

Salary expectations for MLOps engineers vary based on location, experience, and industry. According to ZipRecruiter, as of Dec 30, 2024, the average annual pay for an MLOps engineer in the United States is $87,220. ZipRecruiter reports annual salaries as high as $136,500 and as low as $37,000, but most MLOps engineer salaries currently fall between $76,500 (25th percentile) and $97,500 (75th percentile), with top earners (90th percentile) making $114,500 annually across the United States.

As the field of MLOps continues to evolve, individuals with a strong foundation in data science, software engineering, and IT operations, along with a passion for continuous learning and innovation, will be well-positioned to build rewarding careers in this exciting and impactful domain.

The Future of MLOps

As machine learning becomes increasingly integral to business operations and decision-making, the future of MLOps is poised for significant growth and innovation. In this section, we will explore emerging trends and predictions for the evolution of MLOps, including technological advancements and market growth, as well as the potential impact on fields such as large language models (LLMs) and edge computing.

Automated Machine Learning (AutoML):

- Prediction: AutoML technologies will mature and become more widely adopted, enabling the automation of key tasks such as feature engineering, model selection, and hyperparameter tuning.

- Impact: AutoML will democratize machine learning, making it more accessible to a broader range of users and reducing the reliance on scarce data science expertise.
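The hyperparameter-tuning part of AutoML can be illustrated with a toy grid search. The sketch below fits a one-feature ridge regression (no intercept) for each candidate regularization strength and keeps the value with the lowest validation error. The model, data, and function names are hypothetical and chosen only so the example fits in a few lines; real AutoML systems search far larger spaces of models, features, and hyperparameters, often with smarter strategies than an exhaustive grid.

```python
from typing import Sequence, Tuple

Dataset = Tuple[Sequence[float], Sequence[float]]  # (inputs, targets)


def fit_ridge_1d(xs: Sequence[float], ys: Sequence[float], lam: float) -> float:
    """Closed-form ridge slope for y ≈ w * x: w = Σxy / (Σx² + λ)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mse(w: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Mean squared error of the slope-only model on one data split."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(train: Dataset, valid: Dataset, grid: Sequence[float]) -> Tuple[float, float]:
    """Return (best_lambda, validation_mse) after trying every value in the grid."""
    scored = []
    for lam in grid:
        w = fit_ridge_1d(*train, lam)         # fit on the training split only
        scored.append((mse(w, *valid), lam))  # score on held-out data
    best_err, best_lam = min(scored)
    return best_lam, best_err


# Hypothetical data: the true slope is 1, but the last training point is an outlier.
train = ([1.0, 2.0, 3.0], [1.0, 2.0, 6.0])
valid = ([4.0], [4.0])
print(grid_search(train, valid, [0.0, 9.0, 100.0]))  # (9.0, 0.0)
```

Here moderate regularization wins because it dampens the outlier's pull on the slope, while too much regularization shrinks the slope toward zero.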

MLOps Platforms and Marketplaces:

- Prediction: The emergence of comprehensive MLOps platforms and marketplaces will simplify the adoption and integration of MLOps tools and services.

- Impact: MLOps platforms and marketplaces will enable organizations to quickly and easily access best-of-breed tools and services, accelerating the development and deployment of machine learning solutions.

Explainable AI (XAI) and Model Interpretability:

- Prediction: The demand for explainable and interpretable machine learning models will grow, driven by regulatory requirements and the need for transparency and accountability.

- Impact: MLOps will play a critical role in enabling the development and deployment of explainable and interpretable models through techniques such as model-agnostic explanations and counterfactual analysis.
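Permutation importance is one simple example of the model-agnostic explanation techniques mentioned above: it treats the model as a black box and measures how much its error grows when a single feature's values are shuffled. The sketch below is a minimal single-shuffle version using mean squared error; the function, model, and data are hypothetical. Libraries such as scikit-learn (`sklearn.inspection.permutation_importance`) provide more complete implementations, including repeated shuffles.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]


def permutation_importance(
    predict: Callable[[Row], float],
    X: List[Row],
    y: Sequence[float],
    feature: int,
    seed: int = 0,
) -> float:
    """Model-agnostic importance: rise in MSE after shuffling one feature column."""

    def mse(rows: List[Row]) -> float:
        return sum((predict(r) - t) ** 2 for r, t in zip(rows, y)) / len(y)

    baseline = mse(X)
    column = [row[feature] for row in X]
    random.Random(seed).shuffle(column)  # break the link between feature and target
    shuffled = [
        list(row[:feature]) + [value] + list(row[feature + 1:])
        for row, value in zip(X, column)
    ]
    return mse(shuffled) - baseline


def model(row: Row) -> float:
    """Hypothetical black-box model that only looks at feature 0."""
    return 3.0 * row[0]


X = [[float(i), float(i % 3)] for i in range(20)]
y = [3.0 * row[0] for row in X]
print(permutation_importance(model, X, y, feature=0))  # positive: the model relies on it
print(permutation_importance(model, X, y, feature=1))  # 0.0: the model ignores it
```

Because it needs only a `predict` function, the same check works for any model type, which is what makes it convenient to run inside an automated pipeline.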

Federated Learning and Privacy-Preserving ML:

- Prediction: Federated learning and privacy-preserving techniques will become more prevalent, enabling the training of machine learning models on decentralized and sensitive data.

- Impact: MLOps must evolve to support federated learning and privacy-preserving workflows, ensuring the secure and compliant exchange of model updates and aggregations.
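The exchange of model updates and aggregations described above is often handled with Federated Averaging (FedAvg): each client trains locally and sends only its parameters, and a coordinator averages them, weighted by how many samples each client used. The sketch below shows only that aggregation step, with parameters as plain float lists; the names and values are hypothetical, and production systems typically add secure aggregation, authentication, and sometimes differential privacy on top.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]], client_sizes: Sequence[int]
) -> List[float]:
    """FedAvg-style aggregation: average parameter vectors, weighted by local sample counts."""
    if len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total == 0:
        raise ValueError("clients reported no training samples")
    dim = len(client_weights[0])
    return [
        sum(weights[i] * n for weights, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Hypothetical round: two clients send updated parameters, never their raw data.
global_model = federated_average([[1.0, 0.0], [3.0, 4.0]], client_sizes=[1, 3])
print(global_model)  # [2.5, 3.0]
```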

MLOps for Large Language Models (LLMs):

- Prediction: The rise of large language models, such as GPT-3 and BERT, will create new challenges and opportunities for MLOps.

- Impact: MLOps must adapt to LLMs’ unique requirements, such as distributed training, model compression, and efficient inference at scale.
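Model compression for efficient LLM inference often starts with quantization, that is, storing weights in fewer bits. The sketch below shows the simplest variant, symmetric per-tensor int8 quantization, where each float is mapped to an integer in [-127, 127] using one shared scale factor, so each stored weight takes 1 byte instead of the 4 bytes of float32. It is an illustrative assumption, not how any particular LLM toolkit works: production schemes usually use per-channel or per-group scales, calibration data, and sometimes 4-bit formats.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights for inference or error checks."""
    return [v * scale for v in q]


# Hypothetical weight tensor.
weights = [0.5, -1.27, 0.0, 1.27, 0.033]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"max error {max_error:.4f}")
```

Rounding to the nearest integer bounds the per-weight error by half the scale, which is why a single large outlier weight (which inflates the scale) hurts accuracy for the whole tensor.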

Edge MLOps:

- Prediction: The proliferation of edge computing and Internet of Things (IoT) devices will drive the need for MLOps at the edge.

- Impact: MLOps will need to support the deployment, monitoring, and updating of machine learning models on resource-constrained edge devices, enabling real-time intelligence and decision-making.
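Updating models on edge devices usually means downloading a new artifact over an unreliable network, so a device should check that an update is both newer and intact before switching to it. The sketch below assumes a hypothetical manifest carrying a semantic version and a SHA-256 checksum; the class and function names are illustrative. A real fleet would typically also verify a cryptographic signature, roll updates out in stages, and keep the previous model for rollback.

```python
import hashlib
from dataclasses import dataclass
from typing import Tuple

Version = Tuple[int, int, int]


@dataclass(frozen=True)
class ModelRelease:
    """Hypothetical update manifest published alongside a model artifact."""
    version: Version
    sha256: str


def should_install(current: Version, release: ModelRelease, artifact: bytes) -> bool:
    """Install an over-the-air model update only if it is newer and arrived intact."""
    if release.version <= current:
        return False  # already up to date, or an accidental downgrade
    return hashlib.sha256(artifact).hexdigest() == release.sha256


blob = b"model-weights-v1.2.0"  # hypothetical downloaded artifact
release = ModelRelease(version=(1, 2, 0), sha256=hashlib.sha256(blob).hexdigest())
print(should_install((1, 1, 0), release, blob))            # True
print(should_install((1, 1, 0), release, blob + b"\x00"))  # False: checksum mismatch
print(should_install((1, 2, 0), release, blob))            # False: not newer
```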

The MLOps market is projected to experience significant growth in the coming years. According to a report by MarketsandMarkets, the MLOps market size is projected to grow from USD 1.1 billion in 2022 to USD 5.9 billion by 2027, at a CAGR of 41.0% during the forecast period.
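The growth rate in this projection can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. Plugging in the rounded figures quoted above gives roughly 39.9% per year, close to the reported 41.0%; the small gap plausibly reflects rounding in the published dollar amounts, though this check cannot confirm the report's exact inputs.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


# Market-size figures quoted above, in USD billions (2022 -> 2027).
implied = cagr(start=1.1, end=5.9, years=2027 - 2022)
print(f"{implied:.1%}")  # 39.9%
```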

As machine learning adoption accelerates across industries, the demand for MLOps solutions and expertise will continue to rise. Organizations that invest in building robust MLOps capabilities will be well-positioned to realize machine learning’s full potential, driving innovation, efficiency, and competitive advantage.

Conclusion

Throughout this comprehensive article, we have explored the fascinating world of MLOps, from its origins and core principles to its practical applications and future directions. We have seen how MLOps transforms the way organizations develop, deploy, and operate machine learning models, enabling them to scale their AI initiatives with greater efficiency, reliability, and impact.

At its core, MLOps is about bringing the principles of DevOps and software engineering to the unique challenges of machine learning. By adopting practices such as automation, continuous integration and delivery, and monitoring, MLOps enables organizations to streamline the end-to-end machine learning lifecycle, from data preparation and model training to deployment and maintenance.

Through real-world case studies and practical guidance, we have seen how MLOps can be applied across various industries and use cases, from fraud detection in finance to predictive maintenance in manufacturing. We have explored the key components of an MLOps pipeline, including data management, model development, deployment, and monitoring, and discussed best practices for ensuring the reliability, scalability, and performance of machine learning workflows.

As we look to the future, it is clear that MLOps will play an increasingly critical role in the success of AI initiatives. With the rapid advancements in technologies such as automated machine learning, edge computing, and large language models, the need for robust and scalable MLOps practices will only continue to grow.

For organizations seeking to harness machine learning’s transformative potential, investing in MLOps capabilities is no longer optional—it is a strategic imperative. By building a strong foundation in MLOps, organizations can accelerate their AI journey, reduce risks and costs, and unlock the full value of their data assets.

For individuals, the field of MLOps presents a wealth of exciting opportunities for growth and impact. Whether you are a data scientist looking to expand your skills, a software engineer eager to apply your expertise to machine learning, or a business leader seeking to drive innovation through AI, MLOps offers a path to make a meaningful difference.

To succeed in this dynamic and fast-paced field, it is essential to stay curious, continuously learn, and actively engage with the MLOps community. By leveraging the wealth of resources, best practices, and expertise available, you can position yourself at the forefront of this transformative discipline and help shape the future of AI.

So, whether you are just starting your MLOps journey or looking to take your skills to the next level, we encourage you to embrace the opportunities and challenges ahead. The future of MLOps is bright, and with dedication, collaboration, and a commitment to excellence, we can unlock the full potential of machine learning to drive innovation, efficiency, and positive impact for organizations and society as a whole.
