What Does a Modern Data Stack Actually Look Like?

Rahul Rastogi

Sep 4, 2025

Data is no longer just a byproduct of business activity — it is the business. The organizations that win today are the ones that can translate data into decisions faster than their competitors.

Yet, despite its importance, nearly 70% of all enterprise data never gets converted into insights or business value. This means lost revenue, missed opportunities, and delayed innovation.

With more than 400 million terabytes of data generated every day, the pressure on organizations to harness data effectively has never been greater.

For data leaders, this shift is a wake-up call.

Legacy systems are unable to handle the speed, complexity, and scale of modern data. The answer is not another patchwork of tools, but a new foundation: the modern data stack, which is cloud-native, flexible, and built to transform raw information into reliable, actionable insights at scale.

In this blog, we’ll break down what a modern data stack looks like, explain how its components work together, and share best practices to help you design one that delivers long-term value.

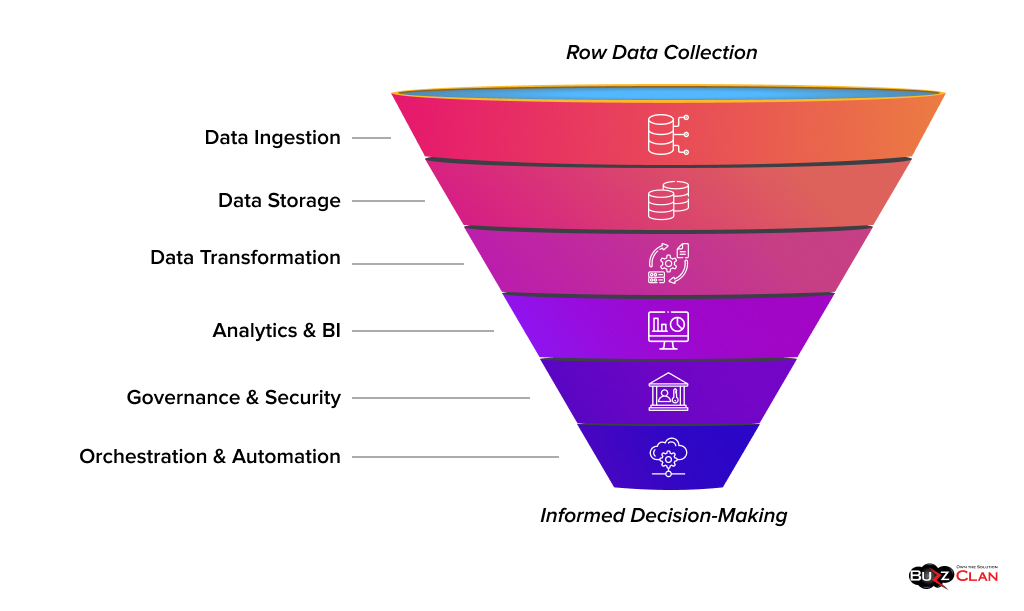

Defining the Modern Data Stack

The term modern data stack (MDS) gets thrown around a lot. But at its core, it’s simply a cloud-native collection of tools and technologies that manage the entire data journey — from collection and ingestion to storage, transformation, analysis, and governance.

As the name suggests, a “stack” layers these capabilities to ensure organizations can maintain data quality and unlock the full value of their information.

Legacy data stacks, built on rigid, on-premises infrastructure, often struggle with scalability, flexibility, and real-time demands. In contrast, the MDS offers a modular, cloud-first approach designed to streamline automation, reduce costs, and accelerate insights.

Just as importantly, it empowers modern use cases such as self-service analytics and AI-driven applications that businesses increasingly rely on.

Think of the modern data stack as the assembly line of digital operations: each component plays a role in moving data seamlessly from its source to analysis. By automating and scaling these workflows, the MDS ensures data is processed, governed, and put to work with precision, driving better decision-making and innovation.

The primary functions of the MDS include:

- Ingestion: Moving data from multiple sources into pipelines for downstream use.

- Storage: Consolidating data in cloud data warehouses, data lakes, or hybrid lakehouses.

- Transformation: Cleaning, normalizing, and aggregating raw data into structured, usable formats.

- Business Intelligence and Analytics: Powering reporting, visualization, and machine learning models with trusted data.

- Data Observability and Governance: Monitoring pipelines, ensuring quality and availability, and maintaining compliance.

Core Components of a Modern Data Stack

A modern data stack isn’t a single product. It’s a combination of layers that work together to turn raw data into actionable insights. Each layer has its own purpose, and the real power comes from how well they connect.

Let’s look at each layer in turn:

Data Sources & Ingestion

This is where data enters the stack, whether from transactional systems, SaaS platforms, IoT devices, or real-time event streams. Modern stacks use automated connectors and pipelines to bring this data in quickly and accurately.

- Benefits: Reduces manual ETL work, supports both batch and streaming, and enables near real-time updates.

- Tools That Enable Ingestion: Azure Data Factory, Fivetran, Airbyte. These platforms provide ready-made connectors and pipelines to move data from diverse sources into your environment quickly and reliably.
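
To make the idea of automated, near real-time ingestion more concrete, here is a minimal sketch of one common pattern: incremental loading with a high-watermark, where each run pulls only rows changed since the previous run. The `IncrementalLoader` class, the `updated_at` field, and the sample rows are hypothetical and chosen for illustration; this is not how any specific connector above is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalLoader:
    """Hypothetical loader that pulls only rows newer than the last seen `updated_at`."""
    watermark: str = ""  # ISO-8601 timestamps in one format compare correctly as strings
    landed: list = field(default_factory=list)

    def run(self, source_rows):
        # Keep rows strictly newer than the stored watermark.
        new_rows = [r for r in source_rows if r["updated_at"] > self.watermark]
        self.landed.extend(new_rows)
        if new_rows:
            # Advance the watermark to the newest row just landed.
            self.watermark = max(r["updated_at"] for r in new_rows)
        return len(new_rows)


# Illustrative source data (assumed, not from a real system).
source = [
    {"id": 1, "updated_at": "2025-09-01T10:00:00"},
    {"id": 2, "updated_at": "2025-09-01T11:00:00"},
]
loader = IncrementalLoader()
first = loader.run(source)       # both rows are new on the first run
source.append({"id": 3, "updated_at": "2025-09-01T12:00:00"})
second = loader.run(source)      # only the appended row is picked up
```

Running the loader on a short schedule is one way to approximate near real-time updates with batch tooling. True streaming would replace the polling loop with an event consumer.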

Data Storage Layer

Once ingested, data needs a secure, scalable place to live. Modern data stacks rely on cloud-native data warehouses and data lakes that separate storage from compute, making it easier to scale cost-effectively.

- Benefits: Handles large volumes cost-efficiently, supports structured and semi-structured formats, and provides centralized access for downstream analytics.

- Tools That Enable Storage & Warehousing: Snowflake, Google BigQuery, Amazon Redshift. These platforms are designed for elastic scaling, strong security, and query performance across diverse data sets.

Transformation Layer

Raw data is rarely ready for analysis. Transformation involves cleaning, structuring, and enriching data so it can be trusted and queried effectively. In a modern data stack, this happens inside cloud warehouses using an ELT model — data is loaded first, then transformed through modular, SQL-driven pipelines that are version-controlled and automated.

- Benefits: Greater flexibility for changing business logic, faster load times, and reduced latency.

- Tools That Enable Transformation: dbt, Apache Spark, Matillion. They bring engineering rigor to transformation with testing, automation, and scalability.
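
The ELT flow described above can be sketched in a few lines. This example assumes an in-memory SQLite database as a stand-in for a cloud warehouse, and the table names `raw_orders` and `customer_revenue` are hypothetical. Raw rows are loaded untouched first, then cleaned and aggregated with SQL inside the database.

```python
import sqlite3

# In-memory SQLite stands in for a cloud warehouse (an assumption for this sketch).
conn = sqlite3.connect(":memory:")

# Load: land the raw rows as-is, including messy values.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [
        ("1", " Alice ", "10.50"),
        ("2", "bob", "4.00"),
        ("3", "ALICE", "not_a_number"),  # bad amount, filtered out during transform
        ("4", "Bob", "6.00"),
    ],
)

# Transform: clean, normalize, and aggregate with SQL after loading.
conn.execute(
    """
    CREATE TABLE customer_revenue AS
    SELECT LOWER(TRIM(customer)) AS customer,
           SUM(CAST(amount AS REAL)) AS revenue
    FROM raw_orders
    WHERE amount GLOB '[0-9]*.[0-9]*'   -- keep only amounts shaped like 12.34
    GROUP BY LOWER(TRIM(customer))
    """
)

result = conn.execute(
    "SELECT customer, revenue FROM customer_revenue ORDER BY customer"
).fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
```

Because the raw table is left intact, the business logic in the `SELECT` can change later and the derived table can simply be rebuilt. That is the flexibility ELT is meant to provide.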

Analytics & BI Layer

This is where business value becomes visible. In a modern data stack, analytics tools sit directly on top of the warehouse, eliminating the need for separate reporting databases. They connect to fresh, transformed data and provide governed self-service — giving business users freedom to explore while maintaining consistency and security.

- Benefits: Democratized access to insights, faster decision-making, and alignment on a single source of truth.

- Tools That Enable Analytics & BI: Power BI, Tableau, Looker. These platforms are designed to deliver interactive dashboards, self-service exploration, and embedded analytics at scale.

Governance & Security

In a modern data stack, data governance and security are built into the fabric of the platform rather than added as an afterthought. Centralized policies define who can access what data, while automated controls enforce compliance with regulations like HIPAA, GDPR, and SOX. Metadata catalogs, data lineage, and role-based access ensure that data is both trustworthy and protected.

- Benefits: Protects sensitive information, meets compliance standards (HIPAA, GDPR), and builds trust in the data.

- Tools That Enable Governance & Security: Microsoft Purview, Alation, Collibra. These platforms provide centralized governance frameworks, automated policy enforcement, and visibility into how data is used across the organization.
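
As an illustration of centralized, role-based access, the sketch below applies one policy table to every read and masks any column a role is not allowed to see. The `POLICY` mapping, the role names, and the `read_row` helper are hypothetical. Governance platforms express policies in their own ways, so treat this only as a picture of the idea.

```python
# Hypothetical central policy: columns each role may read in the clear.
POLICY = {
    "analyst": {"order_id", "region", "amount"},
    "support": {"order_id", "email"},
}


def read_row(row, role):
    """Return a copy of `row` with columns outside the role's policy masked."""
    allowed = POLICY.get(role, set())  # unknown roles are allowed nothing
    return {col: (val if col in allowed else "***") for col, val in row.items()}


# Illustrative record (assumed data).
row = {"order_id": 7, "email": "a@example.com", "region": "EU", "amount": 42.0}
analyst_view = read_row(row, "analyst")  # email is masked
guest_view = read_row(row, "guest")      # every column is masked
```

Defaulting unknown roles to an empty set means access is denied unless a policy grants it, which tends to be the safer choice for regulated data.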

Orchestration & Automation

Modern data stacks rely on orchestration tools to coordinate complex pipelines across ingestion, transformation, and analytics layers. These platforms go beyond simple scheduling — they enable dependency management, observability, and event-driven workflows that adapt to business needs in real time. Automated alerts and retries keep data flowing reliably without constant manual oversight.

- Benefits: Reduces downtime, ensures data arrives on time, and minimizes manual intervention.

- Tools That Enable Orchestration & Automation: Apache Airflow, Prefect, Dagster. These frameworks manage scheduling, execution, and monitoring of pipelines, making large-scale data workflows reliable and auditable.
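
Dependency management and automated retries can be sketched with the Python standard library alone. This is not the API of Airflow, Prefect, or Dagster. The `run_pipeline` function, the task names, and the simulated failure are assumptions made for illustration.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, deps, max_retries=2):
    """Run tasks in dependency order, retrying a failed task up to max_retries times."""
    order = list(TopologicalSorter(deps).static_order())
    log = []
    for name in order:
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                log.append((name, attempt))  # record which attempt succeeded
                break
            except RuntimeError:
                if attempt == max_retries + 1:
                    raise  # retries exhausted, surface the failure
    return log


# Simulated transient failure: the transform step fails once, then succeeds.
attempts = {"count": 0}


def flaky_transform():
    attempts["count"] += 1
    if attempts["count"] < 2:
        raise RuntimeError("transient warehouse error")


tasks = {"ingest": lambda: None, "transform": flaky_transform, "report": lambda: None}
# Each key lists the tasks it depends on.
deps = {"transform": {"ingest"}, "report": {"transform"}}
log = run_pipeline(tasks, deps)
```

Real orchestrators add scheduling, backoff between retries, alerting, and run history on top of this core loop.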


The Blueprint for Building a Sustainable Modern Data Stack

A modern data stack is only as strong as its ability to adapt. As data volumes and regulations evolve, designing for scalability and resilience from the outset ensures your stack will not just meet today’s needs but keep delivering as those needs change.

Key considerations for a future-ready stack:

- Scalability: Select platforms that can handle rapid growth in data size and usage without performance degradation. Cloud-native solutions with auto-scaling help keep workloads efficient and match spend to actual demand.

- Interoperability: Prioritize tools with strong APIs and pre-built connectors. This avoids the pain of vendor lock-in, eases integration with emerging technologies, and supports seamless data flow across systems.

- Cost Efficiency: Continuously monitor consumption and optimize storage tiers to control costs and prevent waste. Usage-based pricing models keep costs transparent and align expenses with actual business value.

- Security & Compliance: Build in governance from the ground up. Features like encryption, audit logging, and access controls are essential, especially in regulated industries where compliance failures can be costly.

- Automation: Use orchestration to reduce manual intervention. Automated workflows keep pipelines running and identify issues before they escalate.

At BuzzClan, we’ve seen these principles come to life. For example, in a recent project with a leading financial services firm, we integrated Azure Data Factory with Databricks to create a scalable, cloud-first architecture. The solution not only enabled real-time analytics for leadership teams but also streamlined complex data challenges—ultimately giving the business deeper, faster insights into its operations.


Common Pitfalls to Avoid

Building a modern data stack isn’t just about choosing the right tools—it’s about understanding the subtle architectural decisions that separate successful implementations from expensive failures. After architecting stacks for Fortune 500 companies, we’ve seen patterns that consistently derail even well-funded projects.

Not Planning for Change

Business needs and data sources don’t stay the same. If your stack isn’t built to handle changes such as new fields, formats, or metrics, you’ll face constant breakages and rework. Planning for flexibility from the start helps avoid delays and complications later.
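
One way to plan for new fields is to let the loading step extend the schema instead of failing. The sketch below is a minimal illustration under that assumption. The `absorb_schema_changes` helper and the sample records are hypothetical.

```python
def absorb_schema_changes(known_columns, records):
    """Add unseen fields to the schema and pad missing values with None."""
    columns = list(known_columns)
    for record in records:
        for key in record:
            if key not in columns:
                columns.append(key)  # new upstream field: extend the schema, do not fail
    # Every row is emitted with the full, possibly widened, column set.
    rows = [{col: record.get(col) for col in columns} for record in records]
    return columns, rows


# The second record carries a field the pipeline has not seen before.
columns, rows = absorb_schema_changes(
    ["id", "amount"],
    [{"id": 1, "amount": 9.5}, {"id": 2, "amount": 3.0, "currency": "EUR"}],
)
```

In practice a team would probably also log or alert on each newly discovered column so that the change gets reviewed and not silently accepted.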

Losing Track of Historical Data

Business leaders often need to revisit historical data with new definitions or updated KPIs—whether due to evolving compliance requirements or shifts in strategy. Without the ability to reprocess this history, reports become inconsistent, and the organization risks losing trust in its data-driven decisions.
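
Reprocessing depends on keeping raw history and treating each KPI as a function of it. The sketch below recomputes an "active users" metric under an old and a new definition. The events, the `active_users` function, and both thresholds are invented for illustration.

```python
# Retained raw history (assumed sample data).
RAW_EVENTS = [
    {"month": "2025-01", "user": "u1", "sessions": 1},
    {"month": "2025-01", "user": "u2", "sessions": 5},
    {"month": "2025-02", "user": "u1", "sessions": 4},
    {"month": "2025-02", "user": "u2", "sessions": 3},
    {"month": "2025-02", "user": "u3", "sessions": 1},
]


def active_users(events, min_sessions):
    """Count distinct users per month with at least `min_sessions` sessions."""
    users_by_month = {}
    for event in events:
        if event["sessions"] >= min_sessions:
            users_by_month.setdefault(event["month"], set()).add(event["user"])
    return {month: len(users) for month, users in sorted(users_by_month.items())}


old_kpi = active_users(RAW_EVENTS, min_sessions=1)  # original definition
new_kpi = active_users(RAW_EVENTS, min_sessions=3)  # revised definition, full history
```

Because both figures are derived from the same raw events, every month is restated consistently when the definition changes, so old and new numbers are never mixed in one report.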

No Clear Data Ownership

When multiple teams modify data without alignment, dashboards break and trust declines. Defining ownership ensures accountability and reliability.

Overlooking Cost Control

Cloud costs can escalate quickly. Without careful monitoring and optimization, expenses may outpace the value being created. Setting budgets, tracking usage regularly, and optimizing storage and compute resources ensure costs stay aligned with business impact.
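
A budget check of the kind described here can be very small. The sketch below flags teams whose spend has reached a warning threshold. The team names, amounts, and the 80% threshold are made-up values, and `over_budget` is a hypothetical helper, not a feature of any cloud provider.

```python
def over_budget(usage_by_team, budgets, warn_ratio=0.8):
    """Return teams whose spend has reached `warn_ratio` of budget, with the ratio."""
    alerts = {}
    for team, spent in usage_by_team.items():
        budget = budgets.get(team)
        # Teams without a budget entry are skipped in this sketch.
        if budget and spent / budget >= warn_ratio:
            alerts[team] = round(spent / budget, 2)
    return alerts


# Illustrative month-to-date spend and budgets (assumed figures).
usage = {"marketing": 450.0, "finance": 980.0, "ml": 1300.0}
budgets = {"marketing": 1000.0, "finance": 1000.0, "ml": 1200.0}
alerts = over_budget(usage, budgets)
```

Fed with real usage exports on a regular schedule, a check like this gives early warning before spend drifts away from the value being delivered.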

Underestimating Change Management

New platforms don’t just replace technology — they change how people work. If teams aren’t trained and leaders don’t secure buy-in, resistance builds quickly. The result is underused tools, frustrated employees, and missed opportunities to realize the full value of the investment. Successful modernization requires as much focus on people and processes as on the technology itself.

Avoid these pitfalls by working with experts who’ve built resilient, scalable stacks across industries.

Explore BuzzClan’s Data Engineering Services and ensure your stack is built for long-term success.

Conclusion

The modern data stack is more than just a collection of tools. It’s the backbone of a fast, reliable, and scalable analytics strategy. When each layer works together, organizations can move from raw data to actionable insights in minutes, not days.

Designing it right means planning for growth, ensuring strong governance, and selecting tools that align with your long-term vision. When done well, it can reduce costs, expedite decision-making, and pave the way for real-time analytics.

You’ve seen how a modern data stack transforms speed and accuracy. Now it’s your turn.

Let’s design yours so you can start turning data into results—faster than ever. Talk to our experts.
