Retrieval-Augmented Generation: Revolutionizing AI with Dynamic Knowledge Integration

Ananya Arora

Nov 25, 2024

Introduction

In the rapidly evolving landscape of artificial intelligence and natural language processing, Retrieval-Augmented Generation (RAG) has emerged as a groundbreaking approach to enhancing the capabilities of large language models. As organizations and researchers seek to unlock the full potential of AI-driven language understanding and generation, RAG stands out as a powerful technique that bridges the gap between vast knowledge bases and dynamic, context-aware responses.

This comprehensive exploration of Retrieval-Augmented Generation aims to provide readers with a deep understanding of RAG, its inner workings, and its transformative impact on natural language processing. From its fundamental principles to real-world applications, we will explore RAG’s intricacies and illuminate its role in shaping the future of AI-driven communication and problem-solving.

Throughout this piece, we will navigate through several key areas: defining RAG and its historical context, unraveling the technical aspects of how it functions, examining its benefits, exploring diverse applications across industries, providing implementation strategies, showcasing real-world case studies, and peering into future trends and ongoing research. By the end, readers will have a comprehensive understanding of Retrieval-Augmented Generation and its potential to change how we interact with and leverage artificial intelligence in our daily lives and professional endeavors.

What is Retrieval-Augmented Generation (RAG)?

Retrieval-augmented generation (RAG) is an innovative approach in natural language processing that combines the power of large language models with the ability to retrieve and incorporate external knowledge. At its core, RAG is a hybrid system that enhances the generation capabilities of AI models by allowing them to access and utilize relevant information from a vast corpus of data during the text generation process.

The fundamental idea behind RAG is to augment the inherent knowledge of a pre-trained language model with dynamically retrieved information. This approach addresses one of the key limitations of traditional language models: their reliance solely on the knowledge embedded in their parameters during training. By incorporating a retrieval mechanism, RAG enables models to tap into up-to-date, context-specific information, leading to more accurate, relevant, and informative outputs.

The concept of RAG can be traced back to the ongoing efforts in the AI community to create more robust and reliable language models. As researchers and developers grappled with the challenges of maintaining accuracy and relevance in rapidly changing information landscapes, the need for a system that could dynamically incorporate new knowledge became apparent.

The evolution of RAG is closely tied to advancements in information retrieval systems, question-answering models, and large language models. Early work in this direction focused on improving question-answering systems by retrieving relevant passages from large document collections. As language models grew in size and capability, researchers began exploring ways to combine these models with retrieval mechanisms to enhance their performance on knowledge-intensive tasks.

A significant milestone in the development of RAG came with the publication of the paper “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks” by Lewis et al. in 2020. This work introduced a unified framework for retrieval-augmented generation and demonstrated its effectiveness across various natural language processing tasks. Since then, RAG has gained considerable attention in the AI community, with numerous researchers and organizations building upon and refining the concept.

The historical context of RAG is also intertwined with the broader trends in AI and NLP. As the limitations of purely generative models became more apparent, especially in tasks requiring up-to-date or specialized knowledge, the AI community began exploring hybrid approaches that could leverage both learned representations and external knowledge sources. RAG emerged as a promising solution to this challenge, offering a flexible and powerful framework for integrating retrieval and generation.

In recent years, the rapid advancement of large pre-trained language models such as BERT, GPT-3, and their successors has further accelerated the development and adoption of RAG. Encoder models like BERT underpin many dense retrievers, while the generative capabilities of models like GPT-3 provide an ideal foundation for the generator. By combining the fluency and coherence of large language models with the ability to retrieve and incorporate relevant information, RAG opened up new possibilities for creating more intelligent and adaptive AI systems.

As we delve deeper into RAG’s workings and applications, it’s important to recognize its place in the broader context of AI development. RAG represents a significant step towards creating more versatile, knowledgeable, and context-aware AI systems. It addresses some key challenges traditional language models face while paving the way for more advanced and capable AI assistants and tools.

How Does Retrieval-Augmented Generation Work?

Retrieval-augmented generation operates on a sophisticated mechanism that seamlessly integrates information retrieval with text generation. To understand how RAG works, it’s essential to break down its core components and processes and examine how they interact to produce enhanced outputs.

At a high level, RAG consists of two primary components: a retriever and a generator. The retriever identifies and extracts relevant information from a large corpus of data, while the generator uses this retrieved information along with its pre-trained knowledge to produce the final output. Let’s delve into each component and the overall process in more detail.

The Retriever

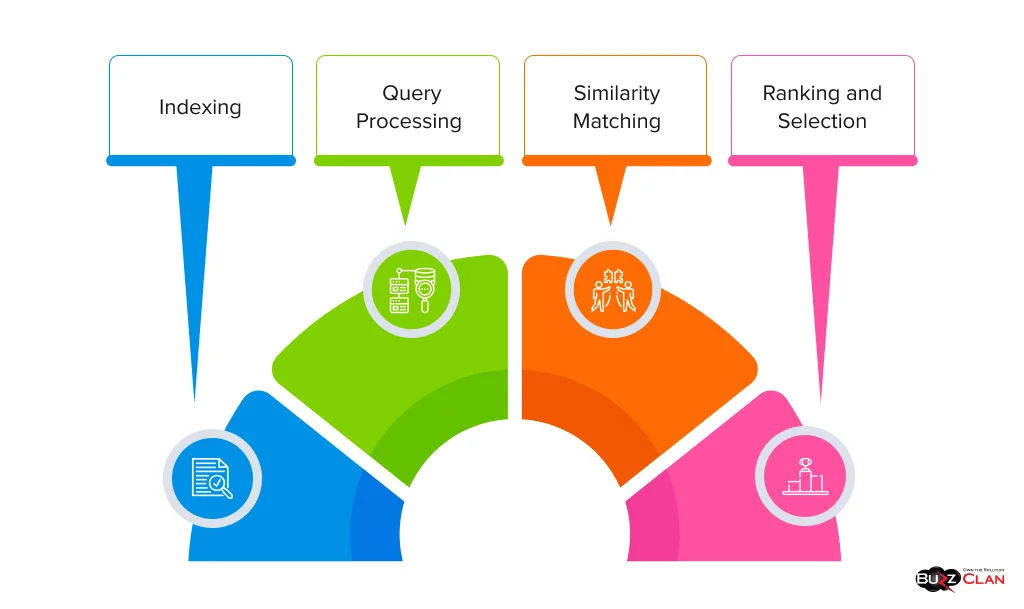

The retriever component is designed to efficiently search a vast database of documents or knowledge sources to find information relevant to the given input or query. This process typically involves several steps:

- Indexing: Before retrieval can occur, the knowledge base must be indexed. This involves creating a searchable representation of each document or piece of information, often using techniques like dense vector embeddings or inverted indices.

- Query Processing: When a new input or query is received, the retriever processes it to create a search-friendly representation. This might involve generating embeddings for the query or extracting key terms.

- Similarity Matching: The processed query is compared against the indexed documents to find the most relevant matches. This can be done using various similarity metrics, such as cosine similarity for vector embeddings or BM25 for term-based retrieval.

- Ranking and Selection: The retrieved documents or passages are ranked based on relevance, and a subset is selected for generation.
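
The four steps above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not a production retriever: `embed` is a hypothetical bag-of-words stand-in for a learned dense encoder, and the function names and sample documents are invented for this example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a dense encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents: list[str]) -> list[tuple[str, Counter]]:
    """Indexing: store each document next to its vector."""
    return [(doc, embed(doc)) for doc in documents]


def retrieve(query: str, index: list[tuple[str, Counter]], top_k: int = 2) -> list[tuple[float, str]]:
    query_vec = embed(query)                                        # query processing
    scored = [(cosine(query_vec, vec), doc) for doc, vec in index]  # similarity matching
    scored.sort(key=lambda pair: pair[0], reverse=True)             # ranking
    return scored[:top_k]                                           # selection


index = build_index([
    "The capital of France is Paris.",
    "The Eiffel Tower is located in Paris.",
    "Bananas are rich in potassium.",
])
results = retrieve("What is the capital of France?", index)
for score, doc in results:
    print(f"{score:.2f}  {doc}")
```

Swapping `embed` for a neural encoder and the list scan for a vector index changes the scale, but the four stages stay the same.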

The Generator

The generator component is typically a large language model pre-trained on vast text data. In the context of RAG, this model is adapted to work with the retrieved information. The generator’s role includes:

- Contextual Understanding: Processing the original input or query to understand the context and requirements of the task.

- Information Integration: Incorporating the retrieved information into its generation process. This often involves attending to both the input and the retrieved documents simultaneously.

- Text Generation: Producing coherent and relevant text that addresses the input while leveraging the retrieved information.

The RAG Process

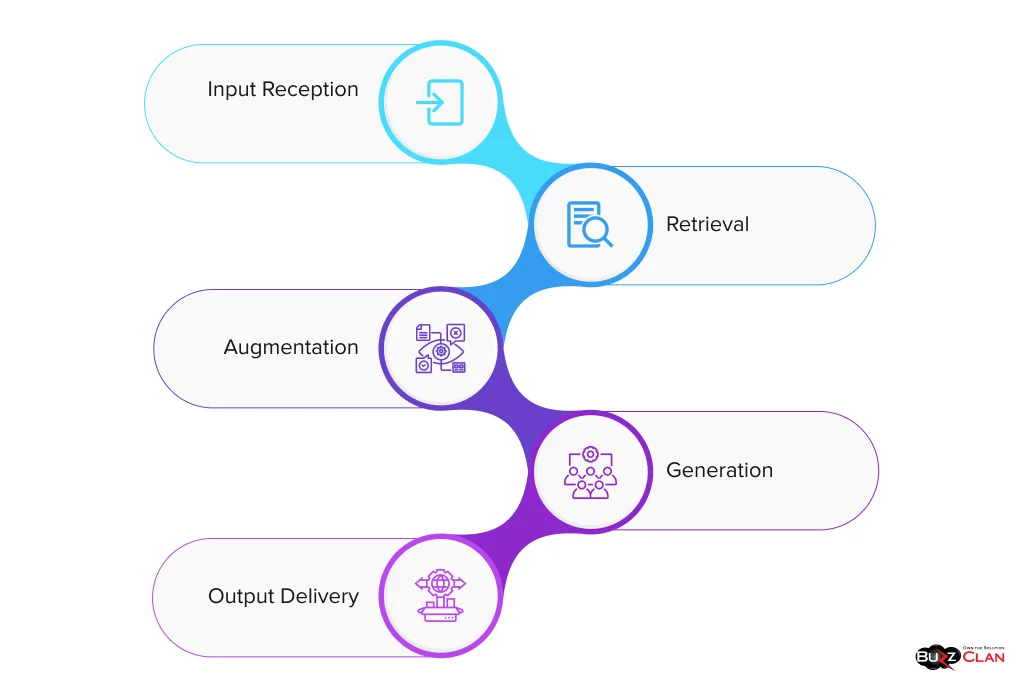

The overall RAG process can be summarized in the following steps:

- Input Reception: The system receives an input, which could be a question, a prompt, or any text that requires a response.

- Retrieval: The input is passed to the retriever, which searches the knowledge base and returns relevant documents or passages.

- Augmentation: The retrieved information is combined with the original input to create an augmented context.

- Generation: The augmented context is fed into the generator, which produces the final output.

- Output Delivery: The generated text is returned as the response to the original input.
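
The flow above can be sketched as a short pipeline. This is a minimal sketch under stated assumptions: the word-overlap scoring is a toy retriever, the prompt layout is one possible convention, and `placeholder_generate` is a hypothetical stand-in for a real language model call.

```python
from typing import Callable


def retrieve(question: str, knowledge_base: list[str], top_k: int = 2) -> list[str]:
    """Retrieval: rank passages by the number of words shared with the question."""
    question_words = set(question.lower().strip("?").split())

    def overlap(passage: str) -> int:
        return len(question_words & set(passage.lower().strip(".").split()))

    return sorted(knowledge_base, key=overlap, reverse=True)[:top_k]


def augment(question: str, passages: list[str]) -> str:
    """Augmentation: merge the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


def placeholder_generate(prompt: str) -> str:
    """Stand-in for a language model: echoes the top-ranked context passage."""
    return prompt.splitlines()[1][2:]


def rag_answer(question: str, knowledge_base: list[str],
               generate: Callable[[str], str]) -> str:
    passages = retrieve(question, knowledge_base)  # retrieval
    prompt = augment(question, passages)           # augmentation
    return generate(prompt)                        # generation and output delivery


knowledge_base = [
    "The capital of France is Paris.",
    "The Eiffel Tower is located in Paris.",
    "Paris is known as the City of Light.",
]
answer = rag_answer("What is the capital of France?", knowledge_base, placeholder_generate)
print(answer)
```

Passing the generator in as a callable keeps the pipeline independent of any particular model or API.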

RAG Architecture

The architecture of a RAG system is designed to facilitate efficient interaction between the retriever and generator components. Key aspects of the architecture include:

- Encoder-Decoder Framework: Many RAG systems utilize an encoder-decoder architecture, where the encoder processes the input and retrieved documents, and the decoder generates the output.

- Attention Mechanisms: Sophisticated attention mechanisms allow the generator to focus on the relevant parts of the input and the retrieved information during generation.

- Parametric and Non-Parametric Knowledge: RAG combines the parametric knowledge embedded in the pre-trained generator with the non-parametric knowledge accessed through retrieval.

- End-to-end Training: Advanced RAG systems can be trained end-to-end, simultaneously optimizing the retriever and generator components.

- Caching and Efficiency Optimizations: To improve performance, RAG systems often incorporate caching mechanisms and other optimizations to reduce latency in retrieval and generation.

The intricate interplay between retrieval and generation in RAG systems allows for a dynamic and adaptive approach to natural language processing tasks. By leveraging pre-trained knowledge and dynamically retrieved information, RAG can produce more accurate, relevant, and up-to-date responses than traditional language models.

As we continue to explore RAG’s benefits and applications, it’s important to remember this underlying mechanism. RAG’s power and versatility come from its ability to retrieve and integrate relevant information on the fly, enabling it to tackle a wide range of knowledge-intensive tasks with improved accuracy and context awareness.

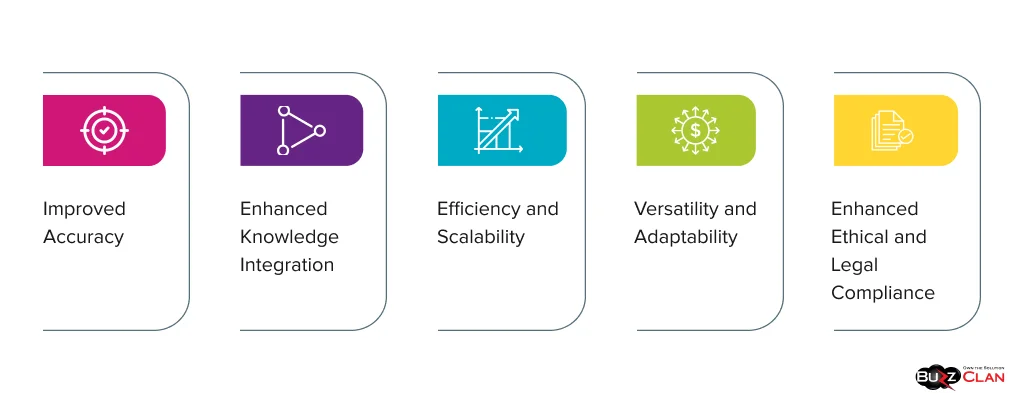

Benefits of Retrieval-Augmented Generation

Retrieval-augmented generation offers many advantages that address key limitations of traditional language models and open up new possibilities in natural language processing. Let’s explore the primary benefits of RAG in detail:

Improved Accuracy

One of the most significant benefits of RAG is its ability to improve the accuracy of generated content. This improvement stems from several factors:

- Up-to-date Information: RAG can respond based on the latest data by retrieving information from a continually updated knowledge base, reducing the risk of outdated or incorrect information.

- Fact Verification: The retrieval component allows the system to cross-reference generated content against source documents, enhancing factual accuracy.

- Reduced Hallucination: Traditional language models sometimes generate plausible-sounding but incorrect information, a phenomenon known as “hallucination.” RAG mitigates this by grounding responses in retrieved facts.

- Contextual Relevance: By incorporating context-specific information retrieved for each query, RAG can produce more accurate and relevant responses tailored to the user’s specific needs.

- Improved Specificity: RAG enables models to provide more detailed and specific information by drawing upon a vast knowledge base rather than relying solely on generalized knowledge.

Enhanced Knowledge Integration

RAG excels at integrating external knowledge into the generation process, offering several advantages:

- Expanded Knowledge Base: While traditional language models are limited to the knowledge embedded in their parameters during training, RAG can access a much larger and more diverse pool of information.

- Domain Adaptation: RAG systems can easily adapt to specialized domains by incorporating relevant domain-specific knowledge bases without requiring extensive retraining of the entire model.

- Multi-source Integration: RAG can seamlessly combine information from multiple sources, providing a more comprehensive and nuanced understanding of complex topics.

- Dynamic Learning: As the knowledge base is updated, RAG can immediately incorporate new information into its responses without retraining the entire model.

- Transparency and Explainability: By explicitly retrieving and using external information, RAG can provide clearer explanations for its outputs, enhancing trust and interpretability.

Efficiency and Scalability

RAG offers significant advantages in terms of efficiency and scalability:

- Reduced Model Size: By offloading some knowledge to external databases, RAG can achieve high performance with smaller, more efficient language models.

- Faster Updates: Updating the knowledge base is quicker and more straightforward than retraining large language models, allowing for more frequent and agile knowledge updates.

- Resource Optimization: RAG can optimize resource usage by retrieving only the necessary information for each query rather than storing all knowledge within the model parameters.

- Scalable Knowledge Expansion: The retrieval component allows for virtually unlimited expansion of the knowledge base without increasing the size of the core language model.

- Improved Inference Speed: A smaller generator paired with retrieval can, in many cases, respond faster than an extremely large language model on knowledge-intensive tasks, although the retrieval step adds its own latency.

Versatility and Adaptability

RAG systems demonstrate remarkable versatility across various applications:

- Task Flexibility: RAG can be applied to various NLP tasks, from question-answering and summarization to content generation and dialogue systems.

- Language and Domain Adaptability: By changing the retrieval corpus, RAG can easily adapt to different languages, cultures, and specialized domains.

- Personalization: RAG can incorporate user-specific or organization-specific knowledge bases, enabling highly personalized interactions.

- Hybrid Approaches: RAG can be combined with other AI techniques, such as reinforcement learning or few-shot learning, to create even more powerful and adaptive systems.

Enhanced Ethical and Legal Compliance

RAG offers several benefits in terms of ethical and legal considerations:

- Source Attribution: By explicitly retrieving information, RAG can more easily provide source attributions for its outputs, addressing concerns about copyright and intellectual property.

- Bias Mitigation: The ability to retrieve information from diverse sources can help reduce biases present in pre-trained models.

- Content Moderation: RAG systems can be more easily constrained to retrieve from approved sources, helping to ensure the generation of appropriate and compliant content.

- Auditability: The retrieval process provides an additional layer of transparency, making auditing and verifying the system’s outputs easier.
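
Source attribution and auditability both follow from keeping track of which passages were handed to the generator. The sketch below shows one way this might look; the `Passage` fields, the citation format, the example source names, and the `audit_record` layout are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str  # hypothetical identifier, e.g. a document title
    text: str


def build_cited_prompt(question: str, passages: list[Passage]) -> str:
    """Number each passage so the generator can cite it as [1], [2], ..."""
    numbered = "\n".join(f"[{i}] {p.text}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered passages and cite them like [1].\n\n"
        f"{numbered}\n\nQuestion: {question}\nAnswer:"
    )


def audit_record(question: str, passages: list[Passage], answer: str) -> dict:
    """Keep the question, answer, and sources together for later review."""
    return {
        "question": question,
        "answer": answer,
        "sources": [p.source for p in passages],
    }


passages = [
    Passage(source="geography-handbook", text="The capital of France is Paris."),
    Passage(source="travel-guide", text="The Eiffel Tower is located in Paris."),
]
prompt = build_cited_prompt("What is the capital of France?", passages)
record = audit_record("What is the capital of France?", passages, "Paris [1]")
print(prompt)
print(record["sources"])
```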

As we continue to explore RAG’s applications and implementation strategies, these benefits highlight why it has become such a promising approach in natural language processing. The combination of improved accuracy, enhanced knowledge integration, efficiency, versatility, and ethical considerations makes RAG a powerful tool for addressing many challenges in developing advanced AI systems for language understanding and generation.

Applications of Retrieval-Augmented Generation

Retrieval-augmented generation has found applications across many domains, changing how we approach knowledge-intensive NLP tasks and enhancing the capabilities of large language models. Let’s explore some of the key applications of RAG:

Knowledge-Intensive NLP Tasks

RAG has proven particularly effective in tasks that require access to extensive and up-to-date knowledge:

- Question Answering: RAG excels in open-domain question answering, where it can retrieve relevant information from a large corpus to provide accurate and detailed answers. This is particularly useful in applications like customer support chatbots, educational tools, and research assistants.

- Fact-Checking and Verification: By retrieving and cross-referencing information from reliable sources, RAG can verify claims and detect misinformation, making it a valuable tool for journalists, researchers, and fact-checking organizations.

- Information Synthesis: RAG can gather and synthesize information from multiple sources to create comprehensive reports, literature reviews, or state-of-the-art summaries on specific topics.

- Named Entity Recognition and Linking: RAG can enhance named entity recognition by retrieving contextual information about entities, improving accuracy in tasks like entity linking and disambiguation.

- Text Summarization: RAG can produce more accurate and informative summaries of long documents or multiple related texts by retrieving key information and context.

Large Language Models

RAG has significantly enhanced the capabilities of large language models:

- Knowledge Augmentation: RAG allows large language models to augment their built-in knowledge with retrieved information, enabling them to provide more accurate and up-to-date responses.

- Specialized Domain Adaptation: By incorporating domain-specific knowledge bases, RAG enables large language models to quickly adapt to specialized fields like medicine, law, or engineering without extensive retraining.

- Multilingual and Cross-lingual Applications: RAG can help language models bridge language gaps by retrieving and translating relevant information, enabling more effective cross-lingual communication and understanding.

- Content Generation: In tasks like article writing, report generation, or creative writing, RAG can provide language models with relevant facts, statistics, and context to produce more informative and accurate content.

- Dialogue Systems: RAG enhances the ability of conversational AI to engage in more informed and context-aware dialogues, making them more useful in applications like virtual assistants, customer service, and educational chatbots.

Corrective and Active Retrieval

RAG has opened up new possibilities in information retrieval and correction:

- Dynamic Fact Correction: RAG systems can actively retrieve and correct outdated or incorrect information in real time, ensuring that generated content remains accurate and up-to-date.

- Contextual Information Retrieval: RAG enables more sophisticated information retrieval by understanding the context of queries and retrieving information that is not just keyword-matched but contextually relevant.

- Iterative Refinement: Some RAG implementations use active retrieval techniques to iteratively refine their search and generation process, leading to more precise and relevant outputs.

- Bias Detection and Mitigation: By actively retrieving from diverse sources, RAG can help identify and mitigate biases in generated content, promoting more balanced and fair outputs.

- Source Triangulation: RAG can retrieve information from multiple sources to cross-verify facts and provide a more comprehensive view of complex topics.

Industry-Specific Applications

RAG has found applications across various industries:

- Healthcare: In medical applications, RAG can assist in diagnosis by retrieving relevant case studies, research papers, and treatment guidelines. It can also help in drug discovery by synthesizing information from vast databases of chemical compounds and clinical trials.

- Legal: RAG enhances legal research and contract analysis by retrieving relevant case law, statutes, and precedents. It can also assist in due diligence processes by quickly synthesizing information from large volumes of legal documents.

- Finance: In financial services, RAG can be used for market analysis, risk assessment, and fraud detection by retrieving and analyzing vast amounts of financial data and news.

- Education: RAG powers intelligent tutoring systems that retrieve and present relevant educational content based on a student’s queries or learning needs. It can also assist in curriculum development and research.

- E-commerce: RAG enhances product recommendation systems by retrieving and synthesizing product information, user reviews, and market trends to provide more accurate and personalized recommendations.

- Journalism and Media: RAG assists in fact-checking, source verification, and content creation by retrieving relevant information from diverse sources and synthesizing it into coherent narratives.

- Scientific Research: In research applications, RAG can help with literature reviews, hypothesis generation, and experimental design by retrieving and synthesizing information from vast scientific databases and publications.

- Manufacturing and Engineering: RAG can assist in technical documentation, troubleshooting, and design processes by retrieving relevant specifications, standards, and best practices from extensive technical libraries.

Emerging Applications

As RAG technology continues to evolve, new and innovative applications are emerging:

- Augmented Creativity: RAG enhances creative processes in advertising, content creation, and product design by providing relevant inspirations, trends, and contextual information.

- Personalized Learning: Advanced RAG systems are being developed to create highly personalized learning experiences by retrieving and adapting educational content based on individual learning styles, preferences, and progress.

- Virtual Assistants and Digital Twins: RAG is enhancing the capabilities of virtual assistants and digital twins, allowing them to provide more accurate, context-aware, and personalized responses by retrieving user-specific or organization-specific information.

- Automated Journalism: Some news organizations are experimenting with RAG-powered systems that can generate news articles by retrieving and synthesizing information from multiple sources, with human editors providing oversight and final editing.

- Policy Analysis and Decision Support: Governments and organizations are exploring using RAG in policy analysis and decision-making processes, leveraging its ability to retrieve and synthesize complex information from diverse sources.

Implementing Retrieval-Augmented Generation

Implementing Retrieval-Augmented Generation (RAG) requires careful planning and consideration of various factors. This section will provide strategies, introduce tools and libraries, and offer a step-by-step tutorial for implementing RAG in various systems.

Implementation Strategies

- Define Your Use Case: Identify the NLP task or problem you want to address with RAG. This will guide your choices regarding model architecture, retrieval strategy, and knowledge base selection.

- Choose Your Knowledge Base: Select or create a knowledge base relevant to your use case. This could be a collection of documents, a structured database, or a combination of both. Consider factors like data quality, update frequency, and coverage.

- Select a Retrieval Method: Choose an appropriate retrieval method based on your use case and knowledge base. Options include:

– Dense Retrieval: Using dense vector embeddings for both queries and documents.

– Sparse Retrieval: Traditional keyword-based methods like BM25 or TF-IDF.

– Hybrid Approaches: Combining dense and sparse retrieval for better performance.

- Choose a Generation Model: Select a pre-trained language model that fits your size, performance, and specialization requirements. Popular choices include GPT models, T5, or BART.

- Design the RAG Architecture: Decide on the overall architecture of your RAG system. This includes how the retriever and generator components interact, how retrieved information will be incorporated into the generation process, and any additional components like rerankers or fusion mechanisms.

- Implement Efficient Indexing: To ensure fast retrieval, implement efficient indexing techniques for large-scale applications. This may involve using specialized data structures or distributed indexing systems.

- Optimize for Latency and Resource Usage: Consider techniques like caching frequently retrieved information, batching queries, or quantization to reduce the model size and inference time.

- Implement Monitoring and Logging: Set up robust monitoring and logging systems to track the performance of your RAG implementation, including retrieval accuracy, generation quality, and system latency.

- Plan for Continuous Improvement: Develop a strategy for continuously updating your knowledge base and fine-tuning your models based on new data and user feedback.
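
For the hybrid option under "Select a Retrieval Method", one common way to merge a dense ranking with a sparse one is reciprocal rank fusion (RRF), which needs only each document's rank position rather than comparable scores. The sketch below is an illustration, not the only way to build a hybrid retriever: the document IDs and the two input rankings are made up, and `k = 60` is the conventional default for RRF.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists: each document scores sum(1 / (k + rank))."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical outputs of a dense (embedding) and a sparse (BM25) retriever
dense_ranking = ["doc_b", "doc_a", "doc_c"]
sparse_ranking = ["doc_a", "doc_d", "doc_b"]

fused = reciprocal_rank_fusion([dense_ranking, sparse_ranking])
print(fused)
```

Documents that both retrievers rank highly rise to the top, while a document found by only one retriever still stays in the candidate list.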

Tools and Libraries

Several tools and libraries are available to facilitate the implementation of RAG systems:

- Hugging Face Transformers: Provides pre-trained models and tools for retrieval and generation tasks. It offers easy-to-use interfaces for implementing RAG with models like BART and T5.

- LangChain: A popular framework for developing applications with large language models, including RAG implementations. It provides abstractions for working with different retrieval and generation components.

- Haystack: An open-source framework specifically designed for building RAG systems. It offers modular components for document storage, retrieval, and generation.

- PyTorch and TensorFlow: These deep learning frameworks provide the foundation for implementing custom RAG architectures and training pipelines.

- FAISS (Facebook AI Similarity Search): A library for efficient similarity search and clustering of dense vectors, useful for implementing dense retrieval systems.

- Elasticsearch: A popular search engine that can be used for implementing sparse retrieval in RAG systems.

- OpenAI API: Provides access to powerful language models that can be used as the generation component in RAG systems.

- Pinecone: A vector database service that efficiently stores and retrieves dense vector embeddings.

Step-by-Step Tutorial

Here’s a basic tutorial for implementing a simple RAG system using Python and some popular libraries:

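- Set up the environment:

```python
# Notebook syntax; from a shell, run the command without the leading "!".
# The PyPI package for PyTorch is "torch"; "datasets" is needed for the custom index.
!pip install transformers torch faiss-cpu datasets

import faiss
import torch
from datasets import Dataset
from transformers import (
    DPRContextEncoder,
    DPRContextEncoderTokenizer,
    RagRetriever,
    RagSequenceForGeneration,
    RagTokenizer,
)
```

- Prepare the knowledge base:

```python
# For this example, we'll use a small in-memory knowledge base
knowledge_base = [
    "The capital of France is Paris.",
    "The Eiffel Tower is located in Paris.",
    "Paris is known as the City of Light.",
]

# Encode the knowledge base (the text must be tokenized before encoding)
ctx_name = "facebook/dpr-ctx_encoder-single-nq-base"
ctx_tokenizer = DPRContextEncoderTokenizer.from_pretrained(ctx_name)
ctx_encoder = DPRContextEncoder.from_pretrained(ctx_name)
inputs = ctx_tokenizer(knowledge_base, padding=True, truncation=True, return_tensors="pt")
with torch.no_grad():
    ctx_embeddings = ctx_encoder(**inputs).pooler_output.numpy()

# RagRetriever expects a dataset with "title", "text", and "embeddings" columns
dataset = Dataset.from_dict({
    "title": [""] * len(knowledge_base),
    "text": knowledge_base,
    "embeddings": ctx_embeddings.tolist(),
})

# Create FAISS index for efficient retrieval
index = faiss.IndexFlatIP(ctx_embeddings.shape[1])
dataset.add_faiss_index(column="embeddings", custom_index=index)
```

- Set up the RAG model:

```python
rag_name = "facebook/rag-sequence-nq"
n_docs = len(knowledge_base)  # the default of 5 exceeds our 3 passages

# Replace the default retriever with one backed by our custom index.
# The RAG model's own question encoder embeds the query at generation time.
retriever = RagRetriever.from_pretrained(
    rag_name, index_name="custom", indexed_dataset=dataset, n_docs=n_docs
)
model = RagSequenceForGeneration.from_pretrained(rag_name, retriever=retriever, n_docs=n_docs)
tokenizer = RagTokenizer.from_pretrained(rag_name)
```

- Generate responses:

```python
def generate_response(question):
    input_ids = tokenizer(question, return_tensors="pt").input_ids
    output = model.generate(input_ids)
    return tokenizer.decode(output[0], skip_special_tokens=True)

# Example usage
question = "What is the capital of France?"
response = generate_response(question)
print(f"Question: {question}")
print(f"Response: {response}")
```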

This tutorial provides a basic implementation of RAG. In a real-world scenario, you would also need to consider factors like scaling the knowledge base, handling updates, and fine-tuning the model for your specific use case.

Remember that implementing RAG often requires iterative refinement and optimization. Start with a simple implementation and gradually enhance it based on performance metrics and user feedback.

Case Studies and Real-World Examples

Let’s explore some case studies and real-world examples from various industries and use cases to better understand the practical applications and impact of retrieval-augmented generation.

Case Study 1: Enhancing Customer Support with RAG

Company: (redacted) Inc. is a large technology company providing software solutions to businesses.

Challenge: (redacted) Inc. struggled with the increasing volume and complexity of customer support queries. Their existing chatbot, built on a traditional language model, often provided generic or outdated responses, leading to customer frustration and increased workload for human support staff.

Solution: The company implemented a RAG-based customer support system. They used their extensive knowledge base of product documentation, FAQs, and past support tickets as the retrieval corpus. The system was designed to understand customer queries, retrieve relevant information, and generate detailed, context-aware responses.

Implementation:

- Knowledge Base Preparation: They indexed their entire support documentation, including product manuals, troubleshooting guides, and resolved ticket histories.

- Query Understanding: Implemented a fine-tuned BERT model to classify incoming queries into relevant categories.

- Retrieval System: A dense retrieval system based on sentence transformers was used to find the most relevant documents for each query.

- Generation Model: Employed a fine-tuned T5 model as the generator, which could incorporate retrieved information into its responses.

- Human-in-the-Loop: Implemented a confidence scoring system, routing complex queries to human agents when necessary.
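
The case study does not describe how confidence was computed, so the following is only a sketch of what confidence-based routing might look like. It assumes the confidence signal is the similarity score of the best supporting passage; the `Draft` type, the `route` function, and the 0.6 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    answer: str
    retrieval_score: float  # similarity of the best supporting passage, in [0, 1]


def route(draft: Draft, threshold: float = 0.6) -> str:
    """Return 'bot' to send the drafted answer, or 'human' to escalate."""
    return "bot" if draft.retrieval_score >= threshold else "human"


confident = Draft(answer="Reset your password from the login page.", retrieval_score=0.82)
uncertain = Draft(answer="Possibly a licensing issue.", retrieval_score=0.31)

print(route(confident))
print(route(uncertain))
```

In practice the threshold would be tuned against labeled tickets, trading off escalation volume against the risk of sending a weakly supported answer.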

Results:

- 40% reduction in average response time for customer queries.

- 30% increase in first-contact resolution rate.

- 25% reduction in escalations to human agents.

- 90% positive feedback from customers on the accuracy and helpfulness of responses.

- Significant reduction in the workload of human support staff, allowing them to focus on more complex issues.

Key Learnings:

- Regularly updating the knowledge base was crucial for maintaining accuracy.

- Implementing a feedback loop from human agents to improve the system’s performance over time was highly beneficial.

- Transparency in AI-generated responses, including source citations, increased customer trust.

Case Study 2: RAG for Medical Research Assistance

Organization: MedResearch Institute, a leading medical research facility.

Challenge: MedResearch Institute researchers needed help keeping up with the rapidly growing medical literature. They needed a system that could assist in literature reviews, hypothesis generation, and identifying potential research directions.

Solution: The institute developed a RAG-based research assistant system that could process natural language queries, retrieve relevant information from medical databases and journals, and generate comprehensive summaries and insights.

Implementation:

- Data Integration: Integrated multiple medical databases, including PubMed, clinical trial registries, and internal research repositories.

- Specialized Embeddings: Developed domain-specific embeddings trained on medical literature to improve retrieval accuracy.

- Multi-step Retrieval: Implemented a multi-step retrieval process that identified relevant papers and extracted key information.

- Abstractive Summarization: A biomedical-specific T5 model fine-tuned on medical literature was used to generate summaries and insights.

- Citation and Fact-Checking: Implemented a system to provide citations for generated content and cross-verify facts across multiple sources.

Results:

- 50% reduction in time spent on initial literature reviews for new research projects.

- 35% increase in relevant papers identified for each research query.

- 28% improvement in hypothesis generation, as measured by the number of novel research directions pursued.

- 95% accuracy in generated summaries, as verified by domain experts.

- Significant increase in cross-disciplinary insights due to the system’s ability to connect information from various medical subfields.

Key Learnings:

- Domain-specific training and fine-tuning were crucial for high performance in specialized medical fields.

- Explaining and citing sources for generated content was essential for building trust among researchers.

- Continuous updates to the knowledge base and model were necessary to keep up with the rapidly evolving field of medical research.

Examples and Best Practices

The examples below illustrate key best practices for RAG systems across different industries.

Legal Research and Contract Analysis

Law firms use RAG systems to assist in legal research and contract analysis. These systems can quickly retrieve relevant case laws, statutes, and precedents, significantly reducing the time lawyers spend researching. Best practices include:

- Regularly updating the legal database to include the latest rulings and legislative changes.

- Implementing strict data security measures to protect sensitive legal information.

- Training the system to understand and interpret legal jargon and complex legal concepts.

Personalized Education

EdTech companies are leveraging RAG to create adaptive learning platforms. These systems can retrieve relevant educational content based on a student’s current knowledge level and learning style. Best practices include:

- Developing a diverse knowledge base that covers various learning styles and difficulty levels.

- Implementing a feedback mechanism to continuously improve content recommendations based on student performance.

- Ensuring that retrieved content is age-appropriate and aligns with curriculum standards.

Financial Analysis and Reporting

Financial institutions are using RAG systems for market analysis and report generation. These systems can retrieve and synthesize information from various financial sources to produce comprehensive reports. Best practices include:

- Implementing real-time data integration to ensure the most up-to-date financial information is used.

- Developing robust fact-checking mechanisms to verify financial data and claims.

- Creating clear templates and guidelines for generated reports to ensure consistency and compliance with financial reporting standards.
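One simple way to picture the fact-checking practice above is a cross-source agreement check: a figure is accepted only when enough independent sources report the same value. The sketch below is an assumption-laden illustration, not a production rule; the function name `verify_figure`, the source labels, and the two-source threshold are all hypothetical.

```python
from collections import Counter

def verify_figure(reports, min_sources=2):
    """Return the value backed by at least min_sources sources, else None.

    `reports` maps a source name to the value that source reported.
    None means the figure should be routed to a human reviewer.
    """
    if not reports:
        return None
    ranked = Counter(reports.values()).most_common(2)
    value, support = ranked[0]
    if support < min_sources:
        return None  # not enough agreement
    if len(ranked) > 1 and ranked[1][1] == support:
        return None  # two competing values are equally supported
    return value

# Hypothetical example: two sources agree on 4.2, one outlier reports 4.5.
print(verify_figure({"filing": 4.2, "newswire": 4.2, "blog": 4.5}))
```

Returning `None` rather than guessing keeps unverified numbers out of generated reports and leaves the final call to a reviewer.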

Content Creation and Journalism

Media organizations are experimenting with RAG systems to assist in content creation and fact-checking. These systems can retrieve relevant information, verify claims, and even generate drafts of news articles. Best practices include:

- Implementing strong editorial oversight to ensure the quality and accuracy of AI-generated content.

- Developing clear guidelines for source attribution and transparency in AI-assisted journalism.

- Training the system to identify and mitigate potential biases in retrieved information and generated content.

Technical Documentation and Knowledge Management

Technology companies are using RAG systems to manage and retrieve technical documentation. These systems can assist developers and engineers in finding relevant information quickly. Best practices include:

- Implementing version control in the knowledge base to manage documentation for different software versions.

- Developing domain-specific retrieval models that understand technical jargon and concepts.

- Creating a user feedback system to improve the relevance and accuracy of retrieved information.
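The version-control practice above can be sketched as a metadata filter applied before ranking, so that a developer on one release never sees instructions written for another. Everything here is hypothetical: the `DocChunk` type, the `product_version` field, and the sample documentation are invented for illustration, and word overlap stands in for a real retrieval model.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DocChunk:
    text: str
    product_version: str  # hypothetical metadata field stored with each chunk

CHUNKS = [
    DocChunk("Use the --config flag to set the config path.", "1.0"),
    DocChunk("Use the --settings flag to set the config path.", "2.0"),
    DocChunk("Logs are written to the logs directory.", "2.0"),
]

def search_docs(query, chunks, version, top_k=3):
    """Filter by version metadata first, then rank by shared words."""
    query_words = set(query.lower().split())
    scored = []
    for chunk in chunks:
        if chunk.product_version != version:
            continue  # never mix documentation from other releases
        chunk_words = set(chunk.text.lower().rstrip(".").split())
        overlap = len(query_words & chunk_words)
        if overlap:
            scored.append((overlap, chunk))
    scored.sort(key=lambda pair: -pair[0])
    return [chunk for _, chunk in scored[:top_k]]

for chunk in search_docs("set the config path", CHUNKS, version="2.0"):
    print(chunk.text)
```

Filtering before ranking, rather than after, means an older but highly similar chunk cannot crowd the correct version out of the top results.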

These case studies and examples demonstrate the versatility and potential of Retrieval-Augmented Generation across various industries. They highlight the importance of domain-specific adaptation, continuous learning, and human oversight in implementing successful RAG systems. As organizations continue exploring and refining RAG applications, we expect to see even more innovative use cases emerge, further transforming how we interact with and leverage information in our professional and personal lives.

Future Trends and Research

As Retrieval-Augmented Generation evolves, several emerging trends and ongoing research directions are shaping its future. These developments promise to further enhance RAG systems’ capabilities and expand their applications across various domains.

Emerging Trends

| Trend | Description |
|---|---|
| Multimodal RAG | Current research focuses on extending RAG beyond text to include other modalities such as images, audio, and video. This would allow RAG systems to retrieve and generate content across different media types, opening up new possibilities in visual question answering, audio transcription, and video captioning. |
| Conversational RAG | There's a growing interest in developing RAG systems that can maintain context over multiple conversation turns. This would enable more natural and coherent interactions in applications like chatbots and virtual assistants. |
| Federated RAG | Researchers are exploring federated RAG systems to address privacy concerns and enable collaborative learning. These systems would allow multiple organizations to train and improve RAG models without sharing sensitive data. |
| Quantum-Inspired RAG | As quantum computing advances, quantum-inspired algorithms could enhance the retrieval component of RAG systems, potentially leading to faster and more accurate information retrieval. |
| Explainable RAG | There's an increasing focus on making RAG systems more transparent and interpretable. This includes developing methods to explain why certain information was retrieved and how it influenced the generated output. |
| Adaptive RAG | Future RAG systems may dynamically adapt their retrieval and generation strategies based on the task, user preferences, and available computational resources. |
| Cross-Lingual and Multilingual RAG | Researchers are working on RAG systems that can seamlessly operate across multiple languages, retrieving information in one language and generating responses in another. |

Ongoing Research

Efficient Retrieval at Scale:

As knowledge bases grow larger, efficient retrieval becomes increasingly challenging. Ongoing research is focused on developing more scalable retrieval methods, including:

- Hierarchical retrieval architectures

- Learned index structures

- Approximate nearest neighbor search techniques
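The idea shared by hierarchical retrieval and approximate nearest neighbor search can be shown with a small two-level sketch: vectors are grouped under coarse centroids, and a query scans only the closest group instead of the whole collection. This is a toy illustration in plain Python, not a specific library’s algorithm; the vectors are made up and the centroids are fixed by hand, whereas a real system would typically learn them (for example with k-means).

```python
import math

def dist(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def build_index(vectors, centroids):
    """Coarse level: assign each vector id to its nearest centroid."""
    buckets = {i: [] for i in range(len(centroids))}
    for vid, vec in vectors.items():
        nearest = min(range(len(centroids)), key=lambda i: dist(vec, centroids[i]))
        buckets[nearest].append(vid)
    return buckets

def search(query, vectors, centroids, buckets, n_probe=1, top_k=2):
    """Fine level: scan only the n_probe closest buckets, then rank exactly."""
    order = sorted(range(len(centroids)), key=lambda i: dist(query, centroids[i]))
    candidates = [vid for i in order[:n_probe] for vid in buckets[i]]
    return sorted(candidates, key=lambda vid: dist(query, vectors[vid]))[:top_k]

# Hypothetical 2-D "embeddings" forming two well-separated groups.
VECTORS = {
    "a": (0.0, 0.1), "b": (0.2, 0.0), "c": (0.1, 0.3),
    "x": (5.0, 5.1), "y": (5.2, 4.9), "z": (4.8, 5.0),
}
CENTROIDS = [(0.0, 0.0), (5.0, 5.0)]  # assumed, hand-picked for the example

buckets = build_index(VECTORS, CENTROIDS)
result = search((5.0, 5.0), VECTORS, CENTROIDS, buckets)
print(result)
```

The search is approximate because a true neighbor sitting just across a bucket boundary can be missed; raising `n_probe` trades speed for recall.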

Dynamic Knowledge Incorporation:

Researchers are exploring ways to make RAG systems more adaptable to new information. This includes:

- Continuous learning approaches that can update the retrieval and generation components in real time

- Methods for quickly incorporating new knowledge without full retraining
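The simplest form of incorporating new knowledge without retraining is to update the retrieval index while leaving the generator untouched. The sketch below assumes a toy inverted index; the `LiveIndex` class and its method names are hypothetical, and a production system would more likely upsert embeddings into a vector store. The point it illustrates is that a revised document replaces its stale version immediately.

```python
from collections import defaultdict

class LiveIndex:
    """Toy inverted index that accepts new or revised documents at any time."""

    def __init__(self):
        self.docs = {}                    # doc_id -> current text
        self.postings = defaultdict(set)  # word -> ids of docs containing it

    def upsert(self, doc_id, text):
        self.remove(doc_id)  # drop stale postings if the document already exists
        self.docs[doc_id] = text
        for word in set(text.lower().split()):
            self.postings[word].add(doc_id)

    def remove(self, doc_id):
        old = self.docs.pop(doc_id, None)
        if old is None:
            return
        for word in set(old.lower().split()):
            self.postings[word].discard(doc_id)

    def search(self, query):
        """Return doc ids ranked by the number of distinct query words they contain."""
        hits = defaultdict(int)
        for word in set(query.lower().split()):
            for doc_id in self.postings.get(word, ()):
                hits[doc_id] += 1
        return sorted(hits, key=lambda d: (-hits[d], d))

index = LiveIndex()
index.upsert("policy", "refunds are accepted within 30 days")
before = index.search("30")
index.upsert("policy", "refunds are accepted within 60 days")  # revised policy
after_old = index.search("30")
after_new = index.search("60")
print(before, after_old, after_new)
```

Because the language model only sees what retrieval hands it, the next query after the update is answered from the revised text with no model training involved.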

Retrieval Augmented Training:

Beyond using retrieval during inference, ongoing work is being done on incorporating retrieval into the training process. This could lead to more knowledgeable and efficient language models.

Improved Integration of Retrieved Information:

Current research is focusing on better ways to integrate retrieved information into the generation process, including:

- More sophisticated attention mechanisms

- Methods for resolving conflicts between retrieved information and the model’s built-in knowledge

Bias Mitigation in RAG Systems:

Researchers are investigating techniques to identify and mitigate biases in RAG systems’ retrieval and generation components.

RAG for Specialized Domains:

There’s ongoing work on adapting RAG systems for highly specialized domains like scientific research, legal analysis, and medical diagnosis. This includes developing domain-specific retrieval and generation models.

Long-form Generation with RAG:

Researchers are exploring ways to use RAG to generate longer, more coherent text, such as full articles or reports.

Meta-learning for RAG:

There is interest in developing RAG systems that can quickly adapt to new tasks or domains with minimal fine-tuning using meta-learning techniques.

Significant Papers:

Several recent papers have made important contributions to the field of Retrieval-Augmented Generation:

- “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks” (Lewis et al., 2020): This seminal paper introduced the RAG framework, demonstrating its effectiveness across various NLP tasks. It laid the groundwork for much of the subsequent research in this area.

- “REALM: Retrieval-Augmented Language Model Pre-Training” (Guu et al., 2020): This paper proposed a method for incorporating a learned retriever into the pre-training process of language models, showing improved accuracy on open-domain question answering benchmarks.

- “Dense Passage Retrieval for Open-Domain Question Answering” (Karpukhin et al., 2020): While not specifically about RAG, this paper introduced significant improvements in dense retrieval methods, which have been widely adopted in RAG systems.

- “Leveraging Passage Retrieval with Generative Models for Open Domain Question Answering” (Izacard and Grave, 2021): This work proposed the Fusion-in-Decoder approach, which improved the integration of retrieved passages in the generation process.

- “Retrieval-Enhanced Machine Learning” (Zamani et al., 2022): This paper provided a broader perspective on retrieval-augmented machine learning, discussing applications beyond NLP and potential future directions.

- “Improving Language Models by Retrieving from Trillions of Tokens” (Borgeaud et al., 2022): This work from DeepMind introduced RETRO, an architecture for retrieval-enhanced language models that scales efficiently to large retrieval databases. It showed that retrieving from a corpus of trillions of tokens can significantly improve language model performance.

- “Atlas: Few-shot Learning with Retrieval Augmented Language Models” (Izacard et al., 2022): This work explored using RAG for few-shot learning, showing how retrieval can enhance a model’s ability to adapt to new tasks with limited examples.

These ongoing research directions and emerging trends highlight the dynamic nature of the field and the significant potential for further advancements in Retrieval-Augmented Generation. As researchers continue pushing the boundaries of what’s possible with RAG, we expect to see more powerful, efficient, and versatile systems that can handle increasingly complex tasks across various domains.

Conclusion

Throughout this exploration, we’ve delved into RAG’s core concepts, examining its inner workings, benefits, and wide-ranging applications across various industries. We’ve seen how RAG addresses key limitations of conventional language models, such as the inability to access up-to-date information and the tendency to generate inaccurate or hallucinated content. By grounding generated responses in retrieved information, RAG systems can provide more reliable, factual, and contextually relevant outputs.

The benefits of RAG are manifold: compared with traditional approaches, it offers improved accuracy, enhanced knowledge integration, and increased efficiency and scalability. Its ability to dynamically incorporate external knowledge makes it particularly well-suited for knowledge-intensive tasks and specialized domains, where access to accurate and up-to-date information is crucial.

We’ve explored diverse applications of RAG across industries, from enhancing customer support and assisting in medical research to revolutionizing legal research and personalized education. These case studies and examples demonstrate RAG’s versatility and transformative potential in solving real-world problems and improving decision-making processes.

The implementation of RAG, while complex, is becoming increasingly accessible thanks to the growing ecosystem of tools, libraries, and frameworks. As we’ve discussed, successful implementation requires careful consideration of various factors, from choosing the right knowledge base and retrieval method to designing an effective integration between retrieval and generation components.

Looking to the future, the field of RAG is rich with promising research directions and emerging trends. From multimodal and conversational RAG to quantum-inspired approaches and explainable systems, ongoing research continues to push the boundaries of what’s possible with this technology. As RAG systems become more sophisticated, we expect more innovative applications and use cases to emerge.

However, as with any powerful technology, the development and deployment of RAG systems come with responsibilities. Issues of bias, privacy, and ethical use of information must be carefully considered and addressed. The challenge lies in harnessing the power of RAG while ensuring its responsible and beneficial use for society.
