What is Serverless Computing, and How Can It Transform Your Business?

Gururaj Singh

May 2, 2025

What if developers didn’t need to manage servers and could focus solely on coding? Fortunately, this idea has become reality, with serverless computing simplifying application development and bringing much-needed innovation to cloud computing. This is also why more than 50% of AWS, Google Cloud, and Azure customers depend on one or more serverless solutions.

The market is expected to reach a record USD 44.7 billion by 2029, and the benefits go far beyond reduced costs, improved scalability, and enhanced performance.

Even Werner Vogels, Amazon’s CTO, said, “Serverless has to be the best experience evolution of cloud computing, as you can build great applications without the hassles of infrastructure management.”

Well, there is much more to explore about serverless computing. What is it all about? How does it work, and why are organizations investing heavily in it? This blog will decode its benefits and limitations and help you decide if it fits your organization.

What is Serverless Computing?

When we talk about serverless computing, we don’t mean that there are no servers; it’s just that developers can code without worrying about them. One option is Backend-as-a-Service (BaaS) from a trusted provider, who will manage databases, file storage, and authentication for you. Serverless environments work on pay-as-you-go models, so you pay only for the computation you use. This means you don’t need to reserve a fixed number of servers or a fixed amount of network bandwidth, which can help you save costs.

If you wish to go beyond databases and storage, you can invest in Function-as-a-Service (FaaS). This way, your developers can run small pieces of code (functions) to build a modular architecture. These functions are usually stateless and don’t retain any information between executions; each invocation is treated independently. Serverless computing is frequently used with microservices architectures, where small and independent functions handle specific tasks.

In the long run, serverless computing helps you cut the time to market. Developers don’t need to go through lengthy processes to roll out and fix applications; they can update individual functions in a few steps.

How Does Serverless Computing Work?

Serverless architectures follow a simple process; let’s look at how they work.

- Developers write code for a stateless function. This could be anything from resizing an image to sending a notification.

- Once the code has been written, it is uploaded to the serverless platform, and execution limits are defined.

- The function is then tied to event triggers, such as an HTTP request or a file upload, which determine when it runs.

- The platform detects the event and creates an execution environment to run the function. In this stage, necessary resources are allocated, and the function code is loaded with dependencies.

- The function is executed and isolated to ensure no interference with other functions.

- The platform scales resources per the demand, and you are billed only for the compute time.

- The function finally returns the output per the given input.

- The environment is destroyed once the function is executed, and the resources are released to keep costs minimal.
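The steps above can be sketched in a few lines of Python. This is a minimal local simulation, not any provider's API: the `TRIGGERS` registry, the `on_event` decorator, the `dispatch` function, and the `image.uploaded` event name are all hypothetical stand-ins for what a serverless platform does for you.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry that maps event types to functions. It stands in for
# the trigger configuration a real platform would hold.
TRIGGERS: Dict[str, Callable[[Dict[str, Any]], Dict[str, Any]]] = {}


def on_event(event_type: str):
    """Register a function as the handler for one event type."""
    def decorator(func):
        TRIGGERS[event_type] = func
        return func
    return decorator


@on_event("image.uploaded")
def resize_image(event: Dict[str, Any]) -> Dict[str, Any]:
    """Stateless function: the output depends only on the incoming event."""
    width, height = event["width"], event["height"]
    max_side = event.get("max_side", 256)
    # Never scale up; shrink so the longest side fits within max_side.
    scale = min(1.0, max_side / max(width, height))
    return {"width": round(width * scale), "height": round(height * scale)}


def dispatch(event_type: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Simulate the platform: find the function for the event, run it, return its output."""
    handler = TRIGGERS[event_type]
    # Round-trip through JSON so the function gets its own copy of the input.
    isolated_payload = json.loads(json.dumps(payload))
    return handler(isolated_payload)


if __name__ == "__main__":
    print(dispatch("image.uploaded", {"width": 1024, "height": 512}))
```

Because `resize_image` keeps no state between calls, the platform is free to run any number of copies in parallel and discard each environment afterwards.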

Components of Serverless Architecture

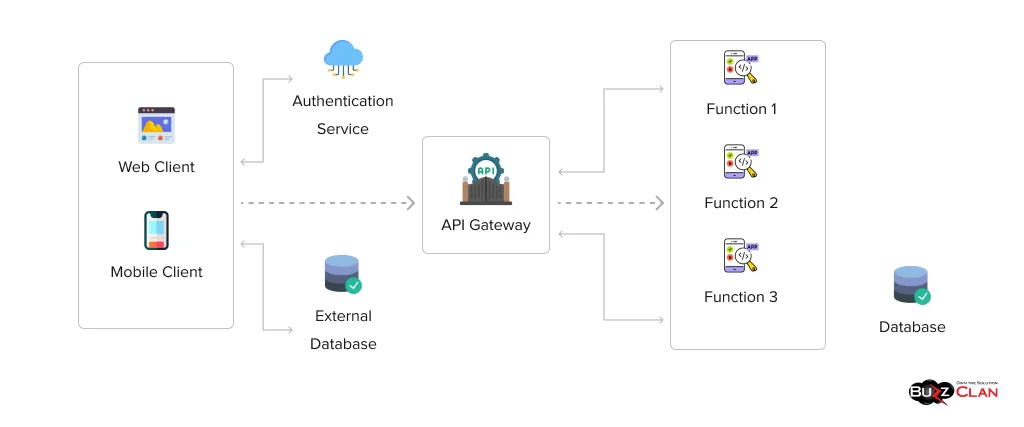

Let’s understand how the main components of serverless architecture work.

- Function as a Service (FaaS): This component forms the core of serverless computing. Here, you write functions that are executed in response to events, and once the job is done, they are shut down.

- Event Triggers: These components tell your function when to run. For example, if you upload a file to storage, a function will be triggered to process it.

- API Gateway: It routes incoming requests to the correct serverless function.

- Backend-as-a-Service (BaaS): These services handle authentication, databases, and file storage and free you from the hassle of managing infrastructure.

- Serverless Orchestration and Workflow Services: These components coordinate multiple serverless functions and services so they can work together in complex workflows.

- Monitoring and Logging: These tools track the performance and errors of your functions, helping you debug and optimize them.

- Continuous Integration and Continuous Delivery (CI/CD): These pipelines automate the deployment of serverless functions. Developers can quickly change applications and push updates with minimal downtime.

Serverless vs. Traditional Cloud Computing

Let’s understand how serverless computing differs from traditional computing.

| Parameter | Serverless Computing | Traditional Cloud Computing |
|---|---|---|
| Infrastructure Management | The cloud provider fully manages the infrastructure. | You are responsible for configuring servers. |
| Scalability | Scaling is automatic and event-driven. | The scaling can be manual or automated, and you must configure your systems. |
| Control and Customization | You will have limited control over infrastructure and runtime environments. | You will have complete control over operating systems, servers, and configurations. |
| Use Case | These are best suited for stateless applications and event-driven microservices. | Ideal for legacy applications. |
| Resource Allocation | Resources are allocated dynamically based on workloads. | The resources are provisioned in advance. |
| Latency | You may experience cold starts due to infrequent executions. | They offer consistent performance and have no cold starts. |
| Deployment Speed | These are faster to deploy as the focus is on code. | The deployment process is longer as you must set up and maintain the server. |
| Monitoring and Debugging | Distributed functions make monitoring and debugging complex. Special tools are required for the same. | Monitoring and debugging are easier with centralized infrastructure and tools. |
| Vendor Lock-In | There is a high risk of vendor lock-in as you can get dependent on serverless frameworks. | Less risk, as VMs and containers can be migrated. |
| Cost at Scale | It can be expensive for heavy workloads. | Ideal for high-throughput workloads. |

Why is Serverless Computing Important?

Serverless computing has gained massive importance because it relieves developers of a significant responsibility—managing servers. This allows them to deliver applications faster. You can also save costs, as you only pay for the actual usage, not idle server time. Let’s understand the importance of serverless implementation in IT environments.

- Seamless Scalability: Serverless platforms provide automated scaling to meet your demands. If there is a sudden surge in traffic, you don’t need to configure your application manually. The platform will take care of this and ensure your app always remains responsive.

- Improved Reliability: No customer or business wants to deal with unreliable applications. Serverless computing eases this issue. You can quickly build fault-tolerant applications that seamlessly handle failures. Added advantages include reduced downtime and high availability at all times.

- Better Resource Utilization: Zero waste has to be serverless computing’s best benefit. By preventing resource under- and overuse, you can lower costs and benefit the environment in the long run.

- Event-Driven Architecture: Serverless computing integrates well with event-driven architectures, allowing for a flexible approach to application building.

Security in Serverless Architecture

When applications face security risks, organizations often depend on traditional methods such as firewalls and advanced intrusion detection tools to protect their systems. However, these methods are not enough for serverless architectures. Let’s understand why, along with the security best practices you must follow to keep your serverless environments protected.

What is Serverless Security?

In serverless architecture, the focus shifts from inspecting networks to protecting code. Serverless security adds a layer of protection to applications by securing the functions themselves. This is a win-win for developers, as it supports compliance and strengthens application security.

Is Serverless Architecture Secure?

While serverless architectures are great for application development and scaling with ease, there are security concerns you need to address. Once you address them, you can reduce the risk of threats and keep your applications secure.

Serverless Security Risks

Here is a list of risks you must consider when working with serverless applications.

- Since serverless functions are short-lived, traditional monitoring tools are not apt to detect threats in these environments, let alone respond to them in real-time. These tools cannot track API calls or suspicious activities in serverless applications.

- HTTP requests or changes in object storage trigger functions. However, if these are not validated, attackers can inject malicious code that harms applications.

- Not all functions require admin permissions. Granting excessive permissions only invites threats, and hackers can access all resources in the cloud environment.

- Serverless applications often depend on third-party libraries or SDKs (Software Development Kits). Unfortunately, attackers can exploit these dependencies and gain access to sensitive information.

- Since serverless functions automatically scale, attackers abuse this aspect to trigger multiple functions, leading to poor performance and high costs.

- Cold starts lead to a poor user experience and allow attackers to exploit idle or vulnerable functions.

Serverless Security Best Practices

Here is what you must do to protect your applications from basic and advanced threats.

- Ensure you grant serverless functions only the privileges they need to perform their tasks. Identity and access management (IAM) is necessary for these environments, with regular permission audits to stay on top of compliance needs.

- Ensure all the inputs are validated; not doing so invites unauthorized access.

- Use advanced logging tools to monitor activities and implement real-time alerts for suspicious activity. To increase security and visibility, invest in third-party tools.

- Encrypt data and use runtime protection tools to block threats during execution.

- Lastly, regular penetration tests should be conducted to identify application weaknesses and simulate attacks periodically to test the strength of your functions.
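To make the input-validation practice above concrete, here is a minimal sketch of a function that rejects malformed events before doing any work. The `ALLOWED_ACTIONS` set, the field names `action` and `item_id`, and the response shape are assumptions made for illustration, not part of any specific platform.

```python
from typing import Any, Dict

# Hypothetical allow-list: only these actions are ever accepted.
ALLOWED_ACTIONS = {"create", "delete"}


def validate_event(event: Dict[str, Any]) -> Dict[str, str]:
    """Return a cleaned copy of the event, or raise ValueError if it is invalid."""
    action = event.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError("unsupported action")
    item_id = event.get("item_id")
    # Accept only short alphanumeric IDs; everything else is rejected outright.
    if not isinstance(item_id, str) or not item_id.isalnum() or len(item_id) > 64:
        raise ValueError("item_id must be 1-64 alphanumeric characters")
    return {"action": action, "item_id": item_id}


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Validate first, then act only on the cleaned values."""
    try:
        clean = validate_event(event)
    except ValueError as exc:
        return {"statusCode": 400, "body": str(exc)}
    return {"statusCode": 200, "body": f"{clean['action']} accepted for {clean['item_id']}"}
```

Using an allow-list rather than trying to strip out dangerous characters keeps the rule simple: anything not explicitly expected is refused.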

Advanced Topics in Serverless Computing

As serverless computing continues to evolve, several advanced topics and trends are emerging, pushing the boundaries of what’s possible with this technology. Let’s explore these advanced concepts in detail:

Serverless Edge Computing

Serverless edge computing combines serverless functions with edge computing to minimize lag. This is done by executing code near the source of the request without managing infrastructure. Since the scaling is automatic, fluctuating demands can easily be managed, and you won’t need to burn a hole in your pocket as you pay per computation. Streaming platforms use serverless edge computing to deliver video content from a location close to the user. However, serverless edge computing comes with its own set of challenges. Keeping distributed data consistent across edge locations can be difficult. Moreover, you will need to deal with vulnerabilities at multiple locations.

Databricks Serverless Compute

Databricks serverless compute is a managed service that lets you run analytics and machine learning workloads without managing the underlying cluster infrastructure. The compute capacity is adjusted automatically, and you are charged only for the resources consumed during computation. Fintech firms often use Databricks to detect potential fraud and analyze transactions in real time.

Latency and Performance Challenges

Cold starts are a common issue in serverless environments. The problem worsens if the edge locations are far from users or you are dealing with peak traffic. A traffic spike forces the platform to start many new instances, which means multiple cold starts and a poor user experience. To reduce these issues, look for providers that offer pre-initialized environments. Another solution is to balance loads using traffic management solutions. This will help you reduce cold starts and maintain performance during high traffic.

Cost Management in Serverless Computing

Yes, serverless computing helps you save a lot on costs. However, all that glitters is not gold. You still need to check on storage aspects, which can add to extra expenses. This is a common issue when you are dealing with peak workloads. So, how can you maintain peak performance without spending a lot? Well, you need to set alerts and analyze your spending patterns. You can also use cloud cost management solutions to reduce your efforts.

Compliance and Data Governance

Guaranteeing compliance and data governance are significant concerns in serverless environments. Third-party providers govern these functions and may not follow all the regulations. As a result, you may face high fines and penalties. Moreover, it can also lead to reputational damage if you fail to protect your user data. So, what can be done to eliminate these issues? Ensure you only work with providers with compliance certifications and know the regions where serverless functions are deployed.

Multi-Cloud Serverless

Multi-cloud serverless computing lets you deploy serverless applications across various cloud providers. How does this benefit your business? Not only can you reduce the risk of vendor lock-in, but you can also enhance redundancy and leverage the best features of different cloud platforms. These architectures use APIs, Kubernetes, and containerization to ensure a seamless experience and portability across providers. However, there are trade-offs: you will need to deal with variations in latency and data synchronization issues. Luckily, abstraction layers (which provide a uniform interface so containers can run without modification on any platform) and cloud-agnostic development frameworks can help you manage them.
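One way to picture the abstraction-layer idea is to keep business logic free of provider details and wrap it in thin adapters. The sketch below is illustrative only: `greet` is a hypothetical piece of business logic, `aws_style_handler` assumes a simplified HTTP-style event whose `body` is a JSON string, and `generic_http_handler` assumes a platform that hands over already-parsed JSON.

```python
import json
from typing import Any, Dict


def greet(name: str) -> Dict[str, str]:
    """Provider-independent business logic with no cloud-specific imports."""
    return {"message": f"Hello, {name}!"}


def aws_style_handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Adapter for an event shaped like an HTTP proxy event (assumed, simplified)."""
    body = json.loads(event.get("body") or "{}")
    result = greet(body.get("name", "world"))
    return {"statusCode": 200, "body": json.dumps(result)}


def generic_http_handler(request_json: Dict[str, Any]) -> str:
    """Adapter for a platform that passes parsed JSON and expects a string back (assumed)."""
    return json.dumps(greet(request_json.get("name", "world")))
```

Moving to another provider then means writing one more small adapter rather than rewriting `greet`.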

Performance Optimization Techniques

Businesses worldwide are betting high stakes on serverless computing due to its phenomenal capabilities. However, performance optimization continues to be a significant cause of concern in serverless environments. Improving execution speed, minimizing latency, and ensuring optimum resource utilization are aspects every cloud provider works on. For starters, you will need to work on mitigating cold starts. This is possible by keeping functions warm with scheduled invocations (for example, triggering the function on a fixed schedule).

Another action that can be taken is to optimize your code size and dependencies to reduce the start time. At the same time, you can increase responsiveness with asynchronous processing and event-driven architectures. Caching mechanisms and content delivery networks (CDNs) become your saviors for that extra edge. And why do we say that? Well, they boost performance by serving repeated requests without recomputing the result, and reusing database connections across invocations reduces network overhead further.
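As a rough illustration of two of these techniques, the sketch below does its setup once at module level, where it runs on a cold start and is reused by later warm invocations, and caches repeated lookups in memory. `CONFIG`, `slow_lookup`, and the `time.sleep` delay are placeholders for real configuration loading and a real slow dependency.

```python
import functools
import time

# Module-level setup runs once per execution environment (on a cold start)
# and is reused by every warm invocation that follows.
CONFIG = {"greeting": "Hello"}  # stands in for expensive setup work


@functools.lru_cache(maxsize=128)
def slow_lookup(key: str) -> str:
    """Simulated slow dependency; results are cached per execution environment."""
    time.sleep(0.01)  # placeholder for network or database latency
    return key.upper()


def handler(event, context=None):
    """Only per-request work happens here; setup and repeated lookups are reused."""
    name = slow_lookup(event["name"])
    return f"{CONFIG['greeting']}, {name}"
```

The cache lives only as long as the execution environment does, so it speeds up warm invocations but should never be treated as durable storage.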

Cost Optimization Strategies

Have you ever wondered how you can optimize your costs in serverless environments? Consider your job done if you can minimize idle time, manage resources well, and reduce function execution costs. Since serverless pricing works solely on execution time and resources consumed, ensure your code is optimized. This will help you minimize runtime. Another key aspect is to ensure your memory is allocated correctly to find the perfect balance between performance and costs. Here is a list of actions that can be taken to manage costs in serverless environments:

- Implement event-driven scaling and function chaining to control unnecessary invocations.

- Ensure you use free-tier limits, spot instances, and efficient logging practices to reduce costs.

- Use monitoring tools to track your usage patterns and keep an eye on your expenses.
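Since pricing depends on execution time and the resources consumed, a quick estimate can show how memory allocation and runtime affect the bill. The function below is a back-of-the-envelope sketch: the formula (requests plus GB-seconds of compute) mirrors a common pricing structure, but the rates used in the example are made-up placeholders, so substitute your provider's published prices.

```python
def estimate_monthly_cost(invocations: int,
                          avg_duration_ms: float,
                          memory_mb: int,
                          price_per_gb_second: float,
                          price_per_million_requests: float,
                          free_requests: int = 0) -> float:
    """Estimate a monthly bill as compute cost (GB-seconds) plus request cost."""
    billable_requests = max(0, invocations - free_requests)
    # GB-seconds = invocations x seconds per invocation x memory in GB.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = billable_requests / 1_000_000 * price_per_million_requests
    return round(compute_cost + request_cost, 2)


if __name__ == "__main__":
    # Placeholder rates, not real prices.
    rates = dict(price_per_gb_second=0.00002, price_per_million_requests=0.20)
    print(estimate_monthly_cost(3_000_000, 200, 512, **rates))
    print(estimate_monthly_cost(3_000_000, 200, 256, **rates))
```

With these placeholder rates, halving the memory from 512 MB to 256 MB halves the compute portion of the estimate, which is why right-sizing memory is worth testing, as long as the function does not run slower as a result.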

DevOps Integration in Serverless Computing

DevOps plays a significant role in serverless environments by ensuring seamless application delivery. You can monitor performance and automate deployments. You also get access to observability solutions and automated testing, which will help you manage serverless functions. If a retail company uses AWS Lambda, it can integrate DevOps pipelines to automatically deploy updates and roll them back in response to code changes, thus ensuring smooth and reliable operations.

Impact on DevOps and CI/CD Pipelines

Since serverless functions are event-driven, traditional deployment models must be adapted for seamless automation. Once you fine-tune them, they can help you sail smoothly through the deployment process. If a fintech company uses Azure Functions to implement an automated pipeline, it can reduce downtime and quickly boost agility. This is possible because each code change triggers a series of tests and deployments without manual effort.

Best Practices for Serverless CI/CD

Now that you know the impact of DevOps and CI/CD Pipelines, it is time to understand how to make these practices work for your organization. You can start with the basics, which include infrastructure as code (IaC) management, automated testing, and ensuring security at all levels. Not only will IaC tools help you deploy consistently across environments, but with automated testing, you will also be able to ensure reliability. Also, you must remember that securing applications is one thing, but secure coding practices will help you boost your overall security posture.

Migration Strategies for Serverless Adoption

If you plan on moving to a serverless architecture, you need a rock-solid cloud migration strategy to ensure top-notch performance and seamless transition. Here are two approaches that will help you sail through the waters:

You can use the rebuilding approach, in which applications are designed with serverless functions in mind, and specific components from legacy systems are kept as-is.

You can also use the lift-and-shift approach, where workloads are moved with minimal changes and optimized over time.

If a travel booking company wishes to shift its application to a cloud provider, it can start by migrating its customer-facing applications. Once this is done, the back-end processing tasks can be migrated, keeping disruption to a minimum.

Testing Practices in Serverless Computing

Testing practices for serverless architectures require unique approaches. Here is what you can do:

- To ensure that all individual functions work as expected, perform unit testing using frameworks like Jest (Node.js) or PyTest (Python).

- Integration testing will help you validate all interactions between functions, external databases, and APIs.

- With performance tests, you can identify all bottlenecks by simulating real-world traffic.

- Last but not least, security tests will help you verify compliance and least-privilege access.
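Because serverless functions are stateless, unit tests can call them directly with a sample event. The sketch below uses plain `assert` statements in PyTest-style test functions; `apply_discount` and its event fields are hypothetical and exist only to give the tests something to check.

```python
def apply_discount(event, context=None):
    """Hypothetical stateless function under test."""
    price = float(event["price"])
    percent = float(event.get("percent", 0))
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return {"total": round(price * (1 - percent / 100), 2)}


def test_applies_discount():
    # A 25% discount on 200 should leave 150.
    assert apply_discount({"price": 200, "percent": 25}) == {"total": 150.0}


def test_defaults_to_no_discount():
    assert apply_discount({"price": 80}) == {"total": 80.0}


def test_rejects_invalid_percent():
    try:
        apply_discount({"price": 10, "percent": 150})
    except ValueError:
        return
    raise AssertionError("expected ValueError for percent above 100")
```

Saved in a file whose name starts with `test_`, these functions should be discovered and run by PyTest without any cloud account or deployed function.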

Pros and Cons of Serverless Computing

Knowing the advantages and disadvantages of serverless architectures will help you make the right decisions for your business.

| Pros | Cons |
|---|---|
| You only pay for the actual usage and can easily avoid the cost of idle infrastructure. | You will experience a lag when you trigger functions after an idle period. |
| These environments scale up/down based on the demand. | You will be tied to a particular cloud provider’s services and APIs (application programming interfaces). |
| You don’t need to manage servers, updates, or patches. | Memory limits and maximum runtime restrict you. |
| You can look forward to more straightforward development and deployment processes. | It is more challenging to debug as functions are distributed. |
| Your developers can concentrate on writing code as the cloud provider manages the infrastructure. | You have less control over infrastructure and runtime environment. |
| You can deploy functions closer to users using edge computing. | Special tools are needed to monitor and trace functions. |
| They are ideal for applications with unpredictable or sporadic workloads. | The stateless nature of serverless computing can lead to complicated session data handling. |

Challenges in Serverless Computing

While serverless computing has multiple advantages, you must consider these challenges to use it best.

Cold Start and Latency

While lags were rarely an issue with always-on servers in traditional cloud computing, serverless computing is not free of them. When a function is invoked after a period of inactivity, the platform needs some time, typically from a fraction of a second to several seconds, to gather resources and set up the environment. This is known as a cold start, and it can cause lags that negatively affect user experience. The situation can worsen if you need to deal with high traffic.

You will need additional instances, which will lead to multiple cold starts. The inconsistencies may agitate your customers and cause them to leave bad reviews. To avoid such scenarios, choose providers who offer lower cold start times and use techniques like function warming.
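Function warming can be sketched as follows. A scheduler (not shown) would invoke the function periodically with a marker event so that an execution environment stays initialized; the `{"warmup": True}` marker and the response fields here are a hypothetical convention, not a platform feature.

```python
INVOCATIONS = 0  # module-level counter; resets whenever a new environment is created


def handler(event, context=None):
    """Return early for warm-up pings; do the real work for everything else."""
    global INVOCATIONS
    INVOCATIONS += 1
    first_call = INVOCATIONS == 1  # True only on the first call in this environment

    # Hypothetical convention: the scheduler sends {"warmup": True}.
    if event.get("warmup"):
        return {"warmed": True, "cold": first_call}

    return {"result": event.get("value", 0) * 2, "cold": first_call}
```

The warm-up branch returns before any real work is done, so the scheduled pings stay cheap while still keeping the environment alive.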

Vendor Lock-In

Serverless platforms often rely on provider-specific tools and APIs for configuration. While they offer a good user experience and speed, migrating workloads to another provider will be complex and expensive. The same applies if you plan to shift to an on-premises solution. You must also deal with limited flexibility and set aside extra budget to move providers. To avoid these hassles, you can adopt multi-cloud or hybrid cloud strategies and leverage open-source frameworks like OpenFaaS or Knative.

Complexity in Debugging and Monitoring

Serverless functions are distributed and have a limited execution time. While this is great for application development, it comes with a significant disadvantage: testers and developers find it harder to monitor and debug serverless applications than traditional ones. They end up wasting precious time finding the root cause of an issue. To deal with this, you must invest in distributed tracing tools and monitoring solutions that help you find and solve problems faster.
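A simple building block behind distributed tracing is a correlation ID that travels with a request through every function and appears in every log line. The sketch below shows the idea with the standard library only; the function names `validate_order` and `charge_order` and the log fields are hypothetical.

```python
import json
import logging
import uuid

logger = logging.getLogger("orders")


def log_event(correlation_id: str, step: str, **fields) -> str:
    """Emit one JSON log line that carries the correlation ID, and return it."""
    line = json.dumps({"correlation_id": correlation_id, "step": step, **fields},
                      sort_keys=True)
    logger.info(line)
    return line


def validate_order(event):
    """First function in the chain: reuse the caller's ID or create a new one."""
    cid = event.get("correlation_id") or str(uuid.uuid4())
    log_event(cid, "validate_order", items=len(event["items"]))
    return {"correlation_id": cid, "items": event["items"]}


def charge_order(event):
    """Second function: logs under the same ID so both entries can be joined."""
    log_event(event["correlation_id"], "charge_order", items=len(event["items"]))
    return {"correlation_id": event["correlation_id"], "status": "charged"}
```

Searching the logs for one correlation ID then returns the full path of a single request, even though each step ran in a separate, short-lived function.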

Limits on Resource Allocation

Compared to traditional cloud computing, serverless functions have many constraints. You cannot use resources on a whim, as you have minimal control over the infrastructure. This also means specific workloads cannot run in these environments. Also, if you wish to run an application with large memory requirements, your developers must build a different architecture. So, how can you solve these challenges? Ask developers to optimize code and use containers to handle resource-intensive workloads.

Security Concerns

The way serverless functions work also changes the security concerns you face. Because the provider manages the infrastructure, the function code itself becomes the main attack surface. You must ensure your function code is secure and protected against injection attacks. If a breach occurs in a serverless application, it can compromise sensitive data. Luckily, there are ways to address these issues. You must enforce least-privilege access to resources, validate inputs, and conduct dependency scanning.

Serverless Computing Platforms and Major Providers

Let’s have a look at the leading serverless computing providers.

| Parameter | AWS Lambda | Azure Functions | Google Cloud Functions | IBM Cloud Functions | Oracle Functions |
|---|---|---|---|---|---|
| Provider | Amazon Web Services | Microsoft Azure | Google Cloud Platform | IBM Cloud | Oracle Cloud |
| Max Execution Time | 15 minutes | 5 minutes (extendable to 60) | 9 minutes | 60 minutes | Unlimited |
| Language Support | | | | | |
| Cold Start Latency | Medium | Low to Medium | Low | Medium | Medium |
| Pricing Model | Pay-per-invocation + compute time | Pay-per-execution and duration | Pay-per-execution and duration | Pay-per-execution and duration | Pay-per-use based on execution of functions. |
| Free Tier | 1M executions per month | 1M executions per month | 2M requests per month | 5M requests per month | There is no specific free tier. |
| Stateful Workflows | AWS Step Functions | Durable Functions | Workflows | IBM Composer | Oracle Functions Workflows |
| Advantages | It is a mature ecosystem and has a global reach. | Strong enterprise tools and IDE support. | Low-latency networking | OpenWhisk-based and hybrid support. | Enterprise-grade reliability |
| Deployment Tools | | | | | |
| Limitations | Cold starts for infrequent functions | It is not a good option for small and medium-sized businesses. | Limited to the GCP ecosystem | Less popular in enterprise | Support for fewer regions. |
| Monitoring/Debugging Tools | | | | | |
| Use Cases | Real-time data processing | ETL, mobile backend, and microservices | Event-driven apps and media processing | AI/ML workflows and automation | Building enterprise-grade apps. |

Future Trends in Serverless Computing

Here is what you can expect in the future.

Reduced Latency with Edge Computing

Due to latency challenges and cold starts, businesses are wary of serverless computing. Luckily, this will not be the case in the coming years. Cloud providers will integrate edge computing with serverless functions and deploy them closer to the user, reducing latency and improving performance. This trend will broadly impact the IoT, gaming, and AR/VR industries, where ultra-low latency is needed. This is why Cloudflare and Amazon enable developers to execute serverless functions at edge locations.

Shorter Learning Curve for Developers

Developers have a lot on their plate. They must learn and implement everything from coding applications to staying updated with the latest tools and technologies. Luckily, there will be a steep rise in advanced tools and low-code/no-code platforms that will simplify serverless development and reduce the learning curve. The best part is that even users with a non-tech background will be able to create these applications and foster innovation.

Use of Blockchain In Serverless Functions

As blockchain’s prowess grows, so will serverless computing. The future will witness their integration, offering better support to decentralized applications (dApps) and smart contracts. This will benefit users by reducing infrastructure costs and making decentralized applications accessible to developers and businesses. The combination of security, scalability, and speed will lead to groundbreaking innovation.

Enhanced Focus on Monitoring and Debugging

Monitoring and debugging serverless architectures is difficult because they have limited execution time. As a result, testers and developers waste hours finding the main issue. However, this will not be the case in the coming years. Cloud providers will work on developing distributed tracing tools that will help them get deeper visibility into serverless workflows. This will also lead to improved monitoring capabilities and more businesses investing in serverless computing.

Cost-Effective Serverless Functions

The demand-supply gap will be closed in the future. What does that mean? More cloud providers offering serverless functions will lead to affordable pricing models. Businesses can also leverage free-tier expansions, encouraging small businesses and individuals to adopt serverless computing. Cloud providers will also offer customized plans for startups and small businesses, leading to the widespread adoption of serverless computing.

Use Cases and Real-World Examples of Serverless Computing

Let’s look at some use cases and top players in the serverless computing space.

Media Processing

You can build various multimedia services in serverless environments. You can use these services to transcode your videos for different devices or easily resize images. When your customers upload files, the upload event can trigger a function that processes them automatically. You can also analyze pictures in serverless architectures: connect an image recognition service to the upload event, and your serverless application will handle the rest.

Chatbots

Chatbots have been a savior for businesses, making responding to common user FAQs easier. You can quickly develop chatbots with serverless functions and pay only for the resources you use. But what makes these chatbots better than traditional chatbots? Well, you can automate many more functions by going serverless.

API Building

Serverless architectures let you create REST APIs with a basic web framework. Each function pulls the requested data from the backend, and lightweight libraries help format and send back the response. This way, your developers can focus on writing the code while the serverless environment takes care of everything else.
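A small REST-style function might look like the sketch below. It assumes an HTTP event with `httpMethod` and `path` fields and a response with `statusCode` and `body`, a shape that resembles common gateway integrations but is simplified here; the `ITEMS` dictionary is read-only sample data standing in for a real database.

```python
import json

# Read-only sample data; a real function would query a managed database instead.
ITEMS = {"1": {"id": "1", "name": "notebook"}}


def list_items(_event):
    return 200, list(ITEMS.values())


def get_item(event):
    item_id = event["path"].rsplit("/", 1)[-1]  # last path segment is the ID
    item = ITEMS.get(item_id)
    return (200, item) if item else (404, {"error": "not found"})


def handler(event, context=None):
    """Route the request by method and path, then wrap the result as an HTTP response."""
    method, path = event["httpMethod"], event["path"]
    if method == "GET" and path == "/items":
        status, payload = list_items(event)
    elif method == "GET" and path.startswith("/items/"):
        status, payload = get_item(event)
    else:
        status, payload = 405, {"error": "unsupported route"}
    return {"statusCode": status, "body": json.dumps(payload)}
```

In practice, the API gateway can also do the routing itself and map each route to its own function, which keeps individual functions even smaller.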

Building Webhooks

What can be done if your application needs to interact with SaaS services? A simple way to achieve this is by implementing a webhook. Webhooks receive events from external services, perform tasks in response, and send notifications. Webhooks built on a serverless architecture scale automatically and require minimal maintenance.
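Since a webhook endpoint is reachable by anyone who knows its URL, a common safeguard is to verify a signature on each request. The sketch below assumes the sender signs the raw request body with HMAC-SHA256 using a shared secret and sends the hex digest alongside it; the `SECRET` value and the response shape are placeholders, and real services document their own header names and signing schemes.

```python
import hashlib
import hmac

SECRET = b"replace-me"  # hypothetical shared secret; load it from a secrets store in practice


def sign(body: bytes, secret: bytes = SECRET) -> str:
    """Compute the hex HMAC-SHA256 signature of a request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def handle_webhook(body: bytes, signature: str) -> dict:
    """Accept the webhook only if the signature matches the body."""
    expected = sign(body)
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, signature):
        return {"statusCode": 401, "body": "invalid signature"}
    return {"statusCode": 200, "body": "accepted"}
```

A request whose body was altered in transit, or that was sent by someone without the secret, fails the comparison and is rejected before any task runs.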

Real-World Examples

Let’s see how companies globally are using serverless computing to their advantage.

Major League Baseball

We all know about Major League Baseball, one of the oldest-running professional sports leagues. However, have you ever wondered how it offers real-time statistics? This is all due to Statcast, which runs on serverless computing. Statcast lets you access accurate data and analyze baseball games based on season type, individual players, and pitch velocity.

Netflix

The good old Netflix owes its seamless content delivery and media processing capabilities to serverless computing. Using AWS Lambda, Netflix ensures that the content you receive is suitable for multiple devices and network conditions. Since serverless architecture lets Netflix automate scaling and process massive amounts of data, it has kept its sole focus on content creation and delivering superlative user experiences.

Tailor by Autodesk

The well-known Autodesk also swears by the prowess of serverless computing. The application known as Tailor lets businesses create custom accounts on Autodesk with all the required specifications. The best part is that they could launch the application in 14 days using serverless architectures. And the benefits are surely going to shock you!

Before Tailor existed, provisioning an account cost around $500 in staff time. Post-launch, the cost came down to $6 per account. Additionally, the serverless design reduced operational costs and management hassles.

Redefine Serverless Computing With BuzzClan’s Enterprise Cloud Solutions

Cloud experts at BuzzClan hold in-depth expertise in serverless computing. We help you analyze your infrastructure and provide solutions that best fit your business. We have helped 100+ enterprises implement cloud solutions and strongly emphasize customization and data security. If you are looking for a cloud provider to partner with you for your serverless computing needs, we are here to help you navigate the web. We specialize in:

- Automated compliance and monitoring

- Reduce cloud spend by up to 40%

- Real-time cost monitoring

- Predictive scaling capabilities

- ROI-focused implementation

- Reduce deployment time by up to 60%

- Seamless application modernization

- Cloud-native development expertise

- Industry-specific compliance expertise

Summing Up

Now that you know about serverless computing, it is time to analyze how to incorporate it into your cloud strategies. If your IT team is pressed for time, you can always hire a consultant who can help you with the same. When you use the right tools and techniques, you can handle fluctuating workloads and unburden your developers from managing servers. Also, faster deployments will become the new normal when they can entirely focus on code and pave the way for exciting possibilities.
